Summary

If I were to choose one overarching philosophy for how software should be developed, it would be to program for correctness — that is, do everything practical to reduce errors.

Introduction

There are many attributes we may want in geophysical software:

- Developed quickly

- Runs quickly

- Easy to use

- Well documented

- Correct

- Maintainable

- Portable

- Feature rich

- Technically advanced

All of these are worthy goals, but if you had to pick one to be the first priority in your software-development strategy, which would it be?

My choice would be correctness. Without this, all other items are moot. What good is software that is easy to use or runs fast if it produces the wrong answer? But in a way we don’t have to choose. Programming for correctness will with time improve other items on the list, foremost the speed of development and maintainability.

But a note on what I am not referring to. “Programming for correctness” is often associated with formal proofs of software correctness. While this field attracted much attention in the early years of computer science, it hasn’t had noticeable impact on the average programmer, and I suspect it never will.

So what do I mean by correct software? In day-to-day programming, it might mean software that:

1. Compiles.

2. Completes normally for at least one set of input.

3. Completes normally for common forms of input.

4. Completes normally for all valid input.

5. Gives the correct answer for at least one set of valid input.

6. Gives the correct answer for common forms of valid input.

7. Gives the correct answer for all valid input.

8. Gives the correct answer for all valid input, and properly handles all invalid input.

Too often developers achieve level 3 and declare the software to be working. We should set our sights higher.

Software correctness is particularly important for researchers. How many excellent ideas were abandoned because they weren’t implemented correctly, coding errors being mistaken for weaknesses in the algorithm? And there are many cases of new software working great for the data set it was developed with, but never giving good results after. Were there hardwired assumptions in the code that made it incorrect for all but the first data set?

But writing correct software is difficult. To achieve it, you must use every tool at your disposal, coming at the problem from many directions at once. Even then, you will not eradicate all errors — that is probably not possible. Improvement, not perfection, is the aim here.

Programming allows you immense freedom, but you must resist the temptation to use all of it. Writing quality software requires method and discipline so as not to make an enormous mess. This is called “software engineering”, a term coined in the 1960s when people realized that software was far harder to develop and maintain than they initially imagined.

The early literature on software engineering was accessible and rewarding to the everyday programmer, and is still worth reading today. In particular, structured programming (limit yourself to certain control structures, and avoid others like the dreaded “go to”), structured design (build modules with high cohesion and low coupling), and structured analysis (design and analyze software systems by how data flows through them) were all easily applied, and significantly improved the quality of software.

By the 1980s, software engineering had blossomed into a full-blown academic field, and the literature became increasingly abstract and decreasingly relevant to the everyday programmer. Some of it was certainly valuable for the architects of large systems, but to most programmers, software engineering was morphing into “Here are the 172 things you must do before you write a line of code”. In the last two decades, this trend has reversed somewhat with the introduction of lightweight styles such as agile development. Regrettably, this has sometimes been used as an excuse to return to undisciplined hacking.

My interest has always been in the practical everyday things that programmers can do to improve their products, without burdening them with mind-numbing bureaucracy. So how can you improve the correctness of code? Here are some ideas:

- Modularity

- Assertions

- Testing

- Write it twice

- Code reading

- Libraries

- High-level languages

- Automatic code analysis

- Source configuration management

Modularity

Without modularity (that is, writing software as small, independent functions or classes), forget it. You have little chance of producing sound software. Modularity is the foundation for quality.

There are three main styles for sound modular development:

- Structured design

- Object-oriented programming

- Functional programming

Structured design says you develop modules with high cohesion and low coupling. High cohesion means that a module is singular in purpose. It does one thing, with no side effects that are detectable by the rest of the software. Low coupling means that a module communicates with the rest of the software in a way that is simple, minimal, and through formal interfaces. Enemy #1 in coupling was the Fortran COMMON block, or in C the global or file-scope static variable.

The second approach is object-oriented programming. When it first became popular in the 1990s, some over-excited fans claimed that it was an utterly different way of thinking, and that companies should fire their old programmers because their minds were forever warped by past habits. This was a bone-headed suggestion, and I hope the advice was not followed. Those who practiced structured design recognized that object orientation was a natural extension of it. Such people were likely to make fine object-oriented programmers.

Just because you work in an object-oriented language and regularly define classes doesn’t mean you are an object-oriented programmer. Sound object-oriented design produces many small classes whose member functions have simple interfaces and serve to hide data and decisions from the rest of the software. Abstraction — “programming to an interface” — is the order of the day. Too many programmers, however, write massive, complicated classes whose long lists of data members, freely and directly accessed by large blocks of code, are indistinguishable from Fortran COMMON blocks.
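As a minimal sketch of “programming to an interface”, consider the Java fragment below. Every name in it (`VelocityModel`, `LayeredVelocityModel`, `TravelTime`) is hypothetical and invented for illustration. The point is only that the calling code sees one small method, while the arrays and the lookup decision stay hidden inside the class.

```java
import java.util.Arrays;

/** Hypothetical interface: callers depend on this, not on how velocities are stored. */
interface VelocityModel {
    /** Velocity in m/s at the given depth in metres (depth must be >= 0). */
    double velocityAt(double depthMetres);
}

/** One possible implementation: a stack of constant-velocity layers. */
final class LayeredVelocityModel implements VelocityModel {
    private final double[] layerTops;   // top depth of each layer, ascending, first is 0
    private final double[] velocities;  // velocity of each layer, same length

    LayeredVelocityModel(double[] layerTops, double[] velocities) {
        if (layerTops.length == 0 || layerTops.length != velocities.length
                || layerTops[0] != 0.0) {
            throw new IllegalArgumentException(
                "need matching arrays with the first layer starting at depth 0");
        }
        for (int i = 1; i < layerTops.length; i++) {
            if (layerTops[i] <= layerTops[i - 1]) {
                throw new IllegalArgumentException("layer tops must be strictly ascending");
            }
        }
        // Defensive copies, so no outside code can modify the hidden data.
        this.layerTops = layerTops.clone();
        this.velocities = velocities.clone();
    }

    @Override
    public double velocityAt(double depthMetres) {
        if (depthMetres < 0.0) {
            throw new IllegalArgumentException("depth must be non-negative");
        }
        int i = Arrays.binarySearch(layerTops, depthMetres);
        // When the key is not found, binarySearch returns -(insertionPoint) - 1,
        // so the layer containing the depth is insertionPoint - 1 = -i - 2.
        int layer = (i >= 0) ? i : -i - 2;
        return velocities[layer];
    }
}

/** Client code: knows only the interface, so any other model can be swapped in. */
final class TravelTime {
    /** One-way vertical travel time in seconds, summing dz / v over small depth steps. */
    static double vertical(VelocityModel model, double depthMetres, double stepMetres) {
        double seconds = 0.0;
        for (double z = 0.0; z < depthMetres; z += stepMetres) {
            double dz = Math.min(stepMetres, depthMetres - z);
            seconds += dz / model.velocityAt(z);
        }
        return seconds;
    }
}
```

If the layered model were later replaced by, say, a gridded one, `TravelTime.vertical` would not need to change, which is the practical payoff of hiding data and decisions behind a small interface.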

I strongly recommend object-oriented over structured design, but either approach is fine provided you do it well.

There is a third wave of software methodology crashing on our shores: functional programming, an old idea that is gaining widespread attention. It has some appealing features that, according to many, enhance correctness. Early reviews suggest that some hybrid of object-oriented and functional programming will be the development method of choice for the future. There are already languages like Java and Scala supporting this approach.
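To give a flavor of the hybrid style, here is a small sketch in Java (version 8 or later, which added streams and lambdas). The class `Amplitude` and its methods are hypothetical names chosen for illustration. Both methods compute the root-mean-square amplitude of a trace. The first uses a mutable accumulator and explicit indexing, while the second describes the calculation as a chain of transformations with no mutable state of its own.

```java
import java.util.Arrays;

final class Amplitude {
    /** Imperative style: mutable accumulator and explicit index arithmetic. */
    static double rmsLoop(double[] samples) {
        if (samples.length == 0) {
            throw new IllegalArgumentException("empty trace");
        }
        double sumOfSquares = 0.0;
        for (int i = 0; i < samples.length; i++) {
            sumOfSquares += samples[i] * samples[i];
        }
        return Math.sqrt(sumOfSquares / samples.length);
    }

    /** Functional style: square each sample, average, take the root. */
    static double rmsStream(double[] samples) {
        double meanSquare = Arrays.stream(samples)
            .map(x -> x * x)
            .average()  // empty OptionalDouble when there are no samples
            .orElseThrow(() -> new IllegalArgumentException("empty trace"));
        return Math.sqrt(meanSquare);
    }
}
```

The stream version has no index to get wrong and no accumulator to forget to reset, which is one reason its advocates argue that the style enhances correctness.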

Assertions

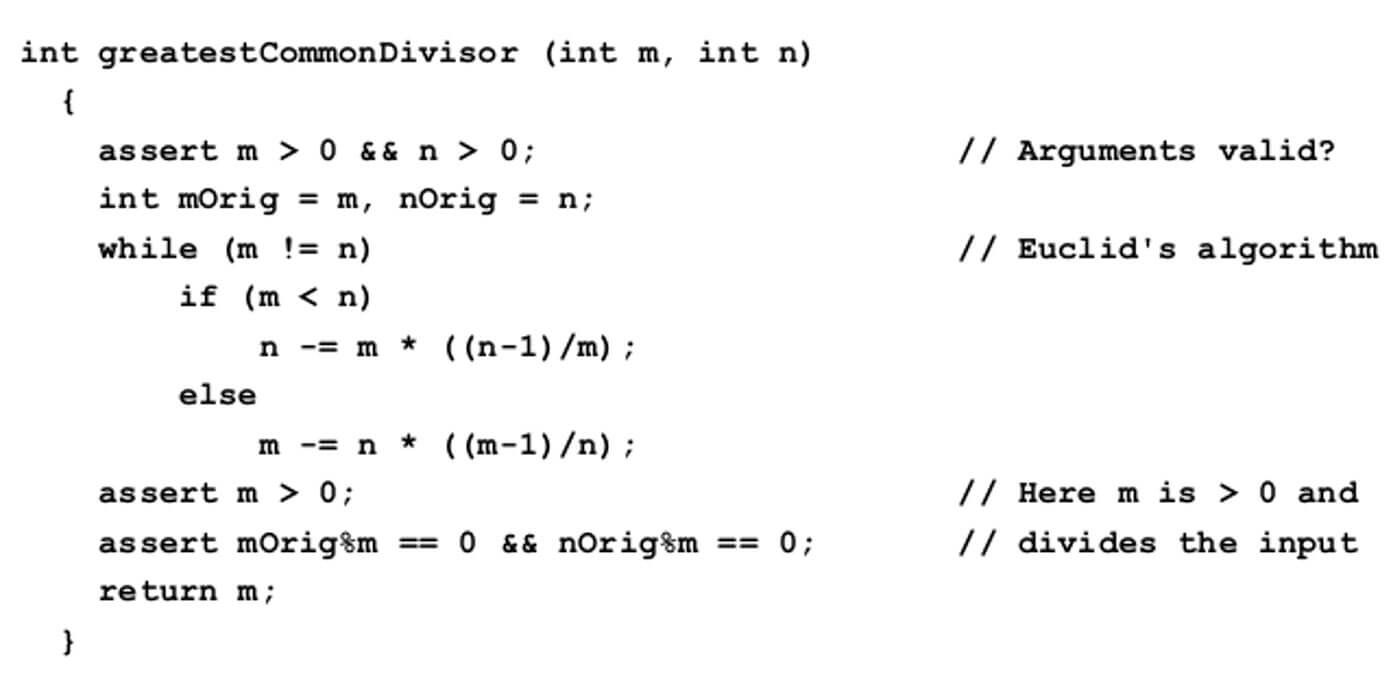

An assertion causes a program to abort if a Boolean expression evaluates to false, ensuring that a certain condition holds at a certain point in the code. The most common use is to check that function arguments have allowable values (preconditions), in effect testing every piece of code that invokes that function. Less common is to check that a block of code gives the expected output (postconditions), or that certain conditions hold after every iteration of a loop (loop invariants). Figure 1 shows a Java function calculating the greatest common divisor of two integers. Assertions provide a safety net for this short but unintuitive algorithm.
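For readers without access to the figure, the following is a rough sketch of what such a function might look like. It assumes Euclid's algorithm and positive arguments, so the code in Figure 1 may well differ in detail. It shows all three kinds of assertion mentioned above: a precondition, a loop invariant, and a postcondition.

```java
final class Gcd {
    /** Greatest common divisor of two positive integers (Euclid's algorithm, assumed here). */
    static int gcd(int a, int b) {
        // Precondition: checks every caller of this function.
        assert a > 0 && b > 0 : "gcd requires positive arguments, got " + a + " and " + b;

        int x = a;
        int y = b;
        while (y != 0) {
            // Loop invariant: x stays positive and y never goes negative.
            assert x > 0 && y >= 0 : "invariant broken: x=" + x + ", y=" + y;
            int remainder = x % y;
            x = y;
            y = remainder;
        }

        // Postcondition: the result is positive and divides both inputs.
        assert x > 0 && a % x == 0 && b % x == 0 : "bad gcd " + x + " for " + a + ", " + b;
        return x;
    }
}
```

Note that the Java runtime ignores `assert` statements unless assertions are switched on with the `-ea` (or `-enableassertions`) option, so they have to be enabled deliberately wherever you want the safety net.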

Few things are as simple and powerful as assertions. The concept has been greatly expanded, and is an integral part of “design by contract”. It is well worth studying in detail. But a warning. Assertions are designed to catch coding errors. Problems with user or data input should be handled in a more controlled and user-friendly manner.
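The distinction might be sketched as follows. The class `SampleInterval` and both of its methods are hypothetical. Text typed by a user is validated with ordinary checks that produce a readable message, while the internal helper uses an assertion, because a bad value arriving there can only mean that some calling code skipped the validation.

```java
final class SampleInterval {
    /**
     * Parses a user-supplied sample interval in milliseconds.
     * Bad input is the user's problem, not a coding error, so it is
     * reported with an exception carrying a readable message.
     */
    static double parseMillis(String text) {
        if (text == null) {
            throw new IllegalArgumentException("Sample interval is missing");
        }
        final double value;
        try {
            value = Double.parseDouble(text.trim());
        } catch (NumberFormatException e) {
            throw new IllegalArgumentException(
                "Sample interval is not a number: '" + text + "'", e);
        }
        // !(value > 0.0) also rejects NaN, which compares false with everything.
        if (!(value > 0.0) || Double.isInfinite(value)) {
            throw new IllegalArgumentException(
                "Sample interval must be a positive, finite number of milliseconds: '"
                + text + "'");
        }
        return value;
    }

    /**
     * Internal helper. Its argument should already have been validated,
     * so a non-positive value here indicates a bug in the calling code.
     */
    static double nyquistHz(double sampleIntervalMillis) {
        assert sampleIntervalMillis > 0.0 : "caller passed an unvalidated interval";
        return 1000.0 / (2.0 * sampleIntervalMillis);
    }
}
```

A caller can catch the `IllegalArgumentException` from `parseMillis` and ask the user to try again. An assertion failure in `nyquistHz`, by contrast, is a message to the programmer.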

One of the sillier ideas is that assertions should be turned off once the code is in production, on the assumption that the code is now correct and assertions just slow things down. Nonsense on both counts. Released software is almost never bug-free. In fact, production often reveals new problems that were missed during testing. Further, assertions rarely take a significant amount of run time. Most require trivial computation, as in the example above. The alternative to triggering an assertion in production is having the program crash somewhere in the code well removed from the error, or give incorrect results, perhaps without being detected. Neither is an inviting prospect.

Testing

The purpose of testing is to find as-yet-undiscovered deficiencies in the software. It can be broken down into three main types:

- Unit (Are there errors in a class or function?)

- Integration (Are there errors in how classes or functions work together?)

- System (Does a program or system fail to meet specifications?)

These are huge topics that cannot be adequately covered here, but if I were to recommend a place to begin, it would be with unit and integration testing supported by frameworks like CppUnit or JUnit. The impact on the quality of software and speed of development is immense. It is not possible to produce quality software without extensive unit tests. These returns compound when you start designing software so that it can be easily unit tested.

Write it twice

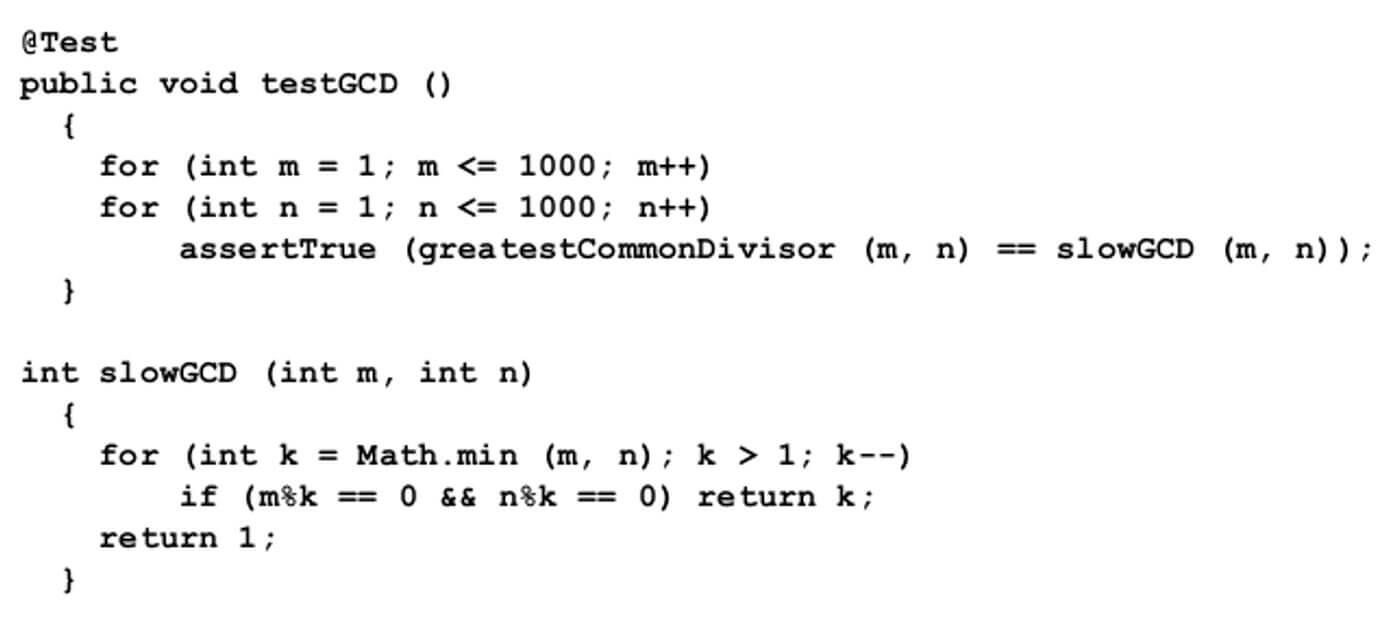

Suppose you have written a fast but complex algorithm. How do you test it? Write a slow brute-force version, which is often easy to do. Then compare the two using thousands or millions of generated test cases.

In Figure 2 I use the JUnit framework to test the greatest common divisor function shown in Figure 1 against a brute-force version. Between unit testing and the assertions within the function, we can be confident that this fast but non-obvious algorithm is correct.
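The same idea can be sketched without a test framework, which keeps the example self-contained. In the fragment below, the class `GcdCrossCheck`, the choice of Euclid's algorithm for the fast version, the value range, and the fixed random seed are all assumptions made for illustration rather than a reproduction of Figure 2.

```java
import java.util.Random;

final class GcdCrossCheck {
    /** Fast version under test (Euclid's algorithm, assumed here). */
    static int gcdFast(int a, int b) {
        while (b != 0) {
            int remainder = a % b;
            a = b;
            b = remainder;
        }
        return a;
    }

    /** Slow but obviously correct: try every candidate divisor, largest first. */
    static int gcdBruteForce(int a, int b) {
        for (int d = Math.min(a, b); d > 1; d--) {
            if (a % d == 0 && b % d == 0) {
                return d;
            }
        }
        return 1;  // 1 divides everything
    }

    /** Counts disagreements between the two versions over random positive pairs. */
    static int countMismatches(int trials, long seed) {
        Random random = new Random(seed);  // fixed seed makes any failure reproducible
        int mismatches = 0;
        for (int i = 0; i < trials; i++) {
            int a = 1 + random.nextInt(1000);  // values in 1..1000
            int b = 1 + random.nextInt(1000);
            if (gcdFast(a, b) != gcdBruteForce(a, b)) {
                mismatches++;
            }
        }
        return mismatches;
    }

    public static void main(String[] args) {
        System.out.println("mismatches: " + countMismatches(100_000, 42L));
    }
}
```

Any nonzero count means that at least one of the two versions is wrong. Because the brute-force version is simple enough to verify by inspection, suspicion falls first on the fast one.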

Code reading

Testing isn’t enough. There are many errors that you could test all day and never detect. You just didn’t think to try them. Reading code was something early programmers learned to do well. Computer time was scarce, so they inspected their code closely before wasting machine resources.

The best time to read code is first thing in the morning the day after writing it, when you’re relaxed and patient and can view the code with fresh eyes. Reading code in printed form gives a different perspective than viewing it on a screen. You will almost always find improvements.

But first, the code has to be written in a style meant to be read. Modularity is critical. An 800-line function is near impossible to comprehend. A collection of small classes or functions with simple, well-defined purposes and interfaces is intellectually manageable.

Libraries

The surest way to avoid new bugs is to not write new code. Reusable software libraries, both external and those you develop yourself, are a godsend for correctness and speed of production. Every programmer knows this. Very few act like they know this. The exigencies of the current project never seem to allow time to bring in outside software, or develop reusable libraries yourself. And let’s face it: developing high-quality reusable code that’s easy for others to understand and use is difficult and time consuming. The payoff, however, is enormous. Any time you find yourself writing or copying over the same code repeatedly, ask yourself if it belongs in a library. If the answer is yes, do it.

High-level languages

Programming languages are improving. Ever more tasks that the programmer had to handle are now being done by the language itself. Writing container structures and sorting-related algorithms mostly disappeared with the introduction of C++’s Standard Template Library. Memory leaks, uninitialized memory, and undetected array-bound violations (constant irritants in C and C++) mostly disappeared with Java. People noticed how a few pipelined Unix commands, often written on a single line, could do the work of hundreds of lines of C code, inspiring the development of Tcl, Perl, and the cascade of scripting languages that followed. Mathematical languages such as MATLAB and Maple let us treat vectors and matrices more like the abstract entities that they represent rather than as arrays of numbers that must be manipulated element by element.

We need to program at a higher level in languages naturally suited to the application, leaving tedious, error-prone details for the language to handle.

Automatic code analysis

Most popular languages have freely available automatic tools that can critique your code, identifying possible problems or improvements with little effort on your part. It’s like having a really sharp programmer reviewing your code for free. Typically the lower the level of the programming language, the more automatic analysis you need. With Fortran, C, and C++, for example, it’s near essential for quality software.

There are two types of code analysis: static, which inspects your source code, and dynamic, which finds problems during execution. Both are worth having. Profiling is a type of dynamic analysis which identifies where CPU and other resources go. Although its principal aim is to help you speed up execution, it’s surprising how often profilers find programming errors.

Source configuration management

One of the greatest sources of errors is not knowing what software is on the system and how it has changed. Often software problems get solved but not put in production because the programmer failed to update the system properly. And many times a new bug has appeared and the programmer is left wondering… “This used to work, so what happened? Did the system change?”

Source configuration management systems help solve these problems. If you don’t have one, or if you have one but it’s not used consistently, or it is not inextricably tied to production releases, you need to make changes.

Finally…

Geophysical software is often written by people with backgrounds in the earth sciences or engineering rather than computer science. As a result, they often have few software-engineering skills. If that’s true in your case, don’t fret. Pick a single idea from above that seems inviting and run with it. Become an expert by practicing and learning about it. Spread the methodology to your associates. Then, perhaps many months later, pick another idea and run with that.
