A number of articles have appeared in the Herald and other publications about the newly opened HPC (High Performance Computing) Centre. Since these articles identify seismic data processing as one of the main applications of the Centre’s computer, the CSEG Executive asked the RECORDER Editors to approach Roy Lindseth and ask him to write an article on what the Centre does and how he sees our industry using it. The following article was submitted by Roy in response to this request.

SUPERCOMPUTING

The invention of the 8086 computer chip in 1978 was the marvel of its time. With 29,000 transistors crammed into a few square centimetres, and capable of executing almost half a million instructions per second (about 0.5 MIPS, or million instructions per second), it provided power equal to some of the standard computers of the time at a fraction of the cost. So successful was that technology that it changed the world economy and the way it operates, and brought the mightiest of companies to their knees.

Today it would be difficult to give away an 8086 computer. Roughly every four years since then, a completely new chip has been introduced. The 80386, which has 275,000 transistors and runs at about 10 MIPS, has become the industry standard, although most people looking for performance today would buy a 486. For a few hundred dollars more than a 386, the 486, with over one million transistors, provides about 50 MIPS.

Perhaps even more important than speed, each generation of chip provides new features and capabilities, and to be truly successful it requires most of the other computer components to be upgraded and redesigned as well. After all, it really doesn’t help to have a computer chip run 50 times faster if the rest of the hardware cannot handle 50 times the data throughput. Often, all the software must then be updated too: software originally designed for 8-bit data handling does not perform well with 32- or 64-bit bus technology.

The limited capacity of the software industry to fully exploit new technology is certainly one reason why the 486 chip, although first introduced in 1989, has become widely popular only recently. Technically, the 486 has already been made obsolete by this year’s introduction of the Pentium, or 586 chip, with 3.1 million transistors and well over 100 MIPS of performance. Even so, the 486 will probably remain the processor of choice for the next 2 or 3 years, until the software industry catches up, the price comes down, and users can justify scrapping large amounts of hardware and software for which they paid top price only a short time ago.

For the moment, the Pentium (and its competitors) will find a home in power-hungry applications such as graphics and CAD, in high-end servers and technically demanding operations, and will be eagerly acquired by the visionaries, the developers of tomorrow’s superior technologies, and the early adopters of advanced technology.

The attention grabber in all this is that the supercomputer power of years past is now available in a desktop computer. Currently, it takes about 15 years for the supercomputer power of a given time to move down to the desktop. In that same time, however, supercomputer technology moves along as well, so the power ratio between small and large machines remains about the same.

In fact, “supercomputer” is merely a relative term, usually signifying the class of the ten or so most powerful computers available on the market at any given time, and the race for superior performance at this level is no less dramatic than it is for desktop machines. The power of supercomputers, as with any class of computers, advances steadily and substantially with time.

In spite of the rapid increase in power, the cost of a given class of computers has remained about the same, with the base price of the cheapest practical computer at about $1,000, and of the most expensive at about $10 million. Supercomputers are also normally about 1,000 times more powerful than desktop machines.

THE CALGARY HPC SUPERCOMPUTER INSTALLATION

An example of such a supercomputer installation is the HPC High Performance Computing Centre, headquartered in the Canterra Tower in Calgary, which has recently installed a Fujitsu VXP-240/10 Vector Supercomputer: a 2.5-Gigaflop machine with 512 Megabytes of Main Memory and one Gigabyte of auxiliary memory in its System Storage Unit (SSU). It brings to Calgary some of the very latest supercomputer technology for which vector software is generally available.

While certainly not the largest installation in Canada (a much larger machine at the Atmospheric & Environmental Sciences Centre in Quebec is dedicated to weather forecasting), it is the largest publicly available Canadian commercial installation, and is world class in size, power, and technology. It is currently listed as No. 143 in the world rankings, which might seem low until one learns that there are over 250 such installations in Japan alone.

The VXP-240/10 features both vector and scalar processors, operating under UXP/M, a Unix System V Release 4 operating system. This makes the supercomputer readily accessible to modern software developments and makes it relatively easy to port software from other Unix systems. Special optimizing compilers for the Fortran and C languages help adapt engineering and scientific application software to exploit the full power of the vector architecture.

The University of Calgary is already a substantial user of the HPC High Performance Computing Centre. Supercomputers are increasingly a basic research tool, and many investigations that once required lengthy and tedious laboratory experiments on physical equipment can now be modelled or simulated on supercomputers. Fluid dynamics, astrophysics and seismic processing are but a few examples of such research.

Other universities and industry across Canada will obtain access to HPC’s computing centre soon. Canada is about to be spanned with fibre optic communications links under the CANARIE project. CANARIE will provide coast-to-coast high-speed broad-band communications in a manner akin to the networks already in place and being developed in the United States. Fibre optic transmission lines provide bandwidth and data transmission rates which allow users’ facilities at any location to communicate directly with the supercomputer at supercomputer speeds.

Calgary’s downtown core has a basic fibre optic network already in place, a legacy of the 1988 Winter Olympics, giving it a head start on the Infoport initiative. Infoport will make Calgary a hub for data transfers and, with CANARIE, will enable Calgary industry, Alberta universities, and other world centres to access the HPC computer centre directly, as though they were at the supercomputer console.

Security is an important consideration on multi-user systems. Each user on this system receives a User Access Card, itself a small computer, which generates a new, lengthy random password every six seconds. A similar computer within the HPC Centre is synchronized to the Passcard. The two numbers must match at all times for any entry, making it extremely unlikely that a computer hacker could gain unauthorized access. Conventional password protection provides an additional layer of security.
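The article does not describe the card’s internal algorithm. As a minimal sketch of the time-synchronized idea only, the Python below uses the openly published TOTP construction (RFC 6238: an HMAC of the current time-step number, truncated to a short decimal code) as a stand-in. The six-second step comes from the article; the use of HMAC-SHA1, the shared secret, the eight-digit code length and the optional clock-drift window are assumptions for illustration.

```python
import hashlib
import hmac
import struct

STEP_SECONDS = 6  # from the article: a new passcode every six seconds


def passcode(secret: bytes, unix_time: float, digits: int = 8) -> str:
    """TOTP-style code for the time step containing unix_time (RFC 6238 construction)."""
    counter = int(unix_time // STEP_SECONDS)          # both sides derive the same step number
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                           # dynamic truncation (RFC 4226)
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def verify(secret: bytes, submitted: str, server_time: float, drift_steps: int = 0) -> bool:
    """Server side: accept only if the submitted code matches the server's own code.

    drift_steps = 0 demands an exact match for the current step; a small
    window (an assumption, not from the article) tolerates clock drift.
    """
    for delta in range(-drift_steps, drift_steps + 1):
        expected = passcode(secret, server_time + delta * STEP_SECONDS)
        if hmac.compare_digest(expected, submitted):
            return True
    return False
```

In use, the card computes `passcode(secret, now)` and the centre calls `verify(secret, code, now)` with its own clock; a code read off the card one step too late is rejected unless a drift window is allowed. Because the secret never travels over the network, an eavesdropper who captures one code gains nothing once the step expires.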

The HPC supercomputer is physically housed in the Secure Facility of SHL Systemhouse, one of the finest and most physically secure computer operations facilities in this part of the world, and accessed only through the high-speed Communications Network.

SEISMIC APPLICATIONS

There are few applications better suited to the Vector architecture of Calgary’s supercomputer than petroleum exploration and development computing. Reservoir modelling and seismic data processing, particularly 3D surveys, are ideally suited to this machine.

Seismic data processing is unique in the world of data processing. Other than the advanced research applications known as the Grand Challenges, it is one of the few commercial applications able to tax the capabilities of the very largest machines. It is also one of the few applications that combine very large data sets with intense number crunching. Most data processing applications feature one or the other, but rarely both.

It is not surprising to learn that nearly all major seismic processing routines and products were originally developed on the supercomputer of the day. Seismic research programmers have always been able to use all the available computing power, and have always thirsted for more power and memory to develop newer and better processing routines.

Consider 3D seismic surveying. The fundamental technique was developed several years ago, and the potential benefits of the method were demonstrated very early. Cost was an impediment to widespread use, but the benefits could easily be shown to far outweigh the cost. In fact, the major factor limiting early general adoption was process turnaround time. Few companies, and few prospects, could stand to wait a year or more to see the results of a marine 3D survey that took only a month or so to shoot.

Invariably, supercomputers were required to process early 3D surveys within any reasonable time, and they are still by far the best machines for the purpose; major international contractors depend on supercomputers for their main processing centres. Seismic data processing continues to be one of the most compute-intensive regular commercial production applications in the world.

The most advanced software continues to be at the supercomputer level, simply because it is the only practical device available today able to handle the immense tasks of the newest developments, such as pre-stack 3D migration and 3-component geophone recording, at reasonable throughput rates.

In spite of their initial cost, supercomputers can also be the most cost-effective machines for large processing jobs. Supercomputers are vector machines, which represent an intermediate stage of development toward the next generation of computer architecture, multiple parallel processors, by having the ability to carry out many operations simultaneously. Many seismic processing routines are extremely well adapted to vector operations. The HPC Fujitsu VXP-240 is well designed to handle the long vectors common to most seismic data sets. The Fujitsu VXP series is rapidly becoming the hardware of choice, with two large contractors recently announcing major installations.
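Why long vectors matter can be made concrete with a common textbook model of pipelined vector units, Hockney’s (r∞, n½) model: an operation on a vector of n elements takes time (n + n½)/r∞, so the sustained rate is r∞·n/(n + n½), which reaches half of peak at n = n½. The sketch below takes r∞ as the 2.5 Gigaflop peak quoted for the HPC machine; n½ = 100 is a hypothetical value, not a published figure for the VXP-240, and the vector lengths are illustrative.

```python
# Hockney's (r_inf, n_half) performance model for a pipelined vector unit.
PEAK_GFLOPS = 2.5   # r_inf: the 2.5 Gigaflop peak quoted for the HPC machine
N_HALF = 100.0      # n_half: hypothetical half-performance vector length


def vector_time_ns(n, r_inf=PEAK_GFLOPS, n_half=N_HALF):
    """Time in nanoseconds for one vector operation on n elements.

    t = (n + n_half) / r_inf; the n_half term models pipeline start-up,
    and 1 Gigaflop/s is one floating-point result per nanosecond.
    """
    return (n + n_half) / r_inf


def sustained_gflops(n, r_inf=PEAK_GFLOPS, n_half=N_HALF):
    """Achieved rate on vectors of length n: r_inf * n / (n + n_half)."""
    return n / vector_time_ns(n, r_inf, n_half)


if __name__ == "__main__":
    for n in (10, 100, 1000, 1500):
        rate = sustained_gflops(n)
        print(f"vector length {n:5d}: {rate:.2f} Gigaflops ({rate / PEAK_GFLOPS:.0%} of peak)")
```

Under these assumptions, a 1,500-sample trace (for example, 3 seconds at a 2 ms sample interval) sustains about 2.3 Gigaflops, while a 10-element vector sustains less than a tenth of peak. Long seismic traces therefore keep the pipelines full.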

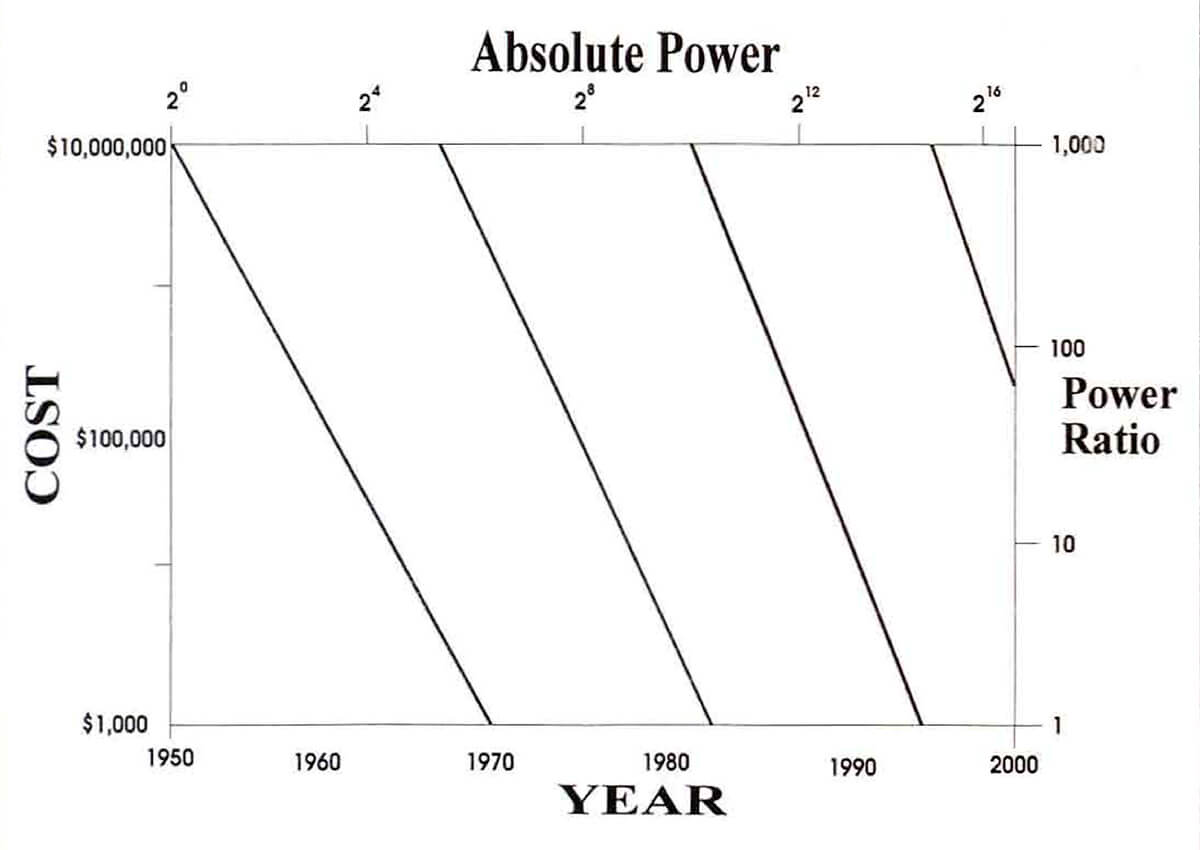

Nevertheless, as the cost/power ratio moves down over time, advanced routines developed on supercomputers can be, and are, adapted to lesser machines, confirming the typical pattern of software/hardware evolution in the geophysical industry. Historically, the price range of computers has remained relatively flat over time, with supercomputers costing about $10 million for power at least 1,000 times that of desktop machines starting at about $1,000. Processor speed has increased geometrically, currently doubling about every 18 months; fifteen years is ten such doublings, and 2¹⁰ = 1,024, or roughly 10³. Thus, over time, a given measure of power slides down the cost scale and, since 10³ is about equal to the power ratio from large to small machines, and 10⁴ the cost ratio, it can be predicted that the supercomputer power of any given era will be available in a desktop machine about 15 years later, at a 10:1 improvement in cost (see diagram).
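The 15-year projection is simple arithmetic on the article’s own figures. A minimal sketch, assuming power grows at a constant 18-month doubling period (an idealization of the trend described above), shows that the roughly thousandfold power gap closes in about ten doublings, or about 15 years, and that the 10⁴ cost ratio is ten times the 10³ power ratio.

```python
import math

DOUBLING_MONTHS = 18.0        # processor speed doubling period quoted in the article
POWER_RATIO = 1e3             # supercomputer vs. desktop power, about 10^3
COST_RATIO = 10e6 / 1e3       # $10 million vs. $1,000, about 10^4


def catch_up_years(power_ratio=POWER_RATIO, doubling_months=DOUBLING_MONTHS):
    """Years for power to grow by power_ratio when it doubles every doubling_months."""
    doublings = math.log2(power_ratio)
    return doublings * doubling_months / 12.0


if __name__ == "__main__":
    print(f"doublings needed: {math.log2(POWER_RATIO):.2f}")             # 9.97
    print(f"years for desktop to catch up: {catch_up_years():.1f}")      # 14.9
    print(f"cost ratio / power ratio: {COST_RATIO / POWER_RATIO:.0f}")   # 10
```

Changing `DOUBLING_MONTHS` shows how sensitive the projection is: a 24-month doubling period would stretch the lag to about 20 years.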

These are the cost/performance figures that drive the trend to downsizing and conversion from mainframes to workstations, yet the facts of the matter are not quite that simple. For many things in life, direct scaling factors simply do not apply. For example, for the cost of a single Boeing 747 airplane, one could buy a whole fleet of fast corporate jets, but the two types are evidently not interchangeable in service.

The HPC computer has perhaps 25 times the power of the fastest workstation available locally, and 10 to 100 times that of commonly available computer systems. Thus, a computing job that would normally take a month to process can be done in a day or so. Many batch processes can be made interactive, allowing the user to “tune” the process by varying critical parameters iteratively to find the best combination for the data set.
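The interactive “tuning” described above amounts to rerunning a process once per trial parameter and keeping the best result. As a minimal illustration, the sketch below scans one classic critical parameter, the stacking (NMO) velocity, over a toy synthetic CMP gather and keeps the velocity whose hyperbola stacks the most energy. The gather geometry, sample interval, trial velocity range and single-spike traces are all hypothetical; production velocity analysis uses semblance over time windows on real data.

```python
import math

DT = 0.004                                  # sample interval, s (hypothetical)
NT = 500                                    # samples per trace, a 2 s record (hypothetical)
OFFSETS = [100.0 * i for i in range(11)]    # source-receiver offsets 0 to 1000 m (hypothetical)


def moveout_sample(t0, offset, velocity):
    """Sample index on the NMO hyperbola t = sqrt(t0^2 + (x / v)^2)."""
    t = math.sqrt(t0 ** 2 + (offset / velocity) ** 2)
    return int(round(t / DT))


def synthetic_gather(t0, velocity):
    """Toy CMP gather: one unit spike per trace lying on a single reflection hyperbola."""
    gather = []
    for x in OFFSETS:
        trace = [0.0] * NT
        trace[moveout_sample(t0, x, velocity)] = 1.0
        gather.append(trace)
    return gather


def stack_amplitude(gather, t0, velocity):
    """Sum samples along the trial hyperbola; largest when the trial velocity is right."""
    total = 0.0
    for x, trace in zip(OFFSETS, gather):
        i = moveout_sample(t0, x, velocity)
        if i < NT:
            total += trace[i]
    return total


def velocity_scan(gather, t0, trial_velocities):
    """Rerun the stack once per trial parameter and keep the best-stacking velocity."""
    return max(trial_velocities, key=lambda v: stack_amplitude(gather, t0, v))


if __name__ == "__main__":
    gather = synthetic_gather(t0=0.5, velocity=2000.0)
    best = velocity_scan(gather, 0.5, range(1500, 3001, 100))
    print(f"best stacking velocity: {best} m/s")
```

Each trial is a full pass over the gather, so the cost of the scan grows with the number of trial values. That is why shortening each pass from hours to seconds is what turns such a scan from a batch job into an interactive one.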

Development of seismic processing has proceeded in waves of technology, each wave characterized by some blockbuster advance, such as migration or 3D imaging, that delivered a new, higher order of resolution and new or better information from the subsurface. Some of our best researchers are currently searching for the next wave, as well as refining long-established routines to make them run faster and cheaper.

Meanwhile, and it bears repeating, today’s mainframe or workstation software is yesterday’s supercomputer software. What was once an astonishingly effective routine worth premium prices and available only from the most advanced developers is today a commodity process, which every processor has (and must have in order to remain competitive).

Thus, there are three major advantages to applying all available computing power to seismic processing:

- Supercomputing is an enabling technology that allows the earliest possible development and introduction of new blockbuster seismic processes.
- Supercomputing, on a shared system with power on demand, frees capital from investment in hardware to permit investment in new software techniques that can provide competitive advantages.
- A dramatic reduction in turnaround time generally reduces costs, through lower overhead and better information obtained sooner. More importantly, it allows the star professional performer to interpret more plays in the available time, and to test more geological models and concepts against the data set. In summary, time is of the essence in any business: reduced turnaround or production time is the single greatest key to winning in today’s competitive market.
