Surprisingly, my first exposure to the basic concepts of Machine Learning came in one of my undergraduate courses, called “Philosophy of Mind”. It was the first time I learned how our brain functions and how it can be biased. The following year, I took a course in General Relativity and found that we need to work in spaces with more than three dimensions to solve complicated mathematical equations. These two moments made me aware that our brain is not capable of processing every type of data, and that it can even be biased or make mistakes when processing the information it receives. At the time, I was motivated to research how this shortcoming could be compensated for. After a few days of research, I found that computer scientists had contributed by creating smart algorithms that help us process information efficiently, and that these algorithms were growing fast.

It used to be that if you wanted to get a computer to do something new, you had to program it. Programming requires laying out, in excruciating detail, every step needed to achieve your goal. But if you want the computer to do something you don’t know how to do yourself, that becomes a great challenge. This was the challenge Arthur Samuel faced in 1956: he wanted a computer to beat him at checkers. He had the computer play against itself thousands of times and learn how to play. It worked, and by 1962 his program had beaten the Connecticut state champion. He is often called the father of Machine Learning. He taught us that what we lack in knowledge, we can make up for in data.

But machine learning is not just a database problem; it is also a part of artificial intelligence. To be intelligent, a system that is in a changing environment should have the ability to learn. If the system can learn and adapt to such changes, the system designer need not foresee and provide solutions for all possible situations.

Machine learning uses the theory of statistics in building mathematical models, because the core task is making inferences from a sample. The role of computer science is twofold. First, in training, we need efficient algorithms to solve the optimization problem, as well as to store and process the massive amounts of data we generally have. Second, once a model is learned, its representation and the algorithmic solution for inference need to be efficient as well. In certain applications, the efficiency of the learning or inference algorithm, namely its space and time complexity, may be as important as its predictive accuracy.
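To illustrate “training as an optimization problem”, here is a minimal sketch assuming the simplest possible model, a straight line y ≈ w·x + b, fitted to a noisy sample by batch gradient descent on the mean squared error. The synthetic data, the learning rate (`lr`) and the number of epochs are illustrative assumptions, not values taken from any article in this issue.

```python
import random

def fit_line(xs, ys, lr=0.05, epochs=2000):
    """Fit y ≈ w*x + b by batch gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Residuals of the current model on the whole training sample
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of MSE = (1/n) * sum(residual**2) w.r.t. w and b
        grad_w = 2.0 * sum(r * x for r, x in zip(residuals, xs)) / n
        grad_b = 2.0 * sum(residuals) / n
        # Take a step downhill on the error surface
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def predict(w, b, x):
    """Inference with the learned model is a single multiply-add."""
    return w * x + b

# Synthetic sample (an assumption for illustration): y = 3x + 1 plus Gaussian noise
random.seed(0)
xs = [i / 10 for i in range(50)]
ys = [3.0 * x + 1.0 + random.gauss(0.0, 0.1) for x in xs]

w, b = fit_line(xs, ys)
print(f"learned w = {w:.2f}, b = {b:.2f}, prediction at x = 2.0: {predict(w, b, 2.0):.2f}")
```

The split mirrors the two roles described above: the loop in `fit_line` is the (relatively expensive) training step, while `predict` is the inference step, which here costs almost nothing once `w` and `b` are known.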

Perhaps the next big success of Machine Learning was Google’s search engine algorithm, PageRank, which showed that it is possible to find information by measuring the importance of web pages. Since then, we have seen many other commercial successes at Amazon, Netflix, and others. A good example is LinkedIn, which suggests people to add to your connections without you having any idea how it picked them. These algorithms learned how to do this from data rather than being explicitly programmed. This is also why we are seeing the first self-driving cars: it is not straightforward to write a program that distinguishes between a pedestrian and a tree, but Machine Learning can do it. The Google self-driving car has driven over a million miles on regular roads without an accident. So we now know that computers can learn, and can do things that we don’t know how to do ourselves.

One of the extraordinary families of algorithms in ML, inspired by how the human brain works, is called deep learning. In principle, there is no limit to what it can do: the more data and the more computation time you give it, the better it gets. One of its most famous results is the comprehension of spoken language. Richard Rashid, speaking at a conference in China, demonstrated a computer that turned his English speech into spoken Chinese. The system recognized his speech, translated the text into Chinese, and then used a text-to-speech model, built from the speech of many Chinese speakers and adapted to Rashid’s own voice, to speak the translation. Since then, a lot has happened. In 2012, Google announced an algorithm that watched YouTube videos and independently learned concepts such as cats! Computers can now listen, read, see, understand, and, as Stanford University showed, even write.
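To make the idea of “measuring the importance of web pages” concrete, here is a minimal sketch of the textbook power-iteration form of PageRank, in which a page is important if important pages link to it. It is not Google’s production system: the four-page link graph is hypothetical, and the damping factor of 0.85 is the value commonly quoted for the original formulation.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Power-iteration PageRank.

    links maps each page to the list of pages it links to.
    Pages with no outgoing links spread their rank evenly over all pages.
    """
    pages = sorted(set(links) | {p for targets in links.values() for p in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start from a uniform distribution
    for _ in range(iterations):
        # Every page first receives its share of the "random jump" probability
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Split this page's rank equally among the pages it links to
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # Dangling page: distribute its rank uniformly over all pages
                for t in pages:
                    new_rank[t] += damping * rank[page] / n
        rank = new_rank
    return rank

# A hypothetical four-page web in which every other page links to "C"
web = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
for page, score in sorted(pagerank(web).items(), key=lambda kv: -kv[1]):
    print(page, round(score, 3))
```

No one told the algorithm which page matters most; the ranking emerges from the link structure alone, with “C” (linked to by every other page) on top and “D” (linked to by no one) at the bottom.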

Putting all of these together can lead to great opportunities in many areas and generate insightful new science. A different group from Stanford announced that they had developed a machine-learning-based system that, when examining body tissue under magnification, performs better than a human pathologist. In this case, the computer even discovered that the cells around the cancer are as important for making a diagnosis as the cancer cells themselves, the opposite of what human pathologists had thought for decades. Here is the question, then: how many such long-held beliefs might we have in geoscience that could be revised using ML algorithms?

The next question that arises is: do we need a combination of domain experts and machine learning experts? At the rate computers are currently improving, this is difficult to answer. Many Machine Learning experts say that we will soon be beyond that. There are many studies in medicine that were run by people with no background in the field, and their results were accurate. All of these studies used Big Data techniques to produce meaningful results. However, there is sometimes a subtle quality-control check that human experts need to perform.

Here is an example. Assume that we have a two-dimensional data set in which the first dimension is the number of car accidents and the second is the number of cars carrying umbrellas. A very simple ML algorithm shows that these two data series correlate with each other. However, the reason they correlate is that the accidents happened on rainy days, which is also why people had umbrellas in their cars. Therefore, if we had provided the computer with a third dimension (in this case, the dates of rainy days), it might have shown us that rain was also correlated with the accidents and is intuitively a better candidate for the cause than the umbrellas. Yet such data are not always accessible.

On the other hand, if we have a very large, unlabeled data set, we can automatically identify areas of structure in the data. Still, we need to work with computers on the areas of interest, where we want the computer to learn by trial and error and improve its algorithm. Once we accept that our brain cannot function in more than three dimensions, deep learning can help us work in a 16,000-dimensional space, rotating through it to find new areas of structure and new clusters. The human who is driving the process can then point out areas of interest manually and gradually give the computer more hints, so that it becomes less confused and, through iterations, finds its own way of thinking.
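The umbrella example above can be reproduced with a small simulation. This is a minimal sketch, not taken from any article in this issue: the numbers (30% rainy days, and accident and umbrella counts drawn from normal distributions whose means depend only on rain) are made-up, hypothetical values. Because rain drives both series, accidents and umbrellas correlate strongly across all days, but the correlation largely disappears once the days are split by the third dimension, rain.

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

random.seed(42)
days = []
for _ in range(1000):
    rainy = random.random() < 0.3
    # Hypothetical rates: rain raises accidents and umbrella counts independently;
    # within a given kind of day, umbrellas have no effect on accidents.
    accidents = random.gauss(20 if rainy else 10, 3)
    umbrellas = random.gauss(400 if rainy else 50, 30)
    days.append((rainy, accidents, umbrellas))

# Two-dimensional view: accidents vs umbrellas only
acc = [d[1] for d in days]
umb = [d[2] for d in days]
print("all days:   r =", round(pearson(acc, umb), 2))

# Adding the third dimension: look at rainy and dry days separately
for label, flag in (("rainy days", True), ("dry days", False)):
    a = [d[1] for d in days if d[0] == flag]
    u = [d[2] for d in days if d[0] == flag]
    print(f"{label}: r =", round(pearson(a, u), 2))
```

Splitting the data by the suspected common cause (stratifying) is the simplest way to “provide the third dimension”; including rain as an explicit variable in a regression would be another.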

There are many talks about how computers can get smarter and how humans should adjust to the new reality; that is not the topic here. What I really want to emphasize is that we need to use these new smart algorithms in geoscience to help the oil and gas industry stay alive. This is a serious revolution that will not settle down, and it will disrupt us if we remain dismissive. The better computers get at intellectual activities, the more they can help build better computers. This changes our previous understanding of what is possible, and machines grow in capability exponentially. Therefore, it’s better to start thinking now about how to adjust the structure of our industry to this new reality.

In this issue, we have gathered detailed examples of great work from geoscientists who are exploring this frontier and practicing computational geoscience with machine learning algorithms. To give more insight into the types and uses of machine learning, here is a summary of what you will read in this issue.

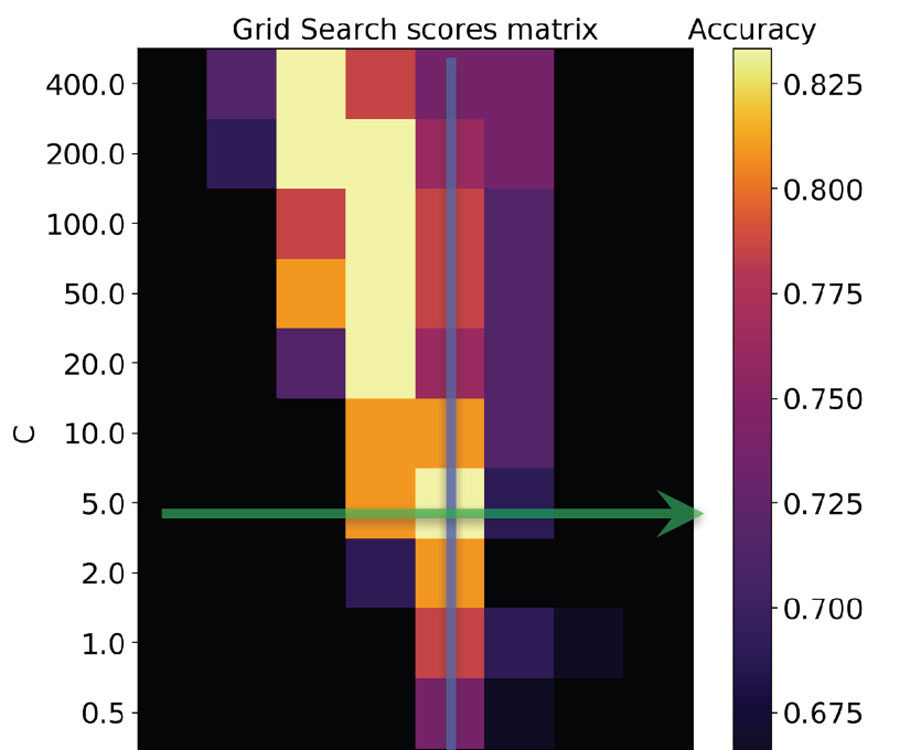

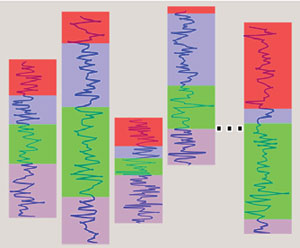

Mark Dahl (A Gentle Introduction to Machine Learning) provides an introductory guide to different ML methods; it is a great article to read first, so that you have the big picture in mind while reading the others. Matteo Niccoli (Machine Learning in Geoscience V: Introduction to Classification with SVMs) provides a fantastic tutorial on classification using the support vector machine (SVM), a simple and effective supervised learning method. From this article, you will gain an understanding of how the algorithm works and how it can be useful in geoscience. Brendon Hall (Geochemical Facies Analysis Using Unsupervised Machine Learning) demonstrates, in his tutorial-style article, dimensionality reduction and clustering of geochemical measurements using unsupervised machine learning methods. Unsupervised learning provides subsurface workers with powerful techniques for interpretation. The next article (Denoising Seismic Records with Image Translation Networks), by Graham Ganssle, is a great example of seismic processing with machine learning. Graham’s image-to-image translation is an application of a deep learning algorithm called the deep convolutional generative adversarial network (DCGAN). This method can not only translate an input image into a corresponding denoised output image, but also learn a loss function, so it could be trained to remove multiples or perform migration. Finally, Fred Hilterman and Leila NAILI DOUAOUDA’s article tells us about a particular feature of the data we have in geophysics: short-term internal multiples.

We encourage you to read their articles, play around with the code they have shared on the CSEG GitHub site, and follow them in this exciting new direction.

Join the Conversation

Interested in starting, or contributing to a conversation about an article or issue of the RECORDER? Join our CSEG LinkedIn Group.
