Duncan Emsley graduated with a BSc from the University of Durham in 1984. He worked for processing contractors for several years before joining Phillips Petroleum in 1992. Continuing in the seismic processing vein, he worked with data from all sectors of the North Sea and northeast Atlantic. The merger that formed ConocoPhillips brought about a move to Aberdeen, Scotland, with assignments in Alaska and Calgary. The last few years have seen a progression into rock physics and seismic attributes and their uses in the interpretation process.

As computer technology has advanced, so has the complexity of our signal processing, noise attenuation, travel-time correction, and migration. So before embarking on any interpretation or analysis project involving seismic data, it is important to assess the quality and thoroughness of the processing flow lying behind the stack volumes, angle stacks, gathers or whatever our starting point is.

At the outset of 3D seismic surveying – and my career – about 25 years ago, computers were small. Migrations would be performed first in the inline direction in small swaths, ordered into crossline ‘miniswaths’, then miniswaths were stuck together, migrated in the crossline direction, then sorted back into the inline direction for post-migration processing. The whole process would take maybe two to three months — and that was just for post-stack migration. As computers evolved so did the migration flow, through partial pre-stack depth migrations in the days of DMO (dip moveout), then to our first full pre-stack time migrations. These days, complex pre-stack time or depth migrations can be run in a few days or hours and we can have migrated gathers available with a whole suite of enhancement tools applied in the time it took to just do the ‘simple’ migration part.

So when starting a new interpretation project, a first question might be: what is the vintage of the dataset or reprocessing that we are using? Anything more than a few years old might well be compromised by a limited processing flow, to say nothing of the acquisition parameters that might have been used. Recorded fold and offset have also advanced hugely with technology.

For simple post-stack migrated datasets, the scope of any interpretation might be limited to picking a set of time horizons. Simple processing flows like this often involve automatic gain control (usually just called AGC), which will remove any true amplitude information from the interpretation — the brightest bright spots might survive but more subtle relationships will be gone. Quantitative interpretation of any kind is risky.
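The effect of AGC on relative amplitudes can be shown with a minimal sketch. It assumes a simple RMS-based AGC with a centred sliding window; the function name `agc`, the window length, and the two-spike trace are illustrative assumptions, not a description of any particular processing system.

```python
import math

def agc(trace, window):
    """Divide each sample by the RMS amplitude in a centred sliding window."""
    half = window // 2
    out = []
    for i in range(len(trace)):
        segment = trace[max(0, i - half): i + half + 1]
        rms = math.sqrt(sum(s * s for s in segment) / len(segment))
        # Leave dead (all-zero) windows at zero instead of dividing by zero.
        out.append(trace[i] / rms if rms > 0.0 else 0.0)
    return out

# Hypothetical trace: two isolated reflections, one ten times stronger.
trace = [0.0] * 500
trace[100] = 1.0
trace[400] = 10.0

balanced = agc(trace, window=51)

ratio_before = abs(trace[400]) / abs(trace[100])        # true amplitude ratio
ratio_after = abs(balanced[400]) / abs(balanced[100])   # ratio after AGC
print(ratio_before, ratio_after)
```

In this idealised case the tenfold amplitude contrast between the two events is flattened to roughly one, which is why amplitude-based interpretation on AGC'd data is risky.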

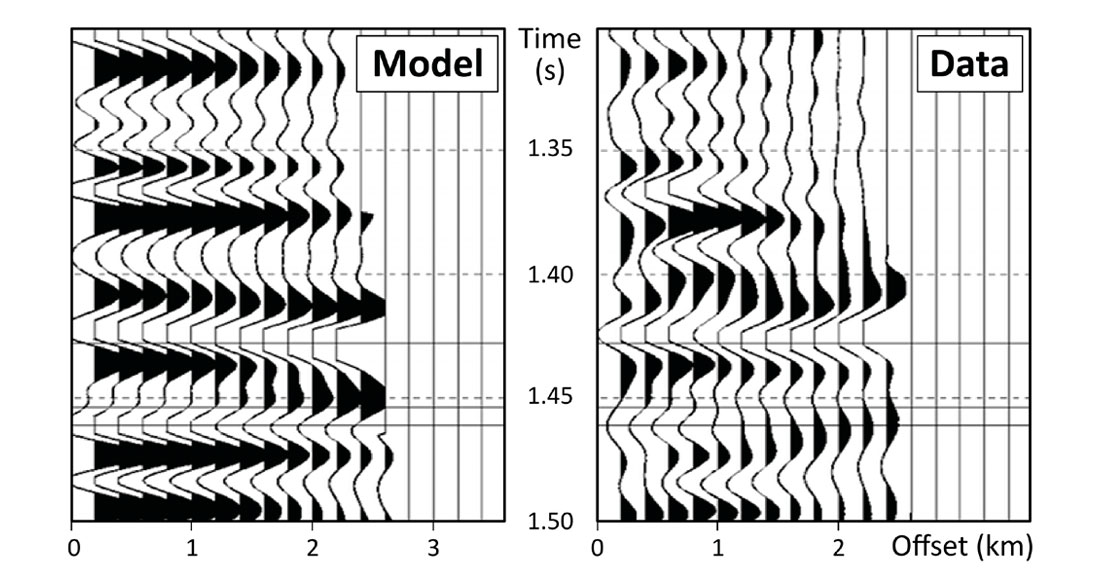

In the pre-stack analysis world, optimally flattened gathers are critical. The flattening of gathers is a highly involved processing flow in itself, often involving several iterations of migration velocity model building, a full pre-stack time migration, and repicking of imaging, focusing, and stacking velocities. What happens in the event of class 1 or 2p AVO? Both involve phase reversal with offset and might require the picking of a semblance minimum in our velocity analysis. Is anisotropy present? How shall we correct for that? If anisotropy is present, we can build a simplified eta field into the migration, but is that too simple? We should scan for eta at the same time as we correct for NMO (normal moveout), but that is tricky. Eta is often picked after the NMO correction, but is that correct? Have we over-flattened our gathers in the NMO process so that we can’t get to the true value of eta (or truly flat gathers)? Do our gathers even want to be flat? AVO effects can play tricks on us, as illustrated by the phase change in the figure above.
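The semblance-minimum point can be sketched numerically. The example below is a simplification: it evaluates conventional semblance on amplitudes picked along a single, perfectly flattened event, with no time window. The offsets and the two linear amplitude trends are hypothetical values chosen only to contrast an event that keeps its polarity with one that reverses it.

```python
def semblance(amplitudes):
    """Conventional semblance of amplitudes picked along one moveout curve."""
    n = len(amplitudes)
    stack_energy = sum(amplitudes) ** 2
    trace_energy = sum(a * a for a in amplitudes)
    return stack_energy / (n * trace_energy)

# Hypothetical geometry: 31 traces from 0 to 3000 m offset.
n_traces = 31
scaled_offset = [i / (n_traces - 1) for i in range(n_traces)]  # 0 .. 1

# Event whose polarity is the same at all offsets (amplitude -1.0 to -1.5).
same_polarity = [-1.0 - 0.5 * x for x in scaled_offset]

# Event whose polarity reverses at mid-offset (amplitude +0.5 to -0.5).
polarity_reversal = [0.5 - 1.0 * x for x in scaled_offset]

s_same = semblance(same_polarity)
s_reversal = semblance(polarity_reversal)
print(round(s_same, 3), s_reversal)
```

Even though both events are perfectly flat, the one with the polarity reversal stacks to almost nothing, so its semblance is close to zero at the correct velocity. A picker chasing semblance maxima would be drawn to a wrong velocity for that event.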

Signal processing, especially noise and multiple attenuation, has as many questions involved with it, if not more. Even our friend deconvolution can damage an AVO response if it is applied trace-by-trace. Multiples are a persistent problem because they are often difficult to remove without damaging our signal, and can sometimes only become apparent way down the analysis or inversion route.

So a knowledge of the processing flow is a vital part of any interpretation exercise. It sets expectations as to what can be achieved. Ideally, the interpreter can get involved in the processing exercise and become familiar with the limitations in the seismic dataset, or some of the pitfalls. Failing that, a detailed processing report might be available which can help to answer some of these questions. If none of this direct or indirect knowledge is to be found, then perhaps reprocessing is the only option.

Q&A

You make an important point in that before beginning interpretation of seismic data, it is important to assess its quality and be aware of the processing flows. Though this seems to be the right thing to do, how much of it is being done in our industry?

I would hope that this is becoming more widespread. Certainly, in my career, the complexity of the processing flow and the desire to preserve amplitude has grown hugely and, with it, the awareness of what we can do with such datasets from a quantitative point of view. With this awareness, it is fairly straightforward to derive an interpretation flow that is limited to what the data should have preserved. When consulted on a new QI project, my first step is to model the expectations and assess the existing data to see what is achievable. I have been with the same company for over 20 years and can only really comment on what I have observed here and through partnerships, but I think that most companies that we deal with are pretty familiar with seismic ‘horses-for-courses’.

Seismic data that was processed a few years ago should be reprocessed with the latest, state-of-the-art processing flows. Please elaborate on the merits of doing so.

Processing flows are evolving all the time with the power of computers and the development of new ideas. Taking migration as an example, 10 or 12 years ago Kirchhoff pre-stack time migration was at the forefront of technology for the majority of processing jobs. Of late, there has been much more of a move to imaging in depth even for relatively simple geology, given the better handling of lateral velocity variations. In the marine case, demultiple and noise attenuation technology is evolving all the time, so an apparently minor change in the processing flow can make a big difference. The appropriateness of reprocessing an old dataset should also include an analysis of the acquisition parameters as no amount of reprocessing can account for a limited offset range or inappropriate azimuth.

You touch upon the flattening of seismic gathers. This is particularly important for AVO analysis or simultaneous impedance inversion. What do you think can be done to ensure this is achieved properly?

I think this depends on the scope of the project being handled. In the exploration setting, where surveys tend to be larger scale, it is often difficult to QC absolutely everything and we may not know when picking problems might occur due to AVO and phase changes. Consistency is the key – in picking and checking – and in 3D we can use the power of sampling to help us spot where these issues might occur. In a development scenario, our surveys tend to be smaller, we have more wells to develop our expectations on a local scale and there is arguably more hanging on our getting it right. So it is worth putting more effort into modelling to see what we expect and QCing our results.

Correction for anisotropy has become important especially in the context of shale resource characterization. This is being done commonly by applying eta correction to the flattened gathers so that the hockey stick effects on reflection events are corrected. Could you elaborate on this?

In the shale resource play, we are often looking for quite subtle signals to tease some beneficial aspect of geology out of our seismic data. These may be velocity variation with azimuth (HTI) for fractures or small amplitude changes that relate to brittleness and fracture potential. To extract these signals requires the gathers to be corrected as well as possible over as much offset as possible, so removing the effect of overlying anisotropy is key. Similarly, for the HTI case, it is difficult to pick azimuthal variations with sufficient accuracy if a strong VTI effect is imprinted on the gathers.

How would you decide whether anisotropy is affecting the reflection events, or whether the residual moveout is due to the inadequacy of the two-term equation used for NMO correction, in which case the data needs a higher-order correction?

Through modelling and observation of the recorded seismic response. The Rock Physics tools we have available allow us to build in anisotropic information (for VTI, at least) and we can model the response with and without this parameter. Comparison with the recorded data should allow some recommendation to be made as to what is necessary. Well ties and, if you are lucky enough to have them, walkaway VSPs should allow us to analyse the need for anisotropy correction too.
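Modelling the response "with and without" anisotropy can be sketched at the level of travel times. The example below compares the two-term hyperbolic NMO equation with the widely used non-hyperbolic moveout approximation of Alkhalifah and Tsvankin for a single effective VTI layer. The values of `t0`, `v_nmo`, `eta` and the offsets are hypothetical; a real study would take them from well and seismic data.

```python
import math

def t_hyperbolic(x, t0, v_nmo):
    """Two-term (hyperbolic) moveout: t^2 = t0^2 + x^2 / v_nmo^2."""
    return math.sqrt(t0 ** 2 + (x / v_nmo) ** 2)

def t_nonhyperbolic(x, t0, v_nmo, eta):
    """Alkhalifah-Tsvankin moveout approximation for effective VTI media."""
    hyperbolic_part = t0 ** 2 + (x / v_nmo) ** 2
    eta_part = (2.0 * eta * x ** 4) / (
        v_nmo ** 2 * (t0 ** 2 * v_nmo ** 2 + (1.0 + 2.0 * eta) * x ** 2)
    )
    return math.sqrt(hyperbolic_part - eta_part)

# Hypothetical event: 2.0 s zero-offset time, 2500 m/s NMO velocity, eta = 0.1.
t0, v_nmo, eta = 2.0, 2500.0, 0.1
offsets = [0.0, 2500.0, 5000.0, 7500.0]  # metres

# Residual (in ms) left on the gather if only the two-term correction is applied.
residual_ms = [
    1000.0 * (t_hyperbolic(x, t0, v_nmo) - t_nonhyperbolic(x, t0, v_nmo, eta))
    for x in offsets
]
for x, r in zip(offsets, residual_ms):
    print(f"offset {x:6.0f} m  residual {r:6.1f} ms")
```

Under these assumptions the residual is zero at zero offset and grows with offset, which is the "hockey stick" seen on hyperbolically corrected gathers. Comparing a modelled curve like this with the recorded far-offset moveout is one way to judge whether an eta term is needed.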

You also allude to the menace of multiples and rightly so. Some kind of multiple attenuation is possible when the moveout between the primaries and multiple events is appreciable. When this difference is small, multiple attenuation may be risky. Could you please elaborate on this?

The problem with multiples tends to occur at the shorter offsets, where the primary and multiple energy tend towards parallel. Demultiple methods require some modelling of the expected multiples and a subtraction to remove them. If the demultiple is too aggressive then the near offset primary can easily be damaged and zero offset amplitude information for AVO or inversion will be incorrect. In the marine world, this can be relatively easily handled by applying an inner trace mute. For land seismic, the near traces are often poorly sampled, which makes the problem harder to observe in the first place and then to remove.
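The near-offset problem can be quantified with a small sketch of differential moveout. It assumes a primary and a multiple that arrive at the same zero-offset time and follow hyperbolic moveout with different velocities. The velocities, time and offsets are hypothetical.

```python
import math

def moveout_time(x, t0, v):
    """Hyperbolic travel time at offset x for zero-offset time t0 and velocity v."""
    return math.sqrt(t0 ** 2 + (x / v) ** 2)

# Hypothetical case: both events at 2.0 s zero-offset time.
t0 = 2.0
v_primary = 3000.0    # m/s
v_multiple = 2200.0   # m/s, slower because the multiple travels in shallower rock

offsets = [200.0, 1000.0, 3000.0]  # metres

# Differential moveout in ms: how far the multiple lags the primary.
diff_ms = [
    1000.0 * (moveout_time(x, t0, v_multiple) - moveout_time(x, t0, v_primary))
    for x in offsets
]
for x, d in zip(offsets, diff_ms):
    print(f"offset {x:6.0f} m  differential moveout {d:6.1f} ms")
```

With these numbers the two events are separated by about 1 ms at 200 m but by well over 100 ms at 3000 m. A moveout-based demultiple has almost nothing to discriminate on at the near traces, which is where the primary is most easily damaged.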

Let us turn to migration. A lot of progress has been made in the types of migration algorithms that can preserve amplitudes in the presence of steep dips. Except for offshore salt structures, what kind of practical migration is commonly being used in our industry?

In my current environment of the North Sea, Kirchhoff migration is still pretty much the workhorse but, these days, in a pre-stack depth migration workflow. This approach has the advantage that it is relatively straightforward (and cheap) to output pre-stack gathers and, hence, angle stacks. Reverse Time Migration (RTM) is often performed as a final full stack option and does give an improved structural image compared to Kirchhoff. It is, however, pretty computer intensive and is not suited to velocity or anisotropic model building. Beam migrations offer the multi-arrival benefits of RTM imaging but should allow a more affordable option for iterative model building.

What in your opinion is the role played by seismic modeling during the processing of the data? Do you think it helps? If yes, please elaborate.

Forward Modelling has an important role to play in the processing of data, subject to two constraints: the model is only as good as the input log suite allows and the seismic will always be noisier than the model. Shear logs are often missing or incomplete and we need these to work with anything other than zero offset, so we have to enter into the modelling with some caution. However, models are often a good guide for offset requirements and mutes, gain correction, expected AVO response and the pitfall of phase change with offset affecting velocity analysis. It can definitely help with assessing what products are required – whether the acquired seismic is up to having offset or angle stacks generated or if a new acquisition is required. Ray trace modelling, although not necessarily the mechanism by which energy propagates, can help in identifying complicated travel paths and helps to visualise the multiple arrivals that might be present in the seismic.
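A minimal forward-modelling sketch shows why shear information matters and how a phase change with offset can be anticipated. It uses the two-term Shuey approximation to the reflection coefficient, R(θ) ≈ R0 + G sin²θ, at a single interface. The layer properties below are hypothetical numbers chosen to give a small positive intercept and a negative gradient; they are not taken from any real log suite.

```python
import math

def shuey_two_term(vp1, vs1, rho1, vp2, vs2, rho2, theta_deg):
    """Two-term Shuey approximation: R(theta) = R0 + G * sin^2(theta)."""
    vp, vs, rho = (vp1 + vp2) / 2.0, (vs1 + vs2) / 2.0, (rho1 + rho2) / 2.0
    dvp, dvs, drho = vp2 - vp1, vs2 - vs1, rho2 - rho1
    # The intercept needs only P-velocity and density.
    r0 = 0.5 * (dvp / vp + drho / rho)
    # The gradient depends on shear velocity, hence the need for shear logs.
    g = 0.5 * dvp / vp - 2.0 * (vs / vp) ** 2 * (drho / rho + 2.0 * dvs / vs)
    return r0 + g * math.sin(math.radians(theta_deg)) ** 2

# Hypothetical upper and lower layers (m/s, m/s, g/cc).
upper = (3000.0, 1500.0, 2.40)
lower = (3100.0, 1800.0, 2.35)

angles = [0, 10, 20, 30]  # degrees
reflectivity = [shuey_two_term(*upper, *lower, a) for a in angles]
for a, r in zip(angles, reflectivity):
    print(f"{a:2d} deg  R = {r:+.4f}")
```

For these assumed properties the reflection coefficient is weakly positive at zero offset and negative by 20 to 30 degrees, the kind of polarity reversal that can mislead velocity picking if it has not been anticipated by modelling.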

On a different note, Duncan, what kind of people make good seismic processors? A simple answer could be that people who fall in love with geoscience are persistent and often do good work. A contrary argument would be that people who have spent umpteen years doing the same kind of processing work lose interest and are not motivated enough to do a good job. What is your take on this?

In a similar way to how good mechanical engineers need to take things apart to see how they work, a good processor should have an interest in how things work in the seismic world – how the seismic might be flawed and how the processing tools work to put that right. If you are the sort of person that thinks velocity analysis is dull then that can be tricky – I have always been fascinated by the repeating patterns of a semblance analysis, how and why they changed from one location to the next and building up a picture of the geology related to that.

It is easy to get a bit stale over time and I have moved on to a more Rock Physics related role. However, the background to the gathers that I am working with and how the processing might have helped or hindered them is key to understanding what you can get out of them.
