Chris Kent is from the UK and graduated from Camborne School of Mines. He has 20 years' experience working in various places in Asia, West Africa, Saudi Arabia, North America, and Europe. He currently works as a geophysicist at KUFPEC in Stavanger, Norway.

With sequence stratigraphic concepts and a modicum of understanding of the regional tectonic history, your 3D seismic cube can begin revealing its secrets. Before we delve into this further, it’s important to remember that by far the most gracious method of understanding what comes out of the data is to look at the world around us. All the depositional environments that have ever existed still exist today; as expounded by Charles Lyell in the early 1830s, the present is the key to the past.

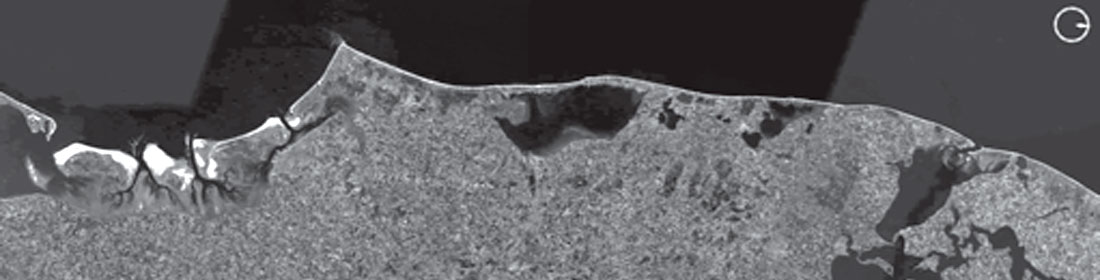

In this sense, imagination is more important than knowledge (a quote from Einstein), and for those geophysicists who do not spend enough time in the real world, perpetually locked in the office, you must venture into Google Earth for a cut-price field trip. If you want to see some fluvio-glacial outwash channels, just go to Alaska. If you want to go see a nearshore bar, the west coast of Denmark is awesome:

Don’t forget nature, don’t forget scale, and keep it real. Nature has given you a cheat sheet to the subsurface exam! With this in mind, you’re now armed with a dangerous skill set. Once you’ve got some sequence boundaries mapped out, you can start the detective work. Thickness variation and seismic truncations, once you have accounted for structural elements, will guide you to deductions about relative sea-level. Push the seismic data to its limits; forget about specific attributes, just run as many as you can, because you may see something interesting, and even the absence of something interesting is information in itself! Give your data an MRI scan: run a spectral decomposition tool, which can unlock hidden information. Target your analysis and try not to cut across too many onsets; you need to be parallel to reflections as much as possible to visualize bedform patterns on palaeoslopes.

Remember to use unfiltered, high-fidelity data where possible, and be realistic when it comes to your stratigraphy. For example, if you have a hard chalk sequence above your target zone, you can kiss some of the high frequency content goodbye. If you can, co-rendering attributes in a volumetric visualization will give a big advantage. Immerse yourself into the geology that shape-shifts the world around us. Whenever you are on a beach, by a river, or flying 10 000 metres above the ground, you can gain increased understanding of the subsurface. It’s so easy to lose sight of what you’re working on in the office and field trips may be infrequent. So, take five, and Google away.

‘Don’t forget nature, don’t forget scale and keep it real’. This is a very important message you convey in your article. Could you please elaborate on this?

The forces acting at or near the surface of the earth operate within physical bounds related to the dynamics of the fluid environment. This specific point pertains to what we see around us every day, from the size/geometry of rivers, to wave ripples, to the height of a sand dune - even mountain heights are restricted by fundamental forces. Olympus Mons on Mars is about 15 miles high because gravity there is roughly 2.6 times weaker than on Earth. So, we have bounds to operate within and statistics from field measurements to help us reduce uncertainty alongside stochastic modelling. Of course, there are some things we cannot directly measure. My thoughts drift to a sand storm I experienced whilst in Saudi Arabia, a mighty cloud of sand/dust many hundreds of metres tall. While the deposit it left was on a cm thickness scale, one could see the effect the topography had on sand accumulation, and I always think of this as an analogy to turbidites (flume tank videos on YouTube are great fun!).

The three well-known principles of stratigraphy are superposition, continuity and original horizontality. In the light of this, could you briefly explain how interpreters perform stratigraphic interpretation on seismic data?

There is the proverbial joke about geophysicists and coloured pencils. Let’s not beat about the bush here: printing out wiggle-skip seismic lines on wallpaper size sheets and sticking them on the wall (or rolling them out on the floor) is something of a lost practice. We have lost something by staring at computer screens! Establishing a chronostratigraphic framework/diagram is a fundamental requirement of any seismic investigation, as is the identification of systems tracts and flooding surfaces. Seismic reflectors approximate ‘time-lines’, and the mapping of reflectors and terminations informs us about depositional successions and their spatial/temporal separation. Deciphering the depositional filling history of a basin begins with the question of scale and aspect ratios of characteristic seismic sequences that share commonality. Abandon the paperless office, fill the ink cartridges and get plotting. The geometry/extent/stacking of reflectors, and most specifically the position/classification of reflector terminations, go towards making stratigraphic associations (driven by eustasy, subsidence, sediment supply, etc.) and therefore genetic sequences. The distribution of those sequences in turn points to the possible existence of play components.

‘Forget about specific attributes, just run as many as you can’. This is in direct contradiction to those who say ‘run appropriate attributes that would address your objective(s)’. For example, for gauging information on lithology from seismic data, run impedance inversion; for fault/fracture determination, run coherence/curvature. Could you please clarify this and support that with examples?

Generating attributes and (even) more data is not a problem these days; the question is having the time to scan through it all. Of course, some attribute techniques may not make mathematical sense or be applicable, and many amplitude attributes are going to tell you the same story, so you do have to be somewhat selective. I do absolutely advocate the workflows you mention above because they statistically deliver our best estimate of the subsurface; however, I feel that many of us fall into routines that can be somewhat restrictive. As an example, I have used phase cubes derived via spectral decomposition as an input into coherency/facies-waveform identification techniques. Phase is a good indicator of lateral changes in reservoir properties and can even be distorted due to the effects of thin beds. Since phase will also pick up discontinuities, it may serve well as an input into a semblance/coherency algorithm.
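As a rough illustration of where a phase cube comes from, the sketch below extracts the amplitude and phase of a single frequency along one trace by convolving it with a Hann-tapered complex sinusoid (a short-time Fourier transform evaluated at one frequency). This is only one of several ways to do spectral decomposition and is not necessarily the method used in the workflow described above; the function name, the 100 ms window, and the synthetic 30 Hz trace are all assumptions made for the example.

```python
import numpy as np

def single_frequency_response(trace, dt, freq_hz, window_s=0.1):
    """Amplitude and phase of one frequency component along a trace.

    Hypothetical helper: convolves the trace with a Hann-tapered complex
    sinusoid, i.e. a short-time Fourier transform at a single frequency.
    """
    n = int(round(window_s / dt)) | 1       # force an odd kernel length
    lag = (np.arange(n) - n // 2) * dt      # kernel time axis centred on zero
    taper = np.hanning(n)
    kernel = taper * np.exp(2j * np.pi * freq_hz * lag)
    kernel *= 2.0 / taper.sum()             # a unit cosine maps to amplitude ~1
    response = np.convolve(trace, kernel, mode="same")
    return np.abs(response), np.angle(response)

# Synthetic trace: a 30 Hz cosine sampled at 2 ms (illustrative values only).
dt = 0.002
t = np.arange(500) * dt
trace = np.cos(2 * np.pi * 30.0 * t)

amp30, phase30 = single_frequency_response(trace, dt, 30.0)
amp60, _ = single_frequency_response(trace, dt, 60.0)
```

Running this trace by trace at a chosen frequency gives a phase volume that could, in principle, be fed into a coherency or waveform classification step. The window length trades vertical localization against frequency resolution, so it is worth testing more than one.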

You mention a very important point in ‘remember to use unfiltered, high-fidelity data where possible, and be realistic when it comes to stratigraphy’. Could you tell our readers, why use unfiltered data when the filtered data can show the reflections nice and clear?

The specific comment you mention above related purely to post-stack filtering techniques that affect the seismic response dramatically (such as AGC) when used as an input to spectral techniques. With respect to fidelity, it is especially important that interpreters understand the limiting effects of scaling/clipping techniques down from 32-bit data. Filtering is ultimately necessary, and it is important to cross-check what effects processing or filtering has had. Band-limited frequency filters are very useful during processing to evaluate changes in the processing sequence. In the pre-stack domain, more and more software/workflows are available to the interpreter to QC/improve gathers, reduce noise, evaluate mutes and help improve AVA response.
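The effect of scaling and clipping down from 32-bit data can be illustrated with a small sketch. It assumes a simple percentile-based clip followed by linear scaling to 8-bit integers, which is a common convention but not necessarily what any particular interpretation package does; the function names, the 99th percentile clip, and the synthetic trace are assumptions for the example.

```python
import numpy as np

def quantize_to_int8(trace, clip_percentile=99.0):
    """Clip at a percentile of absolute amplitude, then scale to 8-bit."""
    clip = float(np.percentile(np.abs(trace), clip_percentile))
    scaled = np.clip(trace / clip, -1.0, 1.0) * 127.0
    return np.round(scaled).astype(np.int8), clip

def restore_from_int8(samples, clip):
    """Map 8-bit samples back to approximate amplitudes."""
    return samples.astype(np.float32) * np.float32(clip / 127.0)

# Synthetic 32-bit trace: background reflectivity plus one strong event.
rng = np.random.default_rng(0)
trace = rng.normal(0.0, 1.0, 2000).astype(np.float32)
trace[1000] = 50.0

samples, clip = quantize_to_int8(trace)
restored = restore_from_int8(samples, clip)

# Fraction of samples whose true amplitude was lost to clipping.
clipped_fraction = float(np.mean(np.abs(trace) > clip))
```

In this sketch the strong event is cut back to the clip level and every amplitude is snapped to one of at most 255 values, so both relative amplitude information and subtle low-amplitude character are degraded before any attribute is computed.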

In many areas when a hard formation overlies another not-so-hard target formation, the frequency content below is reduced. What can possibly be done to aid the stratigraphic interpretation in the target interval?

Ultimately this issue should be addressed during acquisition design. I have recently looked at some 2D data from 2012, which was inferior in quality to data acquired in the same area in the 1990s, and this was due to the nature of the hard seabed and difference in seismic source size. Broadband acquisition and optimal processing for the specific interval should deliver optimal results, but for older vintages, bandwidth enhancement/spectral blueing/whitening techniques may give a potential uplift (especially if good well data is available to typify the section). Simple end-user attempts to derive more stratigraphic information could include: 1st or 2nd amplitude derivative; frequency filtering; use of phase; analysis of wavelet shape; interval statistics. Full Waveform Inversion (FWI) is also becoming popular to increase resolution in conjunction with overburden issues such as shallow channel anomalies, gas clouds and effects from carbonates.
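Of the end-user options listed, the first and second amplitude derivatives are the simplest to try. A minimal sketch, assuming a regularly sampled trace and central finite differences (the function name is hypothetical):

```python
import numpy as np

def amplitude_derivatives(trace, dt):
    """First and second time derivatives of a trace by finite differences."""
    first = np.gradient(trace, dt)    # central differences in the interior
    second = np.gradient(first, dt)   # derivative of the derivative
    return first, second

# Illustrative input: a 10 Hz sine sampled at 1 ms.
dt = 0.001
t = np.arange(1000) * dt
omega = 2 * np.pi * 10.0
trace = np.sin(omega * t)

first, second = amplitude_derivatives(trace, dt)
```

Differentiation scales each frequency component by its angular frequency, so it emphasizes the high end of the existing bandwidth and any noise that lives there; it does not create frequencies that were never recorded. The second derivative also reverses polarity for a sinusoid, so it is often displayed with its sign flipped.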

The other example is when coals overlie sandstone formations. The confounding effects of coals are well known. What, in your view, is the best way of handling the interpretation in such cases?

There’s no substitute for having a reliable inversion model for lithology prediction if you have good well/seismic data to back it up. There are some great books on rock physics and some very easy/intuitive software packages available now to help the end-user understand how changes in lithology (logs) affect the seismic response. Pre-stack AVA analysis methods may be another alternative that may require a little more support. Understanding the nature of the sediments and the environment of deposition (fluvial, marginal marine/estuarine?), in conjunction with spatial seismic attributes should lead to better understanding of coal formation/distribution. Coals are the only true seismic events to flatten on, since we know they are always deposited parallel to palaeoslope. There is always a big picture, so best to start there; focus on thickness maps and try to make a chronostratigraphic framework to figure out depositional trends.

A significant challenge in stratigraphic interpretation is the mapping of thin deep-water turbidite sands, as their thickness and lateral character variation is difficult to perceive due to the limited seismic resolution. One straightforward way out would be enhancing resolution of the data in an amplitude-friendly way. The other option could be using spectral decomposition, but that restricts examining the data between the bounds of the existing bandwidth. How would you approach such a problem? If frequency enhancement has to be adopted, what method would you recommend?

Many of the ‘older’ 3D surveys I look at don’t have the necessary frequency content to drive mapping of thin sands. When you’re in the field or looking at a core, staring at a 1-2 m thick sand lobe, imagining a seismic wavelength as high as a building, reality can be a disappointment. Fortunately for us, the age of broadband acquisition has dawned, and we can get a few extra hertz at both ends. Some acquisition companies now only support broadband acquisition, which is great news for all of us down the chain at the interpretation end. For the older surveys, there are some bandwidth enhancing techniques on the market from most vendors/processing houses, which on the whole can deliver 1.5 to 2.0 octaves of uplift (with low frequency ‘blooms’) if you are fortunate enough to have good logs to tie to. If you are attempting some spectral decomposition in-house first, perhaps try to clean up your data as much as possible with some amplitude preserving de-noising or structural filters using dip/azimuth cubes. While this method can show very good lateral facies distinctions, window length and analysis window are crucial to capture vertical placement uncertainty. Time thickness maps between turbidite intervals can be of great use since they tend to delineate fairways via compaction trends and hence sand content. While we cannot resolve individual sand lobes, stacked lobes can sometimes deliver a helpful interference signature. Bed parallelism/chaos/curvature attributes may also help define facies, especially mass transport complexes. One last point is on re-processing data: due to the advances in algorithms and compute power, significant uplift in seismic quality is possible, even with old data that had inferior original processing. Some of these options are cheap when compared to their potential impact.
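To put numbers on the gap between a 1-2 m sand lobe and a seismic wavelength, the sketch below uses the usual relation of wavelength to velocity and frequency, the quarter-wavelength tuning rule of thumb, and bandwidth expressed in octaves. The velocity, dominant frequency, and band limits are illustrative assumptions, not values from any survey discussed here.

```python
import math

def dominant_wavelength(velocity_m_s, frequency_hz):
    """Wavelength in metres for a given interval velocity and frequency."""
    return velocity_m_s / frequency_hz

def tuning_thickness(velocity_m_s, frequency_hz):
    """Quarter-wavelength rule of thumb for tuning thickness, in metres."""
    return dominant_wavelength(velocity_m_s, frequency_hz) / 4.0

def bandwidth_octaves(f_low_hz, f_high_hz):
    """Bandwidth in octaves between two frequencies."""
    return math.log2(f_high_hz / f_low_hz)

# Assumed values: 3000 m/s interval velocity, 30 Hz dominant frequency.
wavelength = dominant_wavelength(3000.0, 30.0)   # 100 m
tuning = tuning_thickness(3000.0, 30.0)          # 25 m

# Assumed band limits: 8-64 Hz, then extended down to 4 Hz.
octaves_before = bandwidth_octaves(8.0, 64.0)    # 3 octaves
octaves_after = bandwidth_octaves(4.0, 64.0)     # 4 octaves
```

With these assumed values, a 1-2 m lobe sits more than an order of magnitude below tuning thickness. Each halving of the low-frequency limit adds a full octave, which is why a few extra hertz at the low end matter so much.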

Finally, please tell us the most important thing that you learnt or which influenced you in your professional life?

The most important thing I have learnt is to enjoy what you are doing. I once heard a saying that ‘life is too short to drink bad wine’. I think the same is true for work. So, if something isn’t right, then you need to make a change. Life is about perception, and if you remember that you cannot change other people, only yourself, you end up overcoming obstacles and challenges in a much more self-owned manner. I have been very privileged to have travelled, working with so many talented specialists, and in my failed attempts to join their ranks and become a specialist in ‘something’, I inadvertently became a generalist. This has surprisingly turned out to be very fulfilling. The way we communicate also influences our professional life. Has anyone ever gotten that one-liner e-mail from the person in the next office? It’s much easier to take a break and discuss the small stuff over a coffee.
