I’m sure my friends are getting quite sick of hearing about heuristics – ever since I read “Thinking, fast and slow” (Kahneman, 2011) I just can’t shut up about them. I have been aware of heuristics for some time, but until this book came along I viewed them as an interesting but peripheral aspect of human psychology. I now believe that heuristics determine (and explain) certain fundamental aspects of human behaviour.

Daniel Kahneman (Wikimedia Foundation, Inc., 2012) is one half of the duo (the other half is Amos Tversky, now deceased) that essentially created the psychological field of heuristics. Together they laid the psychological foundations of behavioural economics, and in 2002 Kahneman was awarded the Nobel Memorial Prize in Economic Sciences for his work on prospect theory. In essence, prospect theory shows that people make decisions involving known risks based on potential gains and losses estimated using heuristics, rather than on the outcomes that could be derived using mathematics and logic.

The online Merriam-Webster dictionary defines heuristic as, “involving or serving as an aid to learning, discovery, or problem-solving by experimental and especially trial-and-error methods; also: of or relating to exploratory problem-solving techniques that utilize self-educating techniques (as the evaluation of feedback) to improve performance.”

Kahneman’s definition does not include Merriam-Webster’s experimental, trial-and-error, and self-educating components: “The technical definition of heuristic is a simple procedure that helps find adequate, though often imperfect, answers to difficult questions. The word comes from the same root as eureka.” (Kahneman, 2011) The two definitions are not necessarily incongruent. As species evolve, morph and split into other species, beneficial heuristics become embedded in their DNA. This can be viewed as a form of experimental, trial-and-error learning over millions of years that equips any species at any point in time with heuristics that should serve it well. So you can view our human heuristics as evolved rules of thumb that allow us to make quick decisions in all kinds of situations, even tricky ones, based on the successful trial-and-error decisions of our numerous ancestors (and of course the numerous unsuccessful decisions of all the unfortunate individuals who never got the chance to be our ancestors!)

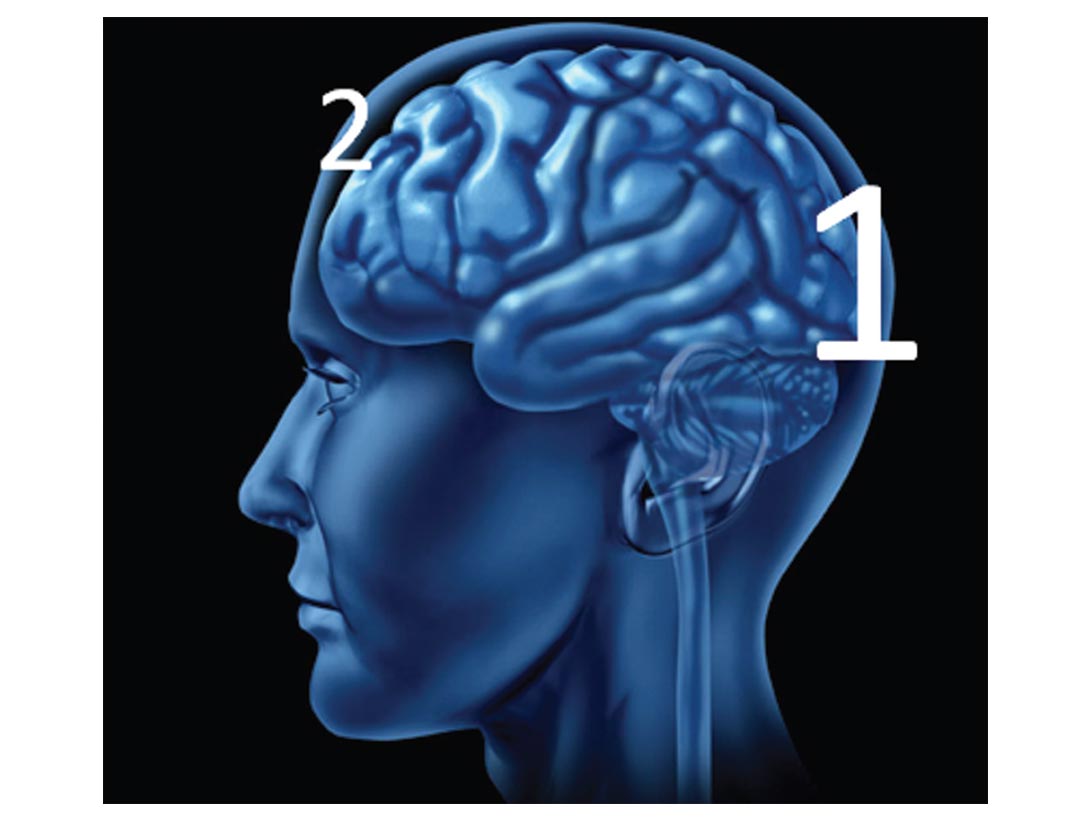

Theories of heuristics rest on a very simple central model of human thought processes. It is a conceptual model rather than a physical one, made up of two thinking systems: System 1 (heuristics) and System 2 (rational, logical thought). System 1 is on the job all the time. It has evolved to give us quick answers to questions for which there is usually not enough information to deduce a logical answer. These are essentially underdetermined problems, which as geophysicists we are all too familiar with. System 2 is slower and takes more effort and energy. Humans naturally prefer the quick and easy answer from System 1 and only reluctantly engage System 2.

Over the last few decades, Kahneman, Tversky and many others have systematically identified and defined many specific heuristics. These are described chapter by chapter, in an interesting and easy-to-understand way, in “Thinking, fast and slow”.

Heuristics are usually illustrated with entertaining examples of how they can lead to wrong or inappropriate decisions. A classic: a bowl of soup and crackers costs $2.20, and the soup on its own costs $2 more than the crackers. How much do the crackers cost? Inevitably people accept the quickly delivered heuristic answer of 20 cents. They trust it, and they are cognitively lazy and loath to engage their System 2. But the correct answer is actually 10 cents. The soup then costs $2.10, which is $2 more than the crackers, and $2.10 + $0.10 = $2.20.
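Writing out the problem shows the small amount of System 2 work needed to get it right. In this sketch, c is the price of the crackers and s is the price of the soup, both in dollars. These symbols are introduced here for illustration only.

```latex
\begin{aligned}
s + c &= 2.20 \\
s &= c + 2.00 \\
(c + 2.00) + c &= 2.20 \;\Rightarrow\; 2c = 0.20 \;\Rightarrow\; c = 0.10, \quad s = 2.10
\end{aligned}
```

Checking the intuitive answer against the same two conditions shows why it fails. If the crackers cost 20 cents, the soup would have to cost $2.20, and the bowl of soup and crackers together would cost $2.40, not $2.20.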

The anchoring heuristic is evident when house shopping. The asking price acts as an anchor, which your heuristic systems use as a starting point. In negotiations you will inevitably be pulled towards the asking price, even if you logically know it is out of whack. The eerie thing is that our heuristics are influenced by the anchoring effects of information not even related to the question at hand! Suppose Ben Bernanke (chairman of the US Federal Reserve) rides the elevator and glances at the displayed number 25 when he reaches his floor. An hour or two later, when he is setting the federal funds rate, he will tend to be pulled towards a rate of 0.25%. It is hard to believe, but anchoring effects have been demonstrated over and over in a broad range of studies. The anchoring effect doesn’t have to be numerical; it could also be emotional. You may be more likely than normal to recommend a risky well location because of the residual positive, optimistic mood left by an enjoyable coffee break with co-workers earlier in the morning.

It is critical to note that System 1 usually gives us the best possible quick decision under the circumstances, and it is remarkably robust and reliable. But today's world is complex and full of situations that demand more System 2 than System 1. Examples include trying to figure out your laptop’s new OS, getting from Prague to Krakow without a GPS, or predicting porosity from seismic. Such situations are certainly more common now than during the eons of hunting and gathering that honed our heuristic systems. Our stock in trade as geophysicists is, simply put, System 2 thinking. We should therefore educate ourselves on how our natural reliance on System 1 heuristics can compromise our System 2 abilities.

Real life is a complex landscape in a cognitive sense, and our little brains are constantly combining several heuristics at once to make sense of it all. Current public debates about the oil and gas industry are perfect examples of this. A person will develop an opinion on whether they are for or against oil sands development, or fracking. These are complex issues, and a truly informed opinion would require in-depth analysis of the many pros and cons. The basic heuristic employed in these situations is substitution: subconsciously replacing the complex question with an easier one. Instead of using System 2 logic to figure out their stance, a person’s heuristics will instantaneously ask, “How do I feel emotionally about ducks dying in a toxic, black tailings pond?” Bingo, easy answer: “Terrible! I am against oil sands development.”

The affect heuristic helps simplify things by relying on pre-formed opinions – likes, dislikes, political allegiances, tribal connections and all the other sources of bias. The affect heuristic exaggerates the pluses related to things aligned with our biases, and minimises the negatives. So a conservative Albertan employed by the oil industry will tend to magnify the benefits of fracking while discounting the costs, even to the point of being unable to absorb factual data on the matter. Conversely, a person in rural Ontario with a love of the outdoors will do the exact opposite – exaggerate the costs of fracking and minimise the benefits.

A third heuristic that comes into play is availability. When information is vague, difficult to understand, or conflicting, the availability heuristic pushes us to rely heavily on more easily available information. In practical terms, that means information that is more recent or has a larger emotional impact. It can also be any information with some other characteristic that makes it stick out in our mental memory banks.

Combine these three heuristics and the result can be what Kahneman calls an “availability cascade”. This explains the current oil sands and fracking debates remarkably well. People go into these issues with pre-formed biases. The affect heuristic causes them to latch onto “agreeable” information and filter out “disagreeable” information. The availability and substitution heuristics lead people to soak up information that has a high emotional impact but may be unrelated to actual costs and benefits (stories of cow miscarriages). They absorb it more readily than information that has a low emotional impact but is highly relevant (job creation statistics, say). The media industry obviously relies on the affect and availability heuristics. No one wants to read dull news, and most people absorb only information that confirms their already formed opinions. The media's pandering to a biased and captive audience acts as a feedback mechanism that stokes the availability heuristic. The more people get worked up over an issue, the more news articles there are. This heightens availability, which works people up even more, and so on. At this point the issue is a runaway train. Attempts by experts to inject System 2 logic into the debate are viewed as evidence of a conspiracy, which further stokes the cascade. The end result is polarised opinions on the issue, with little logical content. Worse, the high-profile nature of the debate forces politicians to weigh in. In some cases they even base policy on highly unreliable public opinions that are the products of System 1 heuristics.

I suspect that this field will become more and more important as neuropsychology pushes back the limits of our understanding of the human brain. I’m particularly interested to see whether the field sheds light on gender differences. Kahneman remains silent on this (probably wisely), but I’m convinced heuristics can explain many of the differences between male and female thought processes. A former co-worker of mine used to say, “Women have different logic chips,” but I think I disagree. I think males and females deploy the same logic, more or less, and it’s in our heuristics that we really differ. In confusing situations (like being lost between Prague and Krakow), men and women react differently at a very instinctive level. It is this difference that we find baffling in the opposite sex.

I hope I have conveyed enough to motivate readers to pursue this topic further on their own. Close to home, fellow CSEG member Matt Hall has written excellent geoscience-focused articles related to heuristics, and I have included a couple of these in the references. There just isn’t enough space here to do justice to the many heuristics and their interesting effects on how we think. But the bottom line is this. As scientists, we pride ourselves on our highly developed (System 2) logic, and we indeed use it to earn a living. It therefore behooves us to understand the other system, System 1, which is at work day and night, not necessarily doing what we think it is!

References

Hall, M. (2010). The rational geoscientist. The Leading Edge 29 (5), May 2010, pp. 596-601.

Hall, M. (2012). Do you know what you think you know? CSEG RECORDER 37 (2), February 2012, pp. 30-34.

Kahneman, D. (2011). Thinking, fast and slow. Doubleday Canada.

Wikimedia Foundation, Inc. (2012, October 14). Daniel Kahneman. Retrieved October 26, 2012, from Wikipedia: http://en.wikipedia.org/wiki/Daniel_Kahneman
