In this issue of the RECORDER we feature answers to a question on a very important topic – that of Integrated Reservoir Characterisation.

The ‘Experts’ answering these questions are Jeffrey M. Yarus (Quantitative Geosciences, Houston), Joel Walls (Rock Solid Images, Houston), Sanjay Srinivasan (University of Texas at Austin), Serge Nicoletis (TOTAL, Paris), Hai-Zui Meng (iReservoir.com, Denver), Paul van Riel (Fugro, Netherlands), and Rainer Tonn (EnCana Corp., Calgary).

We thank them for sending in their responses to our questions. The order of the responses given below is the order in which they were received.

Question

Multidisciplinary integrated reservoir characterization combines geology, geophysics, petrophysics, reservoir/production engineering and numerical simulation (i.e. it uses borehole and seismic data, both static and dynamic) in an attempt to better understand reservoirs and to achieve improved reservoir management and productivity gains. The ultimate goal of such a process is to predict future reservoir flow behavior in order to optimize reservoir development. Occasionally, we do see an article or presentation describing a case study utilizing an integrated workflow and the supporting tools, and leading to a better description of the reservoir. Of course this description comes with its own challenges.

The question that comes to mind is, if integrated reservoir characterization gets us more accurate reservoir descriptions, why is it not being adopted as a routine, so that we can enhance our drilling success? Oil companies don’t seem to be doing this. What are the issues in this regard and how is it possible to overcome them?

Answer 1

There are at least three points that come to mind. I will address them one at a time.

1. Cost

The value of a fine scale 3D reservoir model is not yet fully appreciated by many managers. We have moved from 2D to 3D with great anxiety and trepidation. While the concept of providing a 3D reservoir description is intuitively pleasing, the implementation is non-trivial. It requires time, expertise, expensive software, and full disclosure of our assumptions, methodologies, and poor data quality. The process takes longer than it used to, fostering a feeling of urgency and a need for short cuts. The results are often disappointing and lead to an unfavorable opinion of the technology and its value.

2. Software

Companies have been burned by uncontrolled purchases of expensive software. Steep learning curves deter its use and result in much of it sitting on shelves. Even when software is available, software “experts” are in short supply, often causing delays. Further, the software has limits and may not provide all the best solutions. This requires workarounds, which can breed frustration.

3. Education

Education in the underlying technology is critical. We have become a sophisticated class of button pushers, but few of us understand the underlying technology. Commercial software products use complicated algorithms, and they are not all equal. Yet, they are often applied based on criteria of availability, speed and ease of use. I have found from teaching hundreds of professionals that the underlying principles are poorly understood. Thus, misapplication through lack of education can yield poor results.

Good modeling is neither fast nor cheap. Sophisticated 3D modeling technology has proven successful in many cases. However, it is unlikely to become the rule until professionals understand what is behind the buttons they push and managers accept proper time frames for its implementation.

Jeffrey M. Yarus

Quantitative Geosciences, Houston

Answer 2

The use of geophysical, petrophysical, engineering, and production data in a truly integrated analysis is a complex and labor intensive process. Our industry has yet to develop the tools (software, workflows, and measures-of-success) that will move integrated reservoir characterization (IRC) into the E&P mainstream. However, considerable progress has been made in this technology over the last several years. Compared to other recent technologies that have become accepted as standard practice in oil exploration and production, IRC is moving ahead about as expected, considering the current energy industry mindset of minimal investment in new R&D (more on that later).

Economics dictate that new technologies are first applied to the highest value fields, and those are controlled by the largest companies. IRC results from these large fields are seldom made public. Fortunately, there are a few technology-oriented small to mid-size oil companies who are jumping into the fray. They are being aided by specialized service companies, software developers, and a small group of university researchers. These new players are moving up the learning curve quickly. By presenting and publishing their work, they will soon create the critical mass of technical expertise and tools to make the process almost as universal as the 3D seismic data on which it relies.

What are the essential compounds in the complex chemistry of IRC?

- High-quality input data

- Fit-for-purpose data processing

- Rock physics modeling

- Seismic data “inversion”

- Static earth modeling

- Dynamic reservoir modeling

- Iteration, refinement, testing

- Prediction and confidence estimation

Most larger oil companies are now organized into multi-disciplinary teams, which are essential to IRC. Of course putting geologists, geophysicists, and engineers together in one room doesn’t mean that they can work together effectively. They must be given the correct tools for the job. There is no single IRC software application and if there were, very few people would know how to use it. Instead, successful IRC teams use best-in-class software suites from multiple vendors. Some oil companies have even developed key elements of their IRC software internally.

Once assembled, equipped, and trained, the IRC team must be given one last important element: time. It takes more effort to coax reservoir properties out of 3D seismic than it does to pick faults and horizons. Because staff is always finite (and overworked), many oil companies carefully screen and certify outsource options for some portions of the job such as rock properties modeling, well log and seismic data conditioning, seismic inversion, and reservoir simulation.

What will be needed to make IRC an essential element in a majority of field development projects? Here are a few suggestions.

Quality Data

The most efficient E&P companies have a great appreciation for the value of good data. Successful wells yield hydrocarbons and information. However, when wells fail to produce, many companies also fail to get the information that is crucial to IRC. A dry hole is a terrible thing to waste. Well budgets should include sonic, density, gamma, neutron, and resistivity logs over an extensive depth range. Dipole shear logs, array resistivity logs, check shots and VSPs should be obtained on exploration and appraisal wells.

Drilling programs should be designed to produce smooth boreholes all along the entire well path, and extend 50+ m below the bottom of the pay zone. Sidewall cores should be obtained routinely, and whole cores should be taken in key wells. Seismic data should be acquired and processed with IRC in mind. This means small bin spacing, high fold, long offsets, and fine time sampling. The processing sequence should preserve amplitude and phase at all offsets. Processing designed to “boost” high frequencies and improve apparent image resolution may destroy valuable information that could be used to understand reservoir properties.

Once high quality data is obtained, don’t lose it. Many companies have no centralized data storage. Instead, each business unit or work group keeps bits and pieces of data in project folders that are seldom archived, easily lost, and not available to others when needed. Each well that is drilled produces an avalanche of data: drilling logs, mud logs, fluid analysis, core analysis, core descriptions, several logging runs, log headers, check shots and VSPs. This data should be organized, archived, and made easily accessible.

Quality Data Processing

Seismic data is gated, muted, migrated, stacked, deconvolved, and inverted to name a few of the more common operations. Often the intermediate results are not available to the end user. Oil companies can have a major impact on the progress of IRC just by requiring data vendors to document each process and make available the results of each major step in the processing flow. In addition, all processing steps should be designed to preserve pre-stack amplitude and phase over a wide frequency range.

Speed and Robustness

Service companies and oil companies need to work together to make the process of IRC fast, cost effective, and repeatable. This is the single most important issue for our industry to address. It does little good for a team of professionals to refine a field development project if it takes six months, but the drilling plan has to be complete in two. A corollary to long turnaround time is high cost. IRC will never become mainstream if most projects have a negative net present value!

Most super-major oil companies (at least based on the limited information they reveal in public) have developed objective measures of risk and compiled data on outcomes with and without IRC. These companies have decided the benefits outweigh the costs. Unfortunately, this is less recognized outside the top echelon of the E&P universe.

Research and Development

The last essential element is for oil companies to encourage and support research in this field. This support should not hinge on quarterly earnings, unless of course the company only plans to be around for a few quarters. Severe cuts in energy research spending in recent years have been well documented and frequently bemoaned. The Wall Street Journal (Aug. 17, 2004) cites the case of ExxonMobil where the total R&D budget of US$600 million is down 18% over the last 6 years. This figure represents 0.3% of ExxonMobil’s 2003 revenue, and they are among the industry’s research leaders. In fact, some would argue that ExxonMobil is the only US oil company still doing E&P research.

By comparison, all-industry R&D spending in Canada in 2002 averaged 4.6% of revenue according to Research Infosource Inc. (July 2003). USA Today reported in Oct. 2001 that “public and private spending on all energy research (US) peaked at more than $12 billion in 1980 and has fallen to less than $4 billion (in 2000).” By contrast, Ford, GM, Lucent, Motorola, Pfizer, IBM, Microsoft, Intel, and Cisco each spend more annually on R&D! Not that there is much the average geoscientist can do about this trend, but we should continue to remind the captains of our industry that if every company wants to be a “fast follower” then no one will be leading.

The support needed by researchers in the field is not just cash, it’s data also. Much independent research is stifled because of tight restrictions placed on publication of images of seismic and well log data. The geoscience data vendors rightfully must protect their investment in these assets, but the entire industry would benefit if a few modern 3D seismic data sets (and associated well logs) were in the public domain.

In spite of the downward spiral of energy R&D funding in the US, there are encouraging signs that our industry is moving ahead in IRC at an ever-increasing pace. If the presentations and publications of the geoscience journals and societies are any indication, interest is very high, even if utilization isn’t. For example, the August issue of The Leading Edge contains a special section on Reservoir Modeling with six papers covering various aspects of integrating core, log, and seismic data. The number of technical sessions on this topic at annual geophysical conferences has increased markedly over the last 5 years. Perhaps more encouraging is the number of new technical workshops and symposia being planned by the Society of Petroleum Engineers that specifically include seismic methodologies in reservoir management. While the pace of progress could be and probably should be faster, it is moving forward, and with oil and gas prices at all-time highs, we can at least hope for better things to come.

Joel Walls

Rock Solid Images, Houston

Answer 3

Tools and methods for data integration in reservoir models have become more sophisticated and robust over the years, and have attempted to keep pace with the advancements in measurement technologies. Reservoir models that realistically represent geological heterogeneity and take into consideration data of different types can now be constructed. Sophisticated geostatistical algorithms that take into account differences in scale and precision of available data, and can be made to conform to prior geological information, have been developed. Other approaches, such as those using calibration methods like neural networks, have also become quite popular. Routine reservoir engineering tasks such as history matching are now performed taking care that the prior geological model is preserved. Nevertheless, given the perpetual urgency of reservoir development projects and the demand for increased profitability of reservoir production activities, significant hurdles still persist that prevent widespread use of integrated reservoir modeling techniques. Some of these unresolved issues are identified below:

- Dealing with uncertainty implicit in integrated reservoir models: Reservoirs are complex entities exhibiting variability at a variety of scales. Data available to assess this spatial variability is sparse and so spatial interpolation of the available data is necessary. This implies uncertainty in the reservoir models. Implementing decisions on the basis of integrated reservoir models requires complementary techniques for decision making in the presence of risk and uncertainty. Evolution of robust methods for incorporating risk and uncertainty in reservoir management is slowly picking up steam with the development of tools that go beyond integrated reservoir modeling and into decision making that integrates uncertainty.

- Improved understanding of physical processes affecting measurements: As mentioned previously, reservoir geology exhibits variability at a variety of scales, and that affects the responses of tools and measurement devices. Understanding the scale and precision of available data is a key issue and requires a deep understanding of the physical processes in the reservoir. Data integration methods have to robustly account for these differences in scale and precision of different data. Predictions of well and reservoir performance made on the basis of models that do not rigorously take into account these data-related issues can be erroneous. On many occasions, bad predictions based on erroneous (or badly conditioned) reservoir models have resulted in disillusionment with integrated reservoir modeling methods and a shift away from such methods.

- Seamless integration of data from all sources: Geology, geophysics, petrophysics, and reservoir/production engineering data each provide a glimpse of subsurface heterogeneity that influences the flow of fluids. The trend has been for each of these disciplines to assume pre-eminence over the others when it comes to developing the final reservoir model. Over-constraining the reservoir to any one piece of information invariably results in models that have poor predictive capability and conform poorly to data from other sources. Seamless integration of data requires developing a thorough understanding of the interrelationship between data from different sources and how they relate to the underlying reservoir heterogeneity. Modeling techniques have to be developed that will facilitate integration of data while performing internal calibration to assess the value of each piece of information. Too often, simplicity of data integration approaches is an overriding criterion, resulting in implementation of simple but not robust hypotheses such as conditional independence between data, full independence of data, etc.

- Better interactive software tools for data integration: With advancements in computation technology and visualization tools, the science and technology of integrated reservoir modeling has come far. Immersive visualization technology allows users to interactively explore large data sets and target zones and critical reservoir heterogeneity. Nevertheless, software improvements to permit data integration and visualization with additional data are necessary in order for integrated reservoir modeling to be accepted as the norm. Major oil companies have invested significant sums of money in developing sophisticated software, but extending that capability to smaller independents will further the use of reservoir modeling technology.
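As an illustration of the conditional independence hypothesis mentioned under seamless data integration, here is a minimal Python sketch that is not part of the original answer. It combines the probability of an event A (say, sand at a given location) given two data sources B and C, assuming B and C are conditionally independent given A and given not-A. Under that assumption Bayes' rule gives odds(A|B,C) = odds(A|B) × odds(A|C) / odds(A). The function names and all numbers are hypothetical.

```python
def odds(p):
    """Convert a probability (strictly between 0 and 1) to odds."""
    return p / (1.0 - p)


def combine_conditional_independence(prior, p_given_b, p_given_c):
    """Return P(A | B, C), assuming B and C are conditionally
    independent given A and given not-A (an assumption, not a fact
    about any real data set)."""
    combined_odds = odds(p_given_b) * odds(p_given_c) / odds(prior)
    return combined_odds / (1.0 + combined_odds)


# Hypothetical numbers: prior sand proportion 0.3; seismic alone and
# well logs alone each raise the probability of sand to 0.6.
prior = 0.3
p_seismic = 0.6
p_logs = 0.6

p_both = combine_conditional_independence(prior, p_seismic, p_logs)
print(f"P(sand | seismic, logs) = {p_both:.2f}")
```

With these invented inputs the combined probability comes out at about 0.84. If the two sources were in fact largely redundant, the appropriate answer would stay close to 0.6, which is one way to see why the hypothesis is simple but not robust.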

Sanjay Srinivasan

University of Texas at Austin

Answer 4

The scientific journals frequently echo with very successful integrations of the various geoscience tools into a fully detailed reservoir description. But only a fraction of practical projects seem to be handled that way. The fact is that the integration of the different techniques, Seismic in particular, is highly dependent on several factors that combine time, budget, management and technical issues and that determine the practical way of handling a reservoir model:

Data quality and relevance: Seismic information does not always provide the pertinent information which is really needed in the field description. This can come from the inherent nature of the survey or from the dynamic nature of the main uncertainties. For instance, land acquisition will have a lower resolution than marine surveys for the same depth and geological setting, streamer surveys will not deliver azimuthal information, seismic inversion can hardly inform on absolute or relative permeability, and so on. Unfortunately, some exploration problems are better posed than others, and the geophysicist must adapt and optimize his contribution, which rules out a single, standard, very time-consuming workflow. Even when Seismic provides very important support to the reservoir characterization, it is still tricky to introduce this band-limited, time-scaled and relative information into the geomodel, either in a deterministic manner or as an external constraint on the geostatistical realizations.

Time frame: An idea widespread among non-geophysicists is that a 3D survey is acquired and processed only once. Unfortunately, when it appears that a lot more could be derived from Seismic, a very long process has to be carried out, which may include up to two years of additional work (reprocessing or possibly new acquisition and interpretation). It is very often a timing consideration which impedes the practical integration of high quality seismic information, beyond the classical structural information, into the geological model. The same problem arises with the updating of a model, which could imply another full cycle (6 to 9 months) of seismic work. The only remedy to these constraints is to strongly anticipate the use of seismic information at each step of the project: detailed pre-acquisition optimization of the seismic layout with regard to the targets of the project, acquisition of P and S sonics, elaboration of a petroelastic model, early decision on reprocessing, and so on.

Cost and qualification: This type of study generally requires a team with a high level of technical skills and experience. It also requires a solid group of qualified specialists to refer to during the process, who can consolidate the know-how, develop new technologies in reservoir characterization and geomodelling and utilize field analogs. Finally, it is very difficult to subcontract these types of study, which are only fruitful when a high degree of integration between disciplines is achieved.

Management and leadership: The last obstacle could come from limited practice of integration among geoscientists and a limited perception of the full range of information which can be brought by seismic. When a project has reached the step of reservoir modelling, production profiles and field development plan, its management is frequently controlled by a reservoir engineer or a planning project leader. The role of the geophysicist is to promote the use of geophysics at all steps of the project. This can be facilitated by training the decision makers on the useful tools and their technical scheduling, by a proper organization of the company that breaks the walls between disciplines, and also by a better understanding by the geophysicist of what is at stake.

The practical experience we have developed internally at Total strongly supports a close integration of Geophysics in all steps of the reservoir characterization. This is particularly true in deep sea projects, where each well is very expensive (Girassol in Angola or Akpo in Nigeria). But in fact there are few projects which cannot benefit at all from useful geophysical information when properly set.

Serge Nicoletis

TOTAL, Paris

Answer 5

From our perspective the process of integrated 3D characterization is being widely applied and we do see the technology gradually being accepted by the smaller independents. But we do admit that the industry is a long way from receiving maximum value from the technology. The cost benefits of the technology are illustrated by 1) the significant technical literature illustrating the benefits, 2) the willingness of the large and national oil companies to assemble staffs focusing purely on improved reservoir characterization, and 3) the success of integrated geomodeling software like Gocad (Earth Decision Sciences), Petrel (Schlumberger), RMS (Roxar), and others.

Despite all the apparent benefits of the technology, the question remains why is it not being more widely and routinely applied? We see a number of significant issues. First and foremost, the problem is that integrated 3D characterization entails far more than software selection and software mastery. There is no “push button” answer. Consider the tremendous excitement seen when numerical simulators became mainstream in the early 1980s. However, anyone trying to apply them to real problems realized that the results were highly dependent both on the starting geologic model and on the numerous simplifying assumptions in the equations. In other words, the adage “garbage in” equals “garbage out” was widely experienced. Unfortunately, reservoir simulators could never provide a unique answer; they could only show which models were not possible. This limitation led to wide interest in better characterization methodologies. If we could build better starting models, then the fluid flow simulations should provide more unique and believable forecasts. But herein lies the problem: How do we build a more accurate model accounting for the many different measurement scales, while also addressing the uncertainty that results from a sparse data set?

Reservoir models were originally built at the flow simulation scale; consequently, history matching the simulator was one and the same as refining the geomodel. Despite the fact that most static 3D geomodels are now built at a very high resolution scale (multimillions of cells) and then up-scaled for simulation, history matching efforts are still typically applied at the simulator scale alone. This tends to be most expedient, but does not always translate to improving the geologic understanding of the reservoir. A multimillion cell static geomodel, literally “gleaming” by means of modern visualization, does not necessarily translate to an accurate reservoir description until it is well calibrated with all available dynamic data. But it is important to remember that both the geomodel product and achieving a history match through simulation are not the whole story; rather, the goal should be to combine information at a number of scales using a multidisciplinary approach in order to understand reservoir flow architecture for the purpose of optimizing future development.

The very process of iterative 3D model-building and simulation engenders multi-disciplinary data integration to a far greater degree than traditional reservoir characterization and can result in greatly improved reservoir understanding. In theory, integrated geomodeling and simulation are the ultimate, most rigorous way for static and dynamic reservoir data to be integrated; yet geomodels are typically up-scaled and history matched way down a “linear workflow” path by simulation engineers who may have limited cognizance of the data assumptions and limitations (and sometimes even of the staff involved…) used for the basic reservoir characterization and geomodeling. To achieve optimal integration, we advocate somewhat more intensive “non-linear” workflows in which early history matching efforts iterate back to static geomodel reconstruction and refinement as opposed to simply performing “fixes” within the simulation scale model. This workflow is not only “parallel” (multiple disciplines working simultaneously), but “non-linear” (connoting the iteration back to static model based on dynamic model insights).

Of course, one major issue is the lack of manpower skilled in interdisciplinary workflow. Within major oil companies high-level teams have been assembled to ensure 'integrated views' of the company's high-profile fields. These teams have invested man-years in developing their technical skills. In today's fast-paced world calling for on-demand technical solutions, insufficient time is allocated for new team members to learn sufficient cross-disciplinary technical skills. One can learn the terminology in a few weeks, the integration programs in a few months, but the integration experience level will probably take several years. Many companies do not believe they can afford this in-house training investment in modern workflows.

Let us look at a few specific thoughts that might inhibit a smaller company from applying the technology and discuss how these issues might be addressed.

“We have never used 3D reservoir characterization before, yet we still make money.”

Some companies unknowingly accept a lower ROR and higher 'project risk' vs. recognizing the “value-added” from additional integration. With properly integrated studies, the ROR could have been better. Companies should do a “look-back” on recent projects and evaluate how an integrated characterization approach could have highlighted potential project pitfalls before they occurred.

“We only have simple problems.”

All reservoir management problems are conceptually simple: 1) improve oil and gas rate, 2) maximize recovery, and 3) reduce expense. However, non-integrated decisions can have significant future costs and unforeseen consequences. Integrated 3D reservoir characterization can minimize the capital expenditure and the associated CAPEX risk.

“The 3D geomodels are for new-deep-offshore fields. We only have old fields with very limited data.”

Old fields present significant data challenges, true, but they also have a wealth of historical performance data that can help constrain integrated models. Data challenges from old fields include: log quality, incomplete production data (esp. for gas and water phases), multiwell allocation issues, limited PVT data, limited or lost core and core analyses, no seismic coverage, etc. The integrated modeling process highlights these limitations, but even without modeling these data issues can negatively impact decision making. Commonly, a well conceived data acquisition program involving just a few infill wells, 3D seismic, and/or cased hole logs can go a long way towards improved model constraints and better business decisions.

“Even if we had a high-tech 3D integrated model, our management wants to see drill-here maps.”

Working in a 3D perspective can provide many new insights, but of course 2D maps are easy to generate from integrated 3D geomodels and simulation models. Such maps have the advantage of incorporating several different disciplines' work and data in one model.

“How predictive is this model? Will it tell me where to drill?”

Models are NOT unique. There will be uncertainty because we do not have perfect data sets. The modeling process quantifies uncertainty and reduces risk by integrating all of the available data.

“We have not seen the economic advantage 'proved'. What good is all that extra stuff?” (OR, “We tried it and it did not work.”)

The literature is loaded with good integrated reservoir characterization examples for geophysics, geology and engineering. Stay focused on fit-for-purpose integrated models, and do not try to use them to answer questions that the geomodel was not designed for. For example, a 1970s 2D seismic survey acquired for deep 15,000 ft targets is not the best data set to use as a constraint for a shallower 5000 ft waterflood expansion into unswept compartments. A properly acquired 3D survey could be justified for the expansion.

“Those reservoir characterization packages/experts are all about 'technical details'; what we need are real-world results.”

It is important both to frame your specific reservoir questions in business terms and to make the entire team vested in the integration process. However, if you ask the team to create an integrated geomodel to answer only your initial primary depletion questions, then be prepared to update or rebuild the geomodel with additional information 2 years later when you need a quick answer concerning how many gas/water injectors, where, and by when.

There are many recurrent issues such as: “How much data do we need?”; “How do we build the correct geologic framework?”; “Are the logs properly normalized and interpreted?”; “Can we integrate seismic response?”; “How do we condition to dynamic performance?”; and “How do we do all this in a timely and cost-effective manner in order to improve business decisions?” Recent geologic modeling software has made the process much easier (resulting in claims such as “build a model in a day” or even “free 3D models”), yet the integration process is still not straightforward, cannot remove all the uncertainty, and of course remains subject to the “garbage in” problem. All these issues continue to contribute to a healthy level of skepticism from end-users of model predictions. If a 3D model is uncertain, does it provide improved decision-making compared to more conventional simplified models (e.g., 2D maps)? Our answer is an emphatic “yes” because we have repeatedly seen the successful application of 3D characterization when properly focused on business questions framed at the outset of the geomodeling/simulation workflow. In other words, always keep in mind that the purpose is to convert the models into dollars.

Hai-Zui Meng

iReservoir.com, Denver

(*The staff at iReservoir.com, Inc. collectively prepared this response.)

Answer 6

Integrated reservoir characterization is the subject I have been involved with throughout my academic and professional career. I can therefore look at the first question posed, ‘Why is it not being adopted as routine?’, with some historical perspective. I actually think it is being adopted as routine, a point I will come back to. An alternate question which I would like to pose, and which conveniently links in to the issues involved, is: ‘Why is the level of integration routinely achieved so low in comparison with what is technically achievable?’

Let’s first visit the issue of how we have fared. For this we only need to think of the simulation models being run 20 years ago and those being run today. There is no doubt there has been a quantum leap in simulation model complexity. Today’s routinely used models may still be simple, but certainly resemble the geologic models they have been derived from. They can handle multiple faults and cell density is such that a fair level of heterogeneity is captured. This means that we now have the workflows and technology that enable us to routinely get some level of interpretation and well log information to end up in reservoir simulation models.

What is technically feasible today is a completely different story. It is currently feasible to build extremely detailed geologic models:

- That incorporate a very detailed structural framework built up with dozens or more faults and horizons;

- That are populated with reservoir properties at very fine vertical and lateral detail that honor all available well control and match available seismic AVO amplitude data;

- That consist of multiple realizations to capture reservoir property heterogeneity and structural uncertainty;

- A subset of which is selected through upscaling and simulation to match production data;

- This subset being regularly updated as new data becomes available.

Such a suite of models at fine scale that honor all available reservoir geoscience and production data represents the ultimate that we can achieve today. (For good order, it is more logical to include reservoir simulation and history matching directly with the generation of the fine scale geologic models that honor well and seismic data, but this is not yet a practical option.) In the ideal world, the suite of detailed models generated according to the procedure above is then used as the basis for field development and / or production enhancement.
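The idea of generating many realizations and retaining only the subset that matches production data can be sketched in a few lines. This is a minimal illustration, not a description of any commercial workflow: `toy_simulator` is a hypothetical stand-in for upscaling plus flow simulation, each realization is reduced to an assumed (porosity, permeability) pair, and the "observed" production is synthetic.

```python
import random

def toy_simulator(porosity, perm_md, n_months=12):
    """Hypothetical stand-in for upscaling plus flow simulation:
    returns a monthly rate profile with a constant fractional decline."""
    initial_rate = 10.0 * perm_md      # assumption: rate scales with permeability
    decline = 0.02 / porosity          # assumption: lower porosity depletes faster
    return [initial_rate * (1.0 - decline) ** m for m in range(n_months)]

def mismatch(simulated, observed):
    """Root-mean-square difference between two rate profiles."""
    n = len(observed)
    return (sum((s - o) ** 2 for s, o in zip(simulated, observed)) / n) ** 0.5

def screen_realizations(realizations, observed, tolerance):
    """Keep only the realizations whose simulated response matches production."""
    return [r for r in realizations
            if mismatch(toy_simulator(*r), observed) <= tolerance]

rng = random.Random(42)
# Each realization is an (average porosity, average permeability in mD) pair.
realizations = [(rng.uniform(0.10, 0.30), rng.uniform(50.0, 150.0))
                for _ in range(200)]
# Synthetic "observed" production generated from a known reference case.
observed = toy_simulator(0.20, 100.0)
accepted = screen_realizations(realizations, observed, tolerance=50.0)
print(f"{len(accepted)} of {len(realizations)} realizations retained")
```

In a real study each call to the simulator is an expensive run on a full 3D grid, which is one reason the screening step dominates the time cycle of such projects.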

The investment in cost and effort involved in generating such an all-encompassing suite of detailed reservoir models is large, ranging from several hundred thousand to millions of dollars, depending on the volume of available data, the need for conditioning and reprocessing of input data, and the required areal and vertical extent and detail of the models. Such investments must be justified against the value created by savings on the number of wells needed for a field and by higher production per well. In addition, the time cycle involved in such a full study is significant, as are the requirements for expert staff. In practice today, ‘full’ reservoir modeling studies as described are certainly carried out but, for reasons of cost, timing and available expertise, they are limited to high risk / high cost projects such as deep water field development and production enhancement.

As I have already touched upon, the last 20 years have certainly seen positive development on the integration front, in the sense that more geoscience data ends up in reservoir simulation models. However, we are far away from routinely working with the type of detailed, fully integrated geoscience model data sets that can be generated. The drive to integrate continues to be strong, with the goal of making routine and cost-effective what is technically feasible. Unfortunately, this is where progress is disappointingly slow. However, few of us fully recognize how difficult it all is and how much hard work it takes. Let me raise a few issues facing us today:

- Obtaining consistency between wireline, lab, rock physics and core measurements, data and models;

- Getting (AVO) seismic data to a quality level that seismic amplitudes can be reliably used to extract information on the areal distribution of reservoir parameters;

- Moving data from seismic grids to reservoir model grids and back with minimal distortion;

- Having the integrated IT and application tools to efficiently manage and use all these different types of data and the many versions that are generated in the course of a project;

- Having experts that can do all this;

- Having the management expertise and processes in place to oversee that each piece of the puzzle is good in its own right and fits with the others.

Contractors and clients are working on all these fronts. Several universities also have research programs aimed at pieces of the puzzle. As these issues are ironed out, integration will become easier to achieve. However, what no doubt goes to the heart of the question ‘Why is it not being adopted as routine?’ is frustration with progress, which to many appears so slow that it is almost at a standstill. Surely what goes into a high-end special project today will become routine in the future. Unfortunately, I have to agree that progress is slow and that this future is not close. Nevertheless, we are progressing and we shall get there.

But in the meantime the bar will have been raised, and not by a little bit but substantially. At the same time as we are making routine what is at the high end today, we are being inundated with more and new data. Just think about the developments in downhole continuous monitoring, crosswell seismic, etc. We should also not forget the ever-larger volumes of already available data such as denser seismic, multi-component and time-lapse seismic, and more finely sampled logs. As we march on making routine what we can achieve at the high end today, what we can do tomorrow at the high end is changing also.

In summary, on the positive side, we have made steady progress in integration. However, the rate of progress is slow, and today there is a large gap between what is routine and what is possible. In addition, we are continually raising the bar on integration and will always be behind in developing methods and workflows to integrate new data types and the ever-increasing volumes of data. So, the gap will always be there. For us to derive more of the benefits integration offers, we need to close the gap and thereafter keep it small. Closing the gap and keeping it small will require our industry to increase and specifically direct investment into the technology, workflows and expertise that go into making integration a success.

Paul van Riel

Fugro, Netherlands

Answer 7

Hardly anybody in the exploration community will challenge the statement that an integrated reservoir characterization will lead to superior technical results.

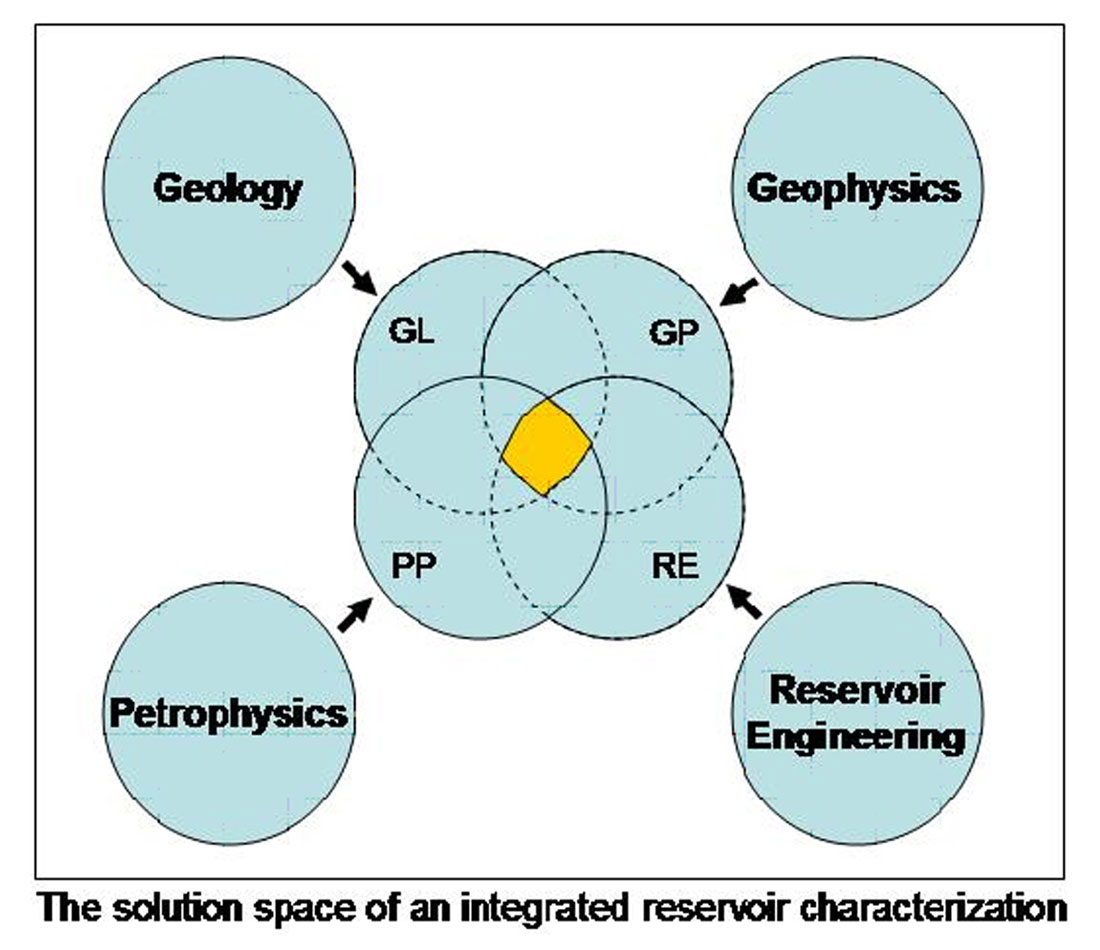

The subsurface can be characterized with independent tools: geology, petrophysics, geophysics, and reservoir engineering. Each method has its limitations and uncertainties. Combining, i.e. integrating, them reduces the solution space to the smaller zone in which the individual solutions overlap, so we arrive at more accurate results.
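The overlap argument can be made concrete with a small sketch. Assume, purely for illustration, that each discipline on its own constrains average reservoir porosity to a range; the ranges below are hypothetical numbers, not values from any project. The integrated answer is the intersection of those ranges.

```python
def intersect(ranges):
    """Return the overlap of several (low, high) ranges, or None if they do not overlap."""
    low = max(r[0] for r in ranges)
    high = min(r[1] for r in ranges)
    return (low, high) if low <= high else None

# Hypothetical porosity ranges (fraction) permitted by each discipline on its own.
estimates = {
    "geology": (0.08, 0.30),
    "petrophysics": (0.12, 0.26),
    "geophysics": (0.10, 0.22),
    "reservoir engineering": (0.15, 0.28),
}

combined = intersect(list(estimates.values()))
widths = {name: high - low for name, (low, high) in estimates.items()}
print("narrowest single-discipline width:", round(min(widths.values()), 2))
print("combined range:", combined)
```

With these assumed inputs the combined range is narrower than any single-discipline range. If the ranges did not overlap at all, `intersect` would return `None`, which in practice signals that at least one interpretation needs revisiting.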

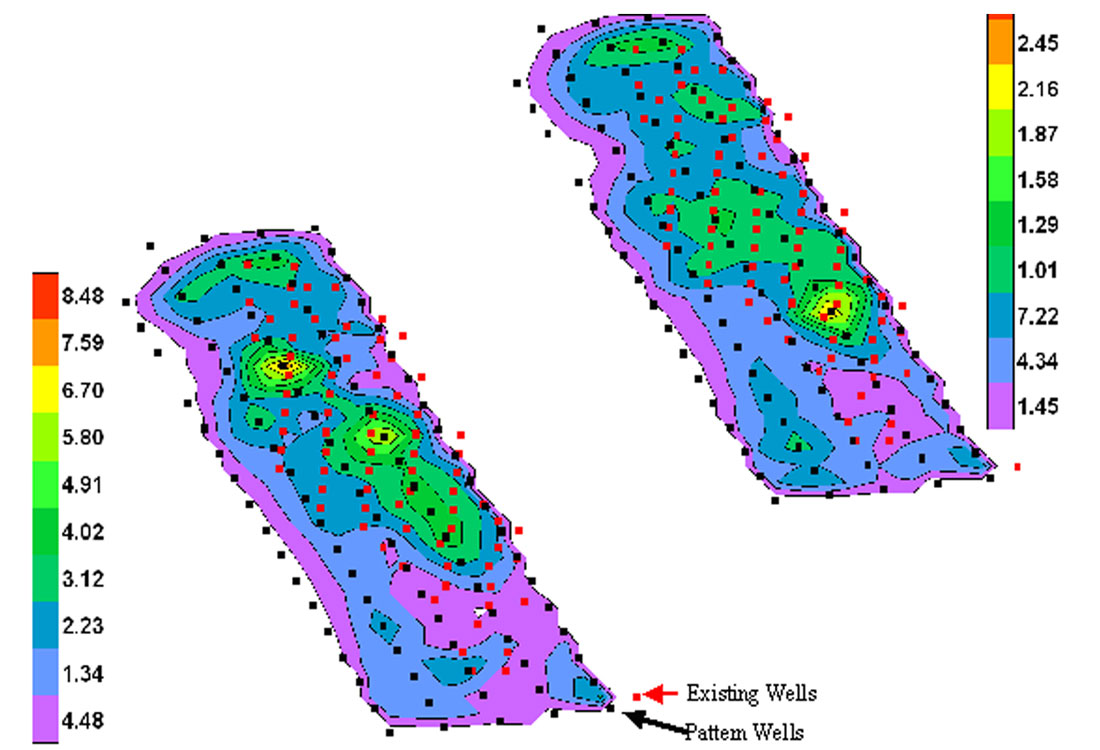

The following graph illustrates this fact:

I have worked on several reservoir characterization projects, but only two were fully integrated. The first was a North Sea gas field development project, initiated in the early 1990s, more than 100 km offshore. The second is Deep Panuke, another gas development project, a few hundred kilometers offshore from Nova Scotia, Canada. Each project required significant investments that can be optimized only if future production can be accurately predicted. This prediction comes at a price, and the more accurate the prediction, the higher the price tag. The project economics must be able to justify this investment. The most accurate prediction results from a multidisciplinary approach integrating geology, geophysics, petrophysics, and engineering.

Before discussing why it is not done on a routine basis let’s investigate what an integrated reservoir characterization implies.

The least advanced method to characterize the subsurface is by surface geology. The limitation of this method is obvious. The first step of sophistication is the integration of static well data. With increased log density more details can be extracted. However, with the increase in log information the geologist needs the support of the petrophysicist to fully analyze the details of the well data. The combined effort leads to a good understanding of the immediate neighborhood of the wells. Next we want to understand the reservoir between the wells. Geophysics and seismic provide the tools. However, more expensive 3D seismic data have to be acquired and, depending on the data quality, expensive processing such as prestack depth migration (PSDM) is essential. Depending on the required reservoir description, structural mapping may be sufficient. In general, however, a more comprehensive description using sophisticated methods is required. Soon the question of porosity between the wells will be raised and more complex seismic processing becomes necessary. For example, AVO techniques, and in particular lambda-mu-rho (LMR) analysis, can be successfully employed. The interpretation of this additional geophysical data is not a stand-alone procedure. Geological and geophysical results have to be integrated to get a good description of the static reservoir model. This integration is a technical challenge, in particular because of scale differences. In the end regional and local geology, well data and seismic are optimally tied to create the best reservoir model. However, we still have uncertainty in the result, i.e. a number of solutions are possible.

Now we are ready for the next dimension of integration: the integration of dynamic reservoir data. This stage requires another team involving engineering experts and production data. However, frequently production data are not available at all or the extent of the data set is limited. The integration of the dynamic data into the static model is a major challenge which requires numerous iterations. Each iteration involves a complete reservoir simulation. Once a match between the dynamic data and the static model is achieved, one possible solution for a reservoir model is obtained. This procedure reduces the solution space significantly, as figure 1 makes clear, but the interpreter must be aware that at this stage they are still working with only one possible realization.
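The iterative nature of this matching step, with one simulation per iteration, can be illustrated with a toy example. The sketch below assumes a single tuning parameter (a permeability multiplier) and uses `simulate_cumulative`, a hypothetical stand-in for a full reservoir simulation; real history matching adjusts many parameters and uses far more elaborate search strategies than bisection.

```python
def simulate_cumulative(perm_multiplier, base_rate=100.0, decline=0.05, n_months=24):
    """Hypothetical stand-in for a full reservoir simulation run:
    cumulative production from a monthly rate with constant fractional decline."""
    rate = base_rate * perm_multiplier
    return sum(rate * (1.0 - decline) ** m for m in range(n_months))

def history_match(observed_cum, low=0.1, high=10.0, rel_tol=1e-4, max_iter=50):
    """Bisect on the permeability multiplier until simulated cumulative
    production agrees with the observed value. Returns (multiplier, runs)."""
    for run in range(1, max_iter + 1):
        mid = 0.5 * (low + high)
        simulated = simulate_cumulative(mid)   # one "simulation" per iteration
        if abs(simulated - observed_cum) <= rel_tol * observed_cum:
            return mid, run
        if simulated < observed_cum:
            low = mid                          # too little production: raise permeability
        else:
            high = mid                         # too much production: lower permeability
    raise RuntimeError("no match within max_iter simulation runs")

observed = simulate_cumulative(1.7)            # synthetic "production data"
multiplier, runs = history_match(observed)
print(f"matched multiplier {multiplier:.3f} after {runs} simulation runs")
```

Even in this one-parameter toy the match takes a number of runs; the result is also only one model that fits the data, which is the point made in the text about working with a single realization.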

One approach that honors multiple solutions and allows the range of outcomes to be estimated is geostatistics. This approach brings new challenges, because each part of the integrated interpretation has to know and allow for a range of uncertainty.
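As a much simplified stand-in for a set of geostatistical realizations, the sketch below uses plain Monte Carlo sampling of volumetric inputs to show how a range of outcomes, rather than a single number, is obtained. All input ranges are hypothetical, the inputs are sampled independently and uniformly, and no spatial modeling is performed.

```python
import random
import statistics

def recoverable_volume(area_km2, thickness_m, porosity, saturation, recovery):
    """Volumetric estimate in million cubic metres at reservoir conditions
    (1 km2 x 1 m = 1 million m3)."""
    return area_km2 * thickness_m * porosity * saturation * recovery

rng = random.Random(7)
# Hypothetical input ranges; each discipline contributes its own uncertainty.
volumes = [
    recoverable_volume(
        area_km2=rng.uniform(8.0, 12.0),      # structural mapping
        thickness_m=rng.uniform(15.0, 25.0),  # geology and well control
        porosity=rng.uniform(0.15, 0.25),     # petrophysics and seismic
        saturation=rng.uniform(0.6, 0.8),     # petrophysics
        recovery=rng.uniform(0.5, 0.7),       # reservoir engineering
    )
    for _ in range(5000)
]

cuts = statistics.quantiles(volumes, n=10)    # nine cut points: 10th to 90th percentile
low_case, mid_case, high_case = cuts[0], statistics.median(volumes), cuts[-1]
print(f"low {low_case:.1f}, mid {mid_case:.1f}, high {high_case:.1f} (million m3)")
```

The spread between the low and high cases is what the development decision has to be robust against; narrowing any one input range narrows that spread.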

Only in the last case can we speak of a fully integrated reservoir study, which was addressed in the original question. By definition this excludes exploration projects which do not have production data available.

This brief outline of the integration process makes it obvious that integration is a matter of time, technology and manpower or, in other words, a matter of invested dollars. In the end integration is less a question of technology than a question of economics. Can the project afford to collect all the expensive data? Can the project afford to pay for a group of experts to interpret and analyze the data for weeks or months or even longer? Can the project afford the time delay which is necessary to accomplish this work? Perhaps we should simplify and reformulate these questions: Can the expected improvement of the reservoir characterization put more dollars into the pockets of the E&P company?

With that discussion we can come back to the original question: “Why is integrated reservoir characterization not being adopted as a routine?” An improved reservoir characterization will not necessarily make the economics of the project more favorable. Suboptimal reservoir characterization could lead to a poor development plan, and this becomes a major concern when we compare onshore with offshore development. Onshore, this problem may be solved by relatively cheap additional wells once the production problem becomes apparent. By contrast, the prohibitive costs of offshore drilling may force you to live with the poor development plan, ultimately leaving hydrocarbon resources in the ground.
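The onshore versus offshore argument can be expressed as a simple expected-value comparison. Every number below is hypothetical (values in $MM, probabilities of arriving at a good development plan with and without the study); the sketch only illustrates the reasoning, namely that the study pays when fixing a poor plan afterwards is expensive.

```python
def expected_value(p_good_plan, value_good, value_poor, remediation_cost, study_cost=0.0):
    """Expected project value in $MM. A poor plan is either remediated with
    extra wells (restoring value_good at a cost) or lived with, whichever is better."""
    poor_outcome = max(value_poor, value_good - remediation_cost)
    return p_good_plan * value_good + (1.0 - p_good_plan) * poor_outcome - study_cost

def study_gain(remediation_cost, study_cost, p_without=0.6, p_with=0.9,
               value_good=500.0, value_poor=300.0):
    """Change in expected value from commissioning the integrated study."""
    without = expected_value(p_without, value_good, value_poor, remediation_cost)
    with_study = expected_value(p_with, value_good, value_poor, remediation_cost, study_cost)
    return with_study - without

onshore = study_gain(remediation_cost=5.0, study_cost=3.0)     # cheap additional wells
offshore = study_gain(remediation_cost=400.0, study_cost=3.0)  # prohibitive additional wells
print(f"onshore gain:  {onshore:+.1f} $MM")
print(f"offshore gain: {offshore:+.1f} $MM")
```

Under these assumed inputs the study loses a little money onshore, because cheap wells can repair a poor plan, and adds substantial value offshore, where the poor plan would otherwise have to be lived with.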

My experience is that in the offshore case, an integrated reservoir study is done before the decision to proceed with the engineering development is made. However, as mentioned before, a fully integrated study comes only with production data, which are not always available.

Other issues which have not been addressed yet are environmental factors. In densely populated areas, particularly in Europe and especially in Germany, drilling additional wells to make up for a poor development plan is frequently impossible due to surface restrictions. The explorer is challenged to achieve an optimal depletion with a minimal environmental impact, and an integrated reservoir study is a key requirement.

Now let me address the second question: “What are the issues in this regard and how is it possible to overcome them?”

Exploration and exploitation are driven by world economics. Hydrocarbons are becoming a scarcer resource and consequently more effort will be put into an optimal depletion of existing pools. This optimal depletion can be achieved only if we have a good understanding of the subsurface, and the best characterization comes with an integrated study. Now, driven by a higher hydrocarbon price, the economics will justify integrated studies not only in offshore development.

Rainer Tonn

EnCana Corp., Calgary
