1. Introduction

The upstream oil and gas industry has long advocated and depended upon advanced technologies as a major contributor to its success, whether in the form of 3D seismic processing made possible by fast and reliable high performance computing and storage, or the ubiquity of PCs, servers, PDAs and mobile communication devices. Data and information such as reservoir models, well logs, digital data from sensors, production accounting and management information drive the industry. Forecasts show increasing demand for oil and gas, both as an energy source and as a feedstock for petrochemical-based products. This, combined with the certainty that oil and gas are finite commodities, increases the reliance on the manipulation and management of data and information to improve reserves and production growth, while reducing costs and improving the economic threshold of portfolio assets.

As we start the 21st century, technology is becoming increasingly pervasive. IBM has contributed significantly to advanced technologies such as carbon nanotube technology, the relational database and the Reduced Instruction Set Computer (RISC), all of which have had a major impact on business and society.

This article outlines some of IBM’s current thinking on future technologies, identifies key themes and speculates on their impact on the Upstream Industry.

2. Technology Themes and Trends

Moore’s law(1) has yet to be repealed: IT continues to develop rapidly as improvements in underlying technologies such as semiconductors, materials science and networks continue at exponential rates. In characterising this ongoing explosion of innovation, we identify five key themes that will continue to add business value for the next decade and beyond:

Faster – better – cheaper

Moore’s law in action, improved performance at a reduced cost

Intelligent devices

Computing devices will become smaller, more pervasive and less intrusive

Analysis – integration – federation

Managing and organising the exponential proliferation of complex and interconnected data

Resilient Technology

Robust, secure, scalable and flexible infrastructure

Real time business – real time decisions

The Virtual Computer, which supports enterprises whose business processes are integrated end-to-end across the company and with key partners and suppliers, so that they can respond rapidly to any customer demand, market opportunity or external threat

Let’s look at each of these themes individually and speculate on the implications for the Upstream Industry.

2.1 Faster – Better – Cheaper

In seismic processing and reservoir simulation, the main need is for faster, more accurate processing of increasing volumes of seismic data, together with larger, more detailed reservoir models, preferably analysed in real time. It is hoped that better analysis and modelling will improve finding success and increase recovery rates. This continues an explosion of requirements for computer power and storage, within the context of fixed or reducing IT investment budgets.

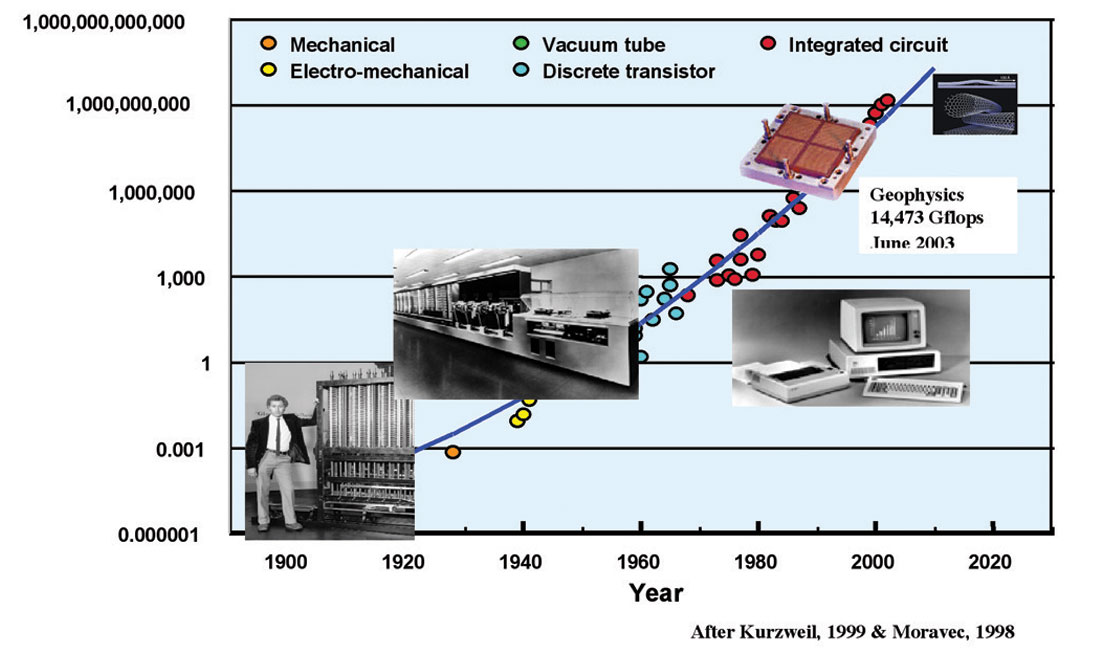

So, what does $1,000 buy in terms of compute capability? What is it likely to buy in 10 years’ time? As seen in Figure 1, the compute capability that $1,000 buys will continue to grow exponentially, i.e. the trend of faster – better – cheaper will continue.

Figure 1 - $1,000 buys:

During the next 20 years, the growth of compute power will correspond to hundreds of millions of years of evolution. Deep Blue, the chess-playing computer that beat Garry Kasparov in 1997, had the compute power of 8 teraflops, equivalent to that of a lizard brain. At the current rate of progress, it is estimated that by 2015 a supercomputer will have the compute power (not the intelligence) of a human brain and, since “yesterday’s supercomputers are today’s desktops”, that PCs will follow by 2020.

It is a truism that data storage needs always outstrip capacity. For example, in 2001 a major national oil company had 180 terabytes of disk storage, a 200x increase since 1993. However, in 2002 the organisation acquired an additional 160 terabytes! It has been estimated(3) that the immediate storage requirement for a typical oil company is approximately nine petabytes of data. Taking an extreme example, it has also been estimated that to model a reservoir 100 square kilometres to a depth of 1 kilometre at a resolution of one metre would produce a yottabyte (10^24 bytes) of data. So what is the future of storage? Will we see similar trends?
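The 200x growth in disk storage between 1993 and 2001 quoted above can be converted into an equivalent annual rate. The following is a minimal sketch of that arithmetic; the function names are illustrative, and the calculation assumes smooth compound growth over the eight years, which the source does not state.

```python
import math


def annual_growth_rate(factor: float, years: float) -> float:
    """Compound annual growth rate implied by an overall growth factor."""
    return factor ** (1.0 / years) - 1.0


def doubling_time_years(rate: float) -> float:
    """Years needed to double at a given compound annual growth rate."""
    return math.log(2.0) / math.log(1.0 + rate)


# Figures from the text: a 200x increase between 1993 and 2001.
rate = annual_growth_rate(200.0, 2001 - 1993)
print(f"Implied growth: {rate:.0%} per year")
print(f"Doubling time: {doubling_time_years(rate):.2f} years")
```

Under that assumption the implied rate is a little under 100% per year, so this company's storage was close to doubling annually.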

Magnetic storage density has experienced enormous growth rates of 100%/year in the mobile HDD segment. Again, Moore’s Law continues to operate, as recent IBM research projects validate. One such project, Collective Intelligent Bricks (CIB), uses simple storage “bricks”, each containing a microprocessor, a small number of disk drives and network communications hardware, to provide a data storage system that scales to petabytes of storage(4).

2.2 Intelligent Devices – Smart Dust

We are all familiar with the increasing trend in miniaturisation and modularisation: PDAs that offer increasing sophistication and processing capability, wearable computers and the increasing sophistication of sensors. With improvements in microchip design and increasing density of transistors on a chip, this trend will continue. Gartner predicts(5) that by 2010 there will be three embedded devices for every person worldwide. In 2001, the University of California completed the Smart Dust project(6) to explore whether an autonomous sensing, computing, and communication system could be packed into a cubic-millimetre mote (a small particle or speck) to form the basis of integrated, massively distributed sensor networks. This was successfully demonstrated in a defence environment, and the researchers believe this technology will be ubiquitous by 2010.

From an oil and gas perspective, this type of technology could have significant applications in the lifetime management and maintenance of facilities and pipelines. For example, the coating on a pipeline could sense and communicate its status and integrity, as could other physical assets.

2.3 Analysis – Integration – Federation

Data and information are the lifeblood of the oil and gas industry, forming the basis for all value-adding decisions. Statistics show that geoscientists spend 50-60% of their time finding and validating data. For an industry that relies heavily on good data collection and management, many challenges still exist:

- Exponentially increasing data volumes, including machine generated data

- Ensuring data integrity

- Collating, combining, assimilating and analysing numerous heterogeneous data types

- Effectively, efficiently and securely transmitting data within the office and around the globe

It is a paradox that it is now easier to find and access a web file created by a child in New Zealand than to access a file created on your colleague’s desktop. One of the major trends (and problems) in business is the deluge of information sources available to any computer user. The question is how to find the information one really wants, and how to utilise it.

Information available to businesses is increasing rapidly, with online data growing at nearly 100% per year, and medium and large corporate databases at nearly 200% per year. We are also undergoing a change in the type of data we are collecting and utilising, moving from transaction and structured data (i.e. data that has traditionally been captured in a database) to text and other authored and unstructured data, such as data from sensors or multimedia, which is not amenable to traditional database architectures.

‘Smart wells’, ‘electric fields’, ‘intelligent oil fields’ and ‘the Digital Oilfield of the Future’ are ideas and concepts that are currently being piloted by a number of oil companies. These initiatives exemplify the trend towards capturing and making decisions based on machine-generated data. The goal of these initiatives is to link the field development process (i.e. the sub-surface characterization) to actual production in order to maximize business value. Using existing sensor and automation technologies, large volumes of data, such as temperature and pressure, can be collected from the well bore and other production facilities. As identified above, the key challenge is to turn this continuous data stream into meaningful information.

The quantity and information density of machine-generated data will require more intelligent access methods. For instance, a production manager will want to know whether temperature and pressure variations in a producing well indicate that sand breakthrough is imminent and that remedial action is required; a reservoir engineer would like to aggregate and analyse the performance of the wells in real time and look for trends and patterns of behaviour that can assist with optimising reservoir performance. They don’t necessarily want to search terabytes of data. Instead, algorithms need to be developed that allow them to quickly find the most noteworthy data and perform further analysis to satisfy their needs. As part of IBM’s Intelligent Oil Field solution, using experience and algorithms from within and outside the oil and gas industry, we are employing our researchers to solve these very problems.
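As an illustration of what "finding the most noteworthy data" might look like in its simplest form, the sketch below flags sensor readings that deviate strongly from a rolling window of recent values. This is a generic rolling z-score technique chosen for illustration, not IBM's Intelligent Oil Field algorithm; the function name, window size, threshold and synthetic pressure values are all assumptions.

```python
from collections import deque
from statistics import mean, stdev


def flag_noteworthy(readings, window=20, threshold=3.0):
    """Yield (index, value) for readings far outside the recent rolling window."""
    recent = deque(maxlen=window)
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu = mean(recent)
            sigma = stdev(recent)
            # Flag only when the window has some spread to compare against.
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                yield i, value
        recent.append(value)


# Synthetic (hypothetical) well-bore pressure series with one injected spike.
readings = [200.0 + 0.5 * ((i % 5) - 2) for i in range(60)]
readings[45] = 230.0

flagged = list(flag_noteworthy(readings))
print(flagged)
```

A production system would need to handle far more than this, for example correlated temperature and pressure signals and sensor drift, but the principle is the same: the engineer reviews a handful of flagged points rather than the full stream.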

Varied control and ownership of data sources will continue to drive information integration towards a federated rather than an aggregated model. For instance, the continuing need to expand upstream activities into Eastern Russia and offshore West Africa requires seismic, well log, production and other data to be available across and, in some instances, outside of the enterprise. Data federation applications such as approximate query and automated catalogue generation will support this need, as will the emergence of the On Demand enterprise.
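The difference between the two models can be sketched in a few lines: in a federated model each owner keeps its data and answers queries itself, and results are merged only at query time, rather than being copied into one central store. The example below is a hypothetical illustration; the source names, record fields and functions are assumptions and do not describe any particular product.

```python
from typing import Callable, Dict, List

Record = Dict[str, object]
Source = Callable[[str], List[Record]]


def make_source(owner: str, records: List[Record]) -> Source:
    """Wrap one owner's records behind a query function; the data stays with the owner."""
    def query(well_id: str) -> List[Record]:
        return [dict(r, owner=owner) for r in records if r["well_id"] == well_id]
    return query


def federated_query(sources: List[Source], well_id: str) -> List[Record]:
    """Ask every source the same question and merge the answers at query time."""
    results: List[Record] = []
    for source in sources:
        results.extend(source(well_id))
    return results


# Hypothetical sources: an operator's well logs and a partner's production data.
operator = make_source("operator", [
    {"well_id": "W-1", "kind": "well_log"},
    {"well_id": "W-2", "kind": "well_log"},
])
partner = make_source("partner", [
    {"well_id": "W-1", "kind": "production"},
])

hits = federated_query([operator, partner], "W-1")
print(hits)
```

Because each source is only a query function, an owner can apply its own access controls or withdraw from the federation without any central copy needing to be changed.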

2.4 Resilient Technology

Increasing reliance on technology will require robust, scalable, secure and flexible infrastructures that protect businesses from security breaches while facilitating easier interconnectivity between businesses, partners and networks. To achieve this, these computing infrastructures will grow beyond the human ability to manage them. Consequently, there will be a trend towards Autonomic Computing, i.e. self-management technologies that provide reliable, continuously available and robust infrastructure.

Specifically, IT autonomic systems will be:

Self-optimising: designed to automatically manage resources to allow the servers to meet the enterprise needs in the most efficient fashion

Self-configuring: designed to define themselves “on the fly”

Self-healing: automatic problem determination and resolution

Self-protecting: designed to protect themselves from any unauthorized access anywhere

All of IBM’s products are now being designed with autonomic features built in, making the individual components more reliable. In addition, IBM and other leading software companies are delivering intelligent software to ‘orchestrate’ entire systems, dynamically adapting the configuration and behaviour of the system based on business policies.

2.5 Real time business – real time decisions

The technology trends summarised above will support and drive businesses and enterprises to become ‘real’, real-time organisations. Businesses will become On Demand; strategic business decisions will be made and based on a continual flow of new information. This will require business processes to be integrated, end-to-end across the company with key partners and suppliers to enable the enterprise to respond rapidly to any customer demand, market opportunity or external threat.

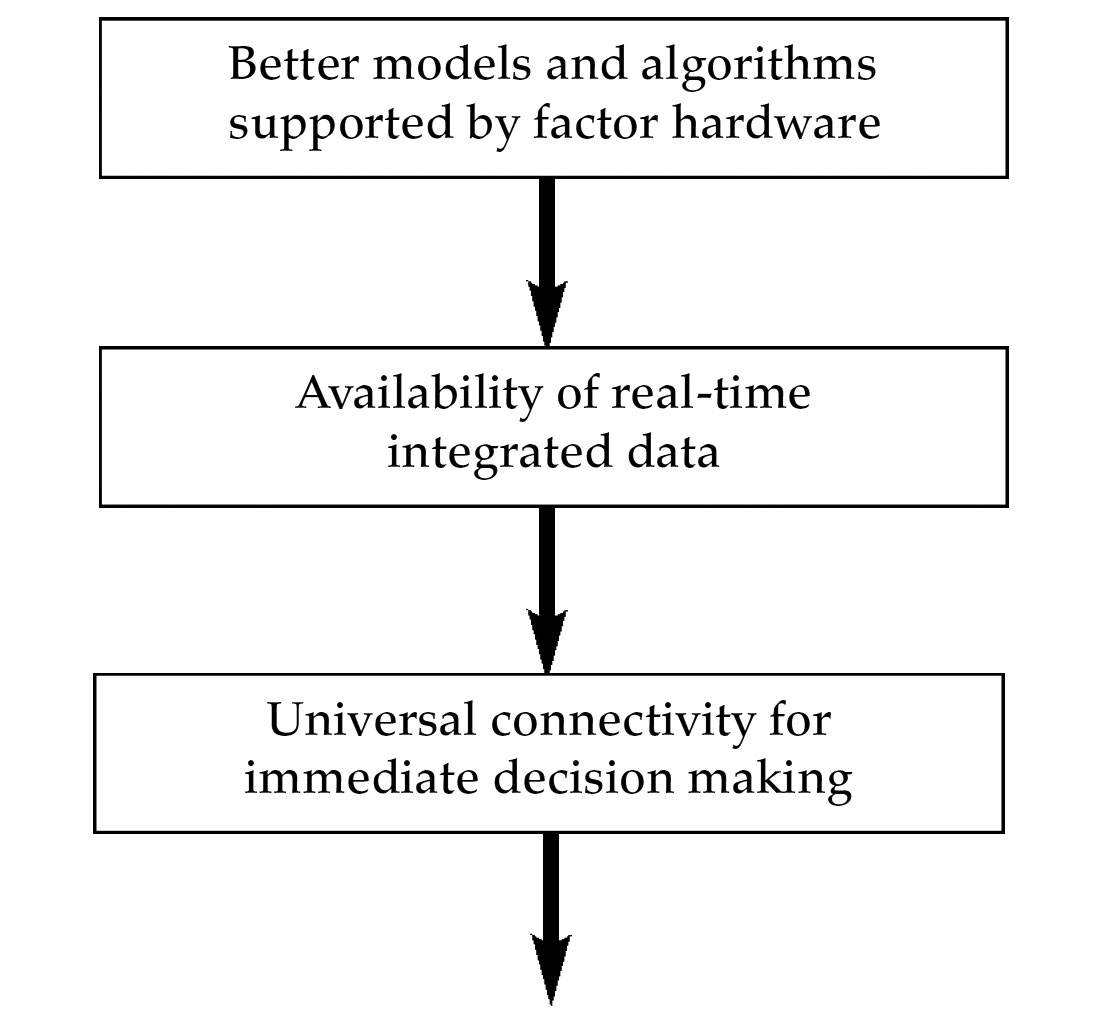

This will be facilitated by technologies such as web services and GRID computing, which will allow complex sets of distributed services to appear as though they exist and run on a single “machine”, a virtual computer. New applications will be written for the virtual operating system and the compute and data engines that will provide easy, rapid integration of business processes and information. How are these On Demand technologies impacting the Upstream Industry now?

Reservoir characterization blends science with graphic art, using the insights and experience of a range of professionals. The ability to share a common view of the reservoir, together with the assumptions used in its characterization, leads to better decision making about its likely behaviour and the optimal way to maximize production. This environment will be supported by remote visualisation systems that can operate over bandwidth-constrained networks.

With workloads varying as oil prices fluctuate, do you invest in IT for peak production or for a smoothed demand profile? GRID and on demand computing techniques help address this business problem. GRID is a distributed computing platform that runs over a network (e.g. the Internet), linking servers, clients and storage to dynamically form virtual servers and storage pools, and supporting the creation of virtual organizations, both ad hoc and formal. GRID is based on open standards such as the Open Grid Services Architecture, which help protect infrastructure investment from shifts in technology and changes in business models. In the upstream environment, GRID can deliver uniform computing systems and optimise IT assets for all sites currently performing geoscience, engineering, technical and traditional back-office business computing.
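The peak-versus-smoothed question can be made concrete with a small calculation. The sketch below compares owning enough capacity for the peak with owning less and sending the overflow to a grid or on demand provider. The monthly demand figures and the function name are hypothetical, chosen only to illustrate the trade-off.

```python
def provisioning_summary(demand, owned_capacity):
    """Utilisation of owned capacity, and the demand that must burst elsewhere."""
    served_locally = sum(min(d, owned_capacity) for d in demand)
    burst = sum(max(d - owned_capacity, 0) for d in demand)
    utilisation = served_locally / (owned_capacity * len(demand))
    return {"utilisation": utilisation, "burst": burst}


# Hypothetical monthly compute demand, in arbitrary units, with two busy months.
demand = [40, 45, 50, 120, 60, 45, 40, 110, 50, 45, 40, 55]

peak = provisioning_summary(demand, owned_capacity=max(demand))
smoothed = provisioning_summary(demand, owned_capacity=60)

print(f"Sized for peak: {peak['utilisation']:.0%} utilised, burst {peak['burst']}")
print(f"Sized at 60:    {smoothed['utilisation']:.0%} utilised, burst {smoothed['burst']}")
```

With these illustrative numbers, capacity sized for the peak sits idle for much of the year, while the smaller installation is better utilised but depends on external capacity in the busy months.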

3. Conclusions

Technologies have dramatically changed the face of the Upstream business environment and will continue to do so, evolving from ‘back-office’ supporting functions into mission-critical capabilities that deliver real business value and support changing business models. IBM believes the high-level trends described will facilitate a transformation of this industry; the question is when this will occur and how long it will take. The inhibitor to transformation will not be technology but will be cultural, organisational and, above all, human.

The Canadian petroleum industry is facing its own challenges in managing the intrusion of more and more technology into all areas of the business. The need for collaboration between multiple parties situated anywhere in the world, the importance of real-time information to optimise production operations, and the increased need for reliable corporate reporting tools are just some of the drivers behind the raised profile of technology in today’s industry. IBM is working with our clients to help them collect, manage and interpret ever-increasing volumes of data and to transform that data into information, knowledge and, finally, corporate wisdom.
