Introduction

Large-scale visualization systems have moved from the fringes of our industry to become integral to daily workflows at many oil and gas companies. Visualization is not just about viewing our own data; it is about how we share knowledge with each other, and it is a key element in interdisciplinary collaboration. In this article, we examine how visual collaboration exploits the way humans perceive the world around them.

The geophysical industry's challenge

The demographics of our industry are unfavorable. A dwindling body of aging geoscientists is asked to find more reserves every year. The reserves we must find are harder to reach and lie in increasingly complex environments: geologically, politically and economically. As a result, fewer and fewer geoscientists have the broad and deep skill sets required to be effective solo explorationists. This has led to the use of asset teams, in which a group of experts collaborates to reach team decisions. Whilst this approach has yielded good results, significant inefficiency exists at every interface: between hardware and software, and between people with different cultures and professional experience. The challenge for the geophysical industry (and most other knowledge-based businesses) is this: how do we take a team of individuals and extract a team performance that exceeds the sum of its individual parts?

Visual collaboration today

Interpreters don't need visualization to interpret data. It may be a useful aid, but most successful interpreters owe part of their success to their ability to assemble complex 3D models in their heads as they examine their data. Visualization really adds value when the interpreter has to share the fruits of his labor with other geoscientists, drillers and partners who may be less familiar with the dataset.

If well plans are presented in plan and elevation views, and interpreted surfaces are presented as maps, drillers and geophysicists waste time translating between these views to build a shared mental model of the subsurface. Even the simplest integrated 3D visualization, showing the surface and the well path together, can provide an instant basis for mutual comprehension.

Let's look at some techniques that can enhance a visualization, and even create a "virtual reality". Each of them improves the effectiveness of sharing knowledge through visualization, but cost and suitability for the task should always be weighed before investing in any of these technologies.

Big pictures: Thousands of years ago, the animals that humans hunted, and were hunted by, ranged in size from a mouse to a mammoth, so our cognitive system has evolved to give most attention to objects within an order of magnitude or so of our own size. Squinting to interpret a one-pixel anomaly on a 19-inch monitor is not natural. Human-scale images are easier and more comfortable to interpret.

Immersion: If a user is presented with multiple information streams, some part of the brain will process each one. If you work at a computer screen, for example, part of your brain is processing the view through the window, another part is identifying the people passing in the corridor, and so on. To devote the user's full attention to a task, the user must be immersed in that task. The effectiveness of a visualization can therefore be enhanced by extending the screen to fill the user's field of view. This can become very expensive, so at the very least, the parts of the user's field of view not covered by the visualization should be free from distraction, for example by keeping the surroundings dark. Compare a movie theater with a TV. The theater has a large screen, surrounded by darkness and shaped roughly to fit your field of view. As a result, we lose ourselves in the movie, and the experience is far more compelling.

Stereo vision: Humans have two eyes, which aid in the perception of spatial relationships. Although we have other depth cues (motion parallax, depth-of-field cueing, etc.), rendering a separate image for each eye is a valuable tool for understanding complex spatial relationships.

High Frame Rates: When we examine an object in real life, we hold it in our hands and turn it around, or walk around it to gain a new perspective. To fool the brain into accepting the reality of an image, the computer must sustain around 10 to 15 frames per second. Many applications can support this frame rate locally, but it can become a big issue when remote visualization is required, because every frame must then also be delivered across the network.
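A frame-rate target translates directly into a time budget per frame and, for remote visualization, into a network bandwidth. The sketch below is a back-of-envelope calculator under stated assumptions: a hypothetical 1280 by 1024 display and uncompressed 24-bit frames. Real remote-rendering systems compress heavily, so the figures illustrate why remote delivery is demanding rather than measure any particular system; the function names are illustrative.

```python
# Hypothetical back-of-envelope calculator; the resolution and the
# uncompressed 24-bit pixel format are illustrative assumptions.

def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to render (and, if remote, deliver) one frame."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def uncompressed_stream_mbps(width: int, height: int, fps: float,
                             bytes_per_pixel: int = 3,
                             stereo: bool = False) -> float:
    """Megabits per second needed to ship raw frames to a remote display.

    A stereo view needs one image per eye, so it doubles the data rate.
    """
    views = 2 if stereo else 1
    bits_per_frame = width * height * bytes_per_pixel * 8
    return bits_per_frame * fps * views / 1e6


if __name__ == "__main__":
    for fps in (10, 15):
        mono = uncompressed_stream_mbps(1280, 1024, fps)
        both_eyes = uncompressed_stream_mbps(1280, 1024, fps, stereo=True)
        print(f"{fps} fps: {frame_budget_ms(fps):.1f} ms per frame, "
              f"{mono:.0f} Mbit/s mono, {both_eyes:.0f} Mbit/s stereo")
```

Under these assumptions, 15 frames per second leaves about 67 ms per frame, and an uncompressed mono stream needs roughly 470 Mbit/s, nearly double that in stereo.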

Head Tracking: Many new applications can render a scene dynamically from the user's changing point of view. Head-tracking systems are now available that report the user's location to the application, allowing it to create a correct rendering for the user's current position. The disadvantage is that the perspective is computed for the tracked user only, so other (non-tracked) users see a distorted image. The cost of wireless tracking systems is coming down, but this remains an expensive option for many applications.
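One common way a head-tracked display can produce a correct perspective is to recompute an asymmetric ("off-axis") view frustum every frame from the tracked eye position. The minimal sketch below returns the near-plane extents in the argument order of OpenGL's legacy `glFrustum`. It assumes a single flat screen lying in the plane z = 0 and centred on the origin, a tracker reporting eye positions in the same coordinates, and a head facing the screen squarely; the names `Frustum` and `off_axis_frustum` are hypothetical. A complete renderer would also translate the view by the eye position and handle tilted or multi-wall screens. Calling it once per eye, with the eyes offset by half an assumed interocular distance, yields the stereo pair.

```python
from dataclasses import dataclass


@dataclass
class Frustum:
    """Near-plane extents in the argument order of OpenGL's glFrustum."""
    left: float
    right: float
    bottom: float
    top: float
    near: float
    far: float


def off_axis_frustum(eye_x: float, eye_y: float, eye_z: float,
                     screen_w: float, screen_h: float,
                     near: float, far: float) -> Frustum:
    """Asymmetric frustum for a tracked eye in front of a flat screen.

    Assumes the screen lies in the plane z = 0, centred on the origin, and
    the eye is at (eye_x, eye_y, eye_z) with eye_z > 0, looking towards -z.
    All lengths must share one unit (e.g. metres).
    """
    if eye_z <= 0:
        raise ValueError("the eye must be in front of the screen (eye_z > 0)")
    # Similar triangles: scale the screen edges, measured relative to the
    # eye, from the screen distance down to the near-plane distance.
    scale = near / eye_z
    return Frustum(
        left=(-screen_w / 2 - eye_x) * scale,
        right=(screen_w / 2 - eye_x) * scale,
        bottom=(-screen_h / 2 - eye_y) * scale,
        top=(screen_h / 2 - eye_y) * scale,
        near=near,
        far=far,
    )


if __name__ == "__main__":
    # Illustrative numbers: a 4 m x 2 m wall display, a tracked head 3 m
    # away and 0.5 m left of centre, and an assumed 65 mm eye separation.
    head_x, head_y, head_z = -0.5, 0.0, 3.0
    half_iod = 0.065 / 2
    for name, eye_x in (("left eye", head_x - half_iod),
                        ("right eye", head_x + half_iod)):
        f = off_axis_frustum(eye_x, head_y, head_z, 4.0, 2.0, 0.1, 100.0)
        print(f"{name}: l={f.left:.4f} r={f.right:.4f} "
              f"b={f.bottom:.4f} t={f.top:.4f}")
```

Because the frustum is computed for one tracked eye position, anyone standing elsewhere sees a projection meant for someone else, which is the source of the distortion that non-tracked users experience.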

Cognitive seams

Every time we refocus our attention, the brain must reconfigure itself for the new task. When a reader is interrupted, he often has to backtrack and re-read the last paragraph. Similarly, every time a user shifts his attention to a control panel or menu and back to the data, cognitive power is wasted. Today, these cognitive seams litter our visualizations. The next generation of applications will use technologies such as voice user interfaces and truly integrated visualization to remove these seams and make visualization more effective.

The other four senses

One common problem with visualization is 'overload'. We can represent multidimensional data using size, shape, color, intensity, opacity and so on, but eventually we run out of visual attributes. One way to communicate even more information in real time may be to use the other four senses.

Most applications use sound only to indicate alarms. Although hearing is a "lower bandwidth" sense, it is fully three-dimensional and is capable of much more. Serious research is under way in several places on applying sound and touch (haptic feedback) to seismic interpretation. There are even commercially available devices that generate smells, and it is not hard to see how sound, touch and even smell may become natural extensions of visualization for the interpreter, though at this point, the use of taste would appear to be restricted to traditional dietary applications!

Trust and collaboration

Working successfully with others involves building trust. People work best with people they know they can rely on, technically and commercially. Humans have evolved a complex social protocol to build and continually verify trust: hand gestures, eye contact, tone of voice, handshakes and so on. One drawback of stereo visualization is that the stereo glasses often restrict or eliminate eye contact. This can be addressed by alternating periods of stereo visualization with conventional viewing. New technologies being developed for the mass entertainment industry may eliminate the problem altogether by permitting stereo without glasses.

Trust is more difficult to build in a remote context. When collaborating with partners located in a separate building, trust is even more important, because it is less likely to be affirmed through daily contact; yet most collaboration methods (NetMeeting, telephone, video conferencing, etc.) do little or nothing to address this important need.

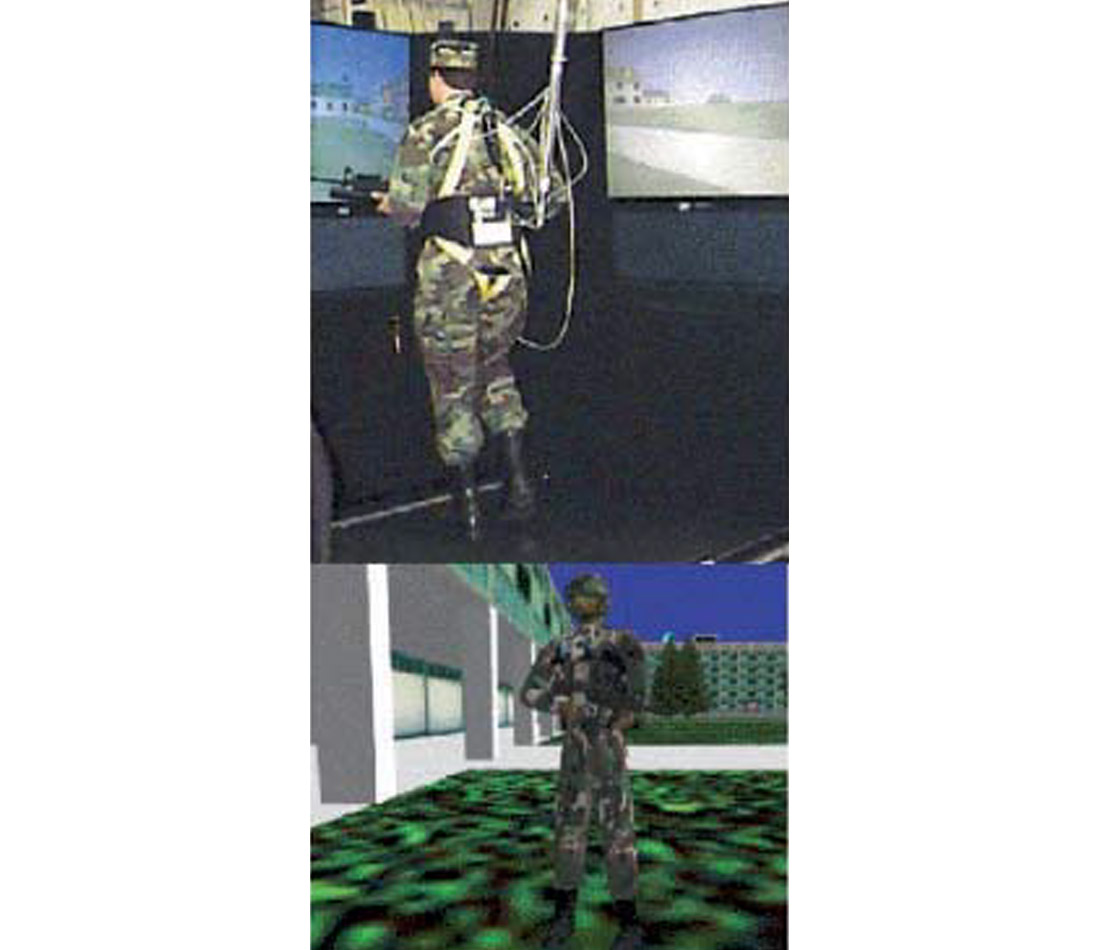

Advanced military simulations and the next generation of video games support the insertion of avatars into a scene. An avatar is a virtual depiction of a real user. It could be a simple static image, or it could use full-body tracking to render real-time 3D images of all the users within the shared visualization environment. The tracking systems and communications required for truly life-like human renderings are prohibitively expensive today, but in the future we can expect to hold virtual meetings inside our hydrocarbon reservoirs, with attendees physically located all over the world. This and other remote collaboration technologies were discussed in an excellent article in Scientific American in April 2001.

Conclusions

Technology is finally adapting to the way that humans work, reversing the historical pattern of humans adapting to new technologies. Visual collaboration is already a cost-effective technology, provided that systems are selected to meet real needs. However, what we see as visual collaboration today is a shadow of what is yet to come. As those famous Canadians Randy Bachman and C.F. "Fred" Turner once sang, "You Ain't Seen Nothing Yet".

Acknowledgements

Many of the ideas in this article were generated by, or brought to my attention by, Roice Nelson, Stuart Jackson, John Pixton, Craig Petersen and other former colleagues at Continuum Resources. "Virtually There" by Jaron Lanier (Scientific American, April 2001) led me to a great deal of exciting research at Michigan State University, the University of Washington and elsewhere.

Join the Conversation

Interested in starting, or contributing to a conversation about an article or issue of the RECORDER? Join our CSEG LinkedIn Group.
