Geophysical data compliancy means only using data you are entitled to use for an implicitly defined purpose and timeframe. Geophysical / seismic data is complex, as it is an image of a defined position on the earth. That representation is multi-dimensional, multi-faceted and geographically based, consisting of raw and interpreted data. Add to this the fact that the data resides on different media types and in different formats, stored internally or with an external storage facility, and the entanglement grows. If that isn’t enough, the oil and gas industry is an ever-changing and convoluted environment, due to merger and acquisition (M&A) activities, regulatory obligations, contract law and obligations to other exploration companies. Let us explore how corporations can use technology and process to manage this complex dataset and achieve governance.

As artificial intelligence and big data gain traction in the information management realm, allowing corporations to make more informed decisions, data management processes must adapt and modernize as well. Data management must change to keep pace with the demand for answers on legal rights and ethical obligations. Validating data and information within a corporate environment can seem like a monumental endeavor. However, technology can contribute to the success of these projects: it can sift through large volumes of data, let data analytics provide insight, and enable consistent monitoring of the environment so that your company becomes and remains data compliant.

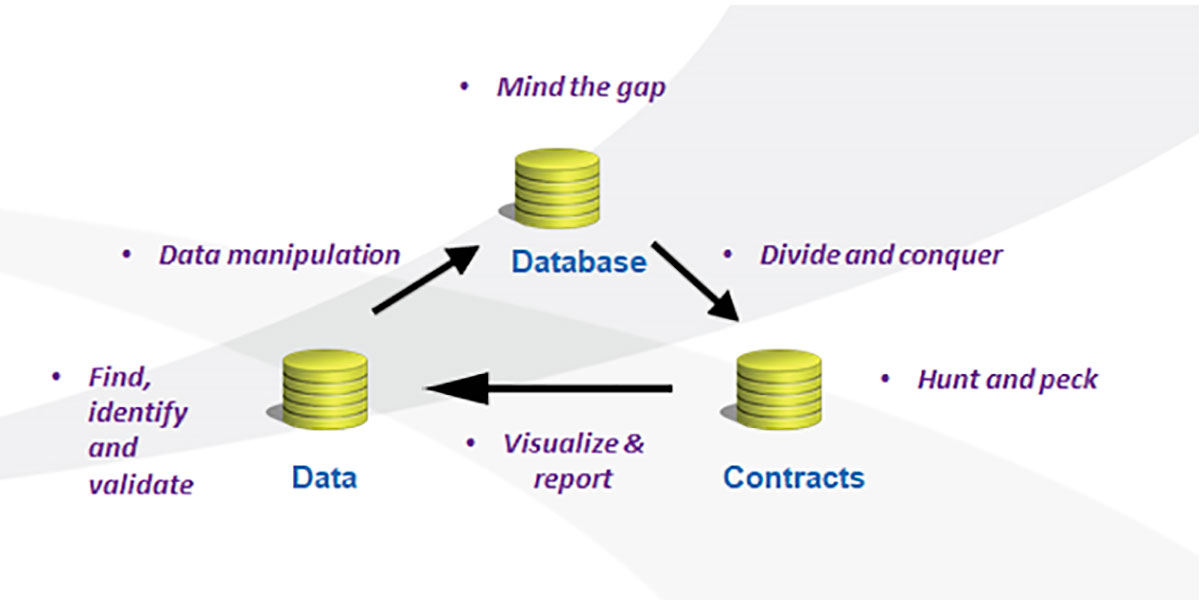

One such data model breaks the overall compliancy progression into a six-stage process. When those six stages are applied in a continuous cycle, the result is a fully governed dataset that meets all the criteria of governance. The six stages consist of three asset stages and three transitional stages. All stages must be completed to achieve compliance, though stages may occur simultaneously. This model differs from others in that it holds that a corporation can never really achieve compliancy without relying on technology, visualization tools and repeatable monitoring systems. The three transitional stages are the connectors, which allow a project to move through the overall data compliancy process and link the three asset stages together. We refer to these transitional stages as Divide & Conquer, Visualize & Report and, finally, Data Manipulation.

Asset Stage 1 – Database

The Database is the first asset stage. This is where the data compliance process begins, and it ultimately allows a corporation to move into a long-term data governance environment. It is the single point of access and source of truth. As the data management domain adapts and modernizes, the applications it utilizes must also adapt and change. Given the shift towards artificial intelligence (AI), data management suites should incorporate AI, interpret the data through both AI and analytics, and present the data using dashboards rather than rows and columns. It is at this first asset stage that analytical tools must be applied to the database. If your current data management application does not have this capability integrated, the IT department can assist in selecting an appropriate external analytical tool to implement within the corporate environment. This step will be critical in the later portions of the compliance process.

Transition Stage 1 – Database to Contracts (Divide and Conquer)

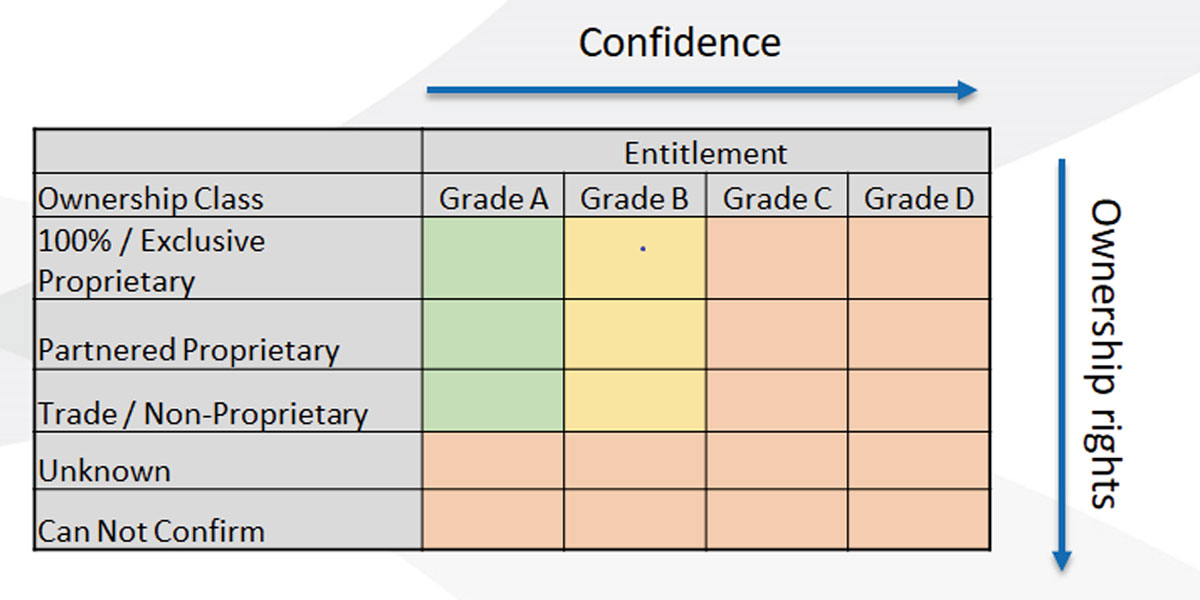

The purpose of the first transitional stage, Divide & Conquer, is for the corporation to properly understand its legal risks and exposure and to apply mechanisms to mitigate these risks. Ownership and Entitlement are often confused with one another, but they have different definitions. Ownership simply asks the question: is the data owned or licensed? Entitlement focuses on the contract and the proper use of the data. The critical component of this transition stage is establishing an entitlement rating matrix. Built with assistance from the legal department, a rating matrix records how a survey has been classified, the confidence in that classification and therefore the confidence in the use of the data. Historically, contracts have been inconsistent and may be missing critical information pertaining to the asset, creating ambiguity and risk. These risks increase liability, which leads to increased exposure to lawsuits.
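As an illustration only, an entitlement rating matrix could be represented as a small lookup plus a classification rule. The rating letters, their descriptions, the `Survey` fields and the `rate` logic below are all hypothetical; the actual scale would be agreed with the legal department.

```python
from dataclasses import dataclass

# Hypothetical rating scale; a real matrix would be defined with the legal department.
RATINGS = {
    "A": "Contract located, terms complete: high confidence in use",
    "B": "Contract located, key terms missing: moderate confidence",
    "C": "No contract located, ownership known from other records: low confidence",
    "D": "No contract and ownership unknown: do not use pending investigation",
}

@dataclass
class Survey:
    name: str
    ownership: str        # "owned", "licensed" or "unknown"
    contract_found: bool
    terms_complete: bool

def rate(survey: Survey) -> str:
    """Classify a survey into one of the hypothetical rating bands."""
    if not survey.contract_found:
        return "D" if survey.ownership == "unknown" else "C"
    return "A" if survey.terms_complete else "B"

def group_by_rating(surveys):
    """Group survey names by rating so each band can be investigated together."""
    groups = {}
    for survey in surveys:
        groups.setdefault(rate(survey), []).append(survey.name)
    return groups
```

Grouping by rating gives each band its own work queue, so the lowest-confidence surveys can be investigated first.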

Once a rating matrix has been implemented, it’s time to start analyzing the database and grouping the information by “similar / like” ownership. The second asset stage, Hunt & Peck, is the time to analyze the contracts, master license agreements (MLAs), authorizations for expenditure (AFEs), etc., to understand how the data was acquired and to know what documentation and agreements are required as proof.

Asset Stage 2 – Contracts

The second asset stage is the Contracts, which are the source of entitlements. The critical component of this step is to locate all contracts. This is often highly problematic, as contracts are usually managed within different departments such as accounting, land, legal, the business itself and the records team. A clear understanding of each department’s record taxonomy, and access (even if only read access) to its documents, are crucial for this stage to be successful. With assistance from the IT department, crawling technology can be used to help in this endeavor.

Once contract access has been established, determine specific business rules around what data the crawler is to look for. As expected, companies have a large number of files and documents; defining well-targeted business rules is key to minimizing the number of drives and documents that the crawler needs to analyze. This in turn helps to reduce scope and can produce faster and more meaningful results. Physical or previously scanned documents should be processed through an optical character recognition (OCR) application. This enhances the crawler’s ability to extract information automatically from the documents for easy population into the database and reduces some of the traditionally manual, labor-intensive methods. While OCR tools have made great strides over the past decade and there are many to select from, not all OCR applications are created equal. Make sure the IT team does its due diligence prior to selecting a program. The purpose of an OCR tool is to extract as much information as possible from the document in order to minimize the manual workflow. For the greatest success, the AI / OCR tools will require upfront training so that they understand the types of documents they are examining and what information to extract. The more training provided, the better the tool becomes at recognizing the differences. Programs such as these are merely a mechanism to assist and to reduce time and manual effort in the workflow.
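A minimal sketch of how targeted business rules can narrow a crawl is given below. It uses only the Python standard library; the file extensions, the name pattern and the `crawl` function are hypothetical examples, not rules taken from any particular crawling product.

```python
import re
from pathlib import Path

# Hypothetical business rules: which file types to open and which
# file names suggest a contract, licence agreement, MLA or AFE.
RULES = {
    "extensions": {".pdf", ".docx", ".tif", ".txt"},
    "name_pattern": re.compile(
        r"licen[cs]e|agreement|(?<![a-z])(?:mla|afe)(?![a-z])",
        re.IGNORECASE,
    ),
}

def crawl(root, rules=RULES):
    """Yield files under `root` whose extension and name satisfy the rules."""
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        if path.suffix.lower() not in rules["extensions"]:
            continue  # rule 1: skip file types that cannot hold a contract
        if rules["name_pattern"].search(path.name):
            yield path  # rule 2: the name hints at a contract document
```

Filtering on cheap attributes such as extension and file name first means that only the surviving candidates need to go through the slower OCR and text-extraction steps.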

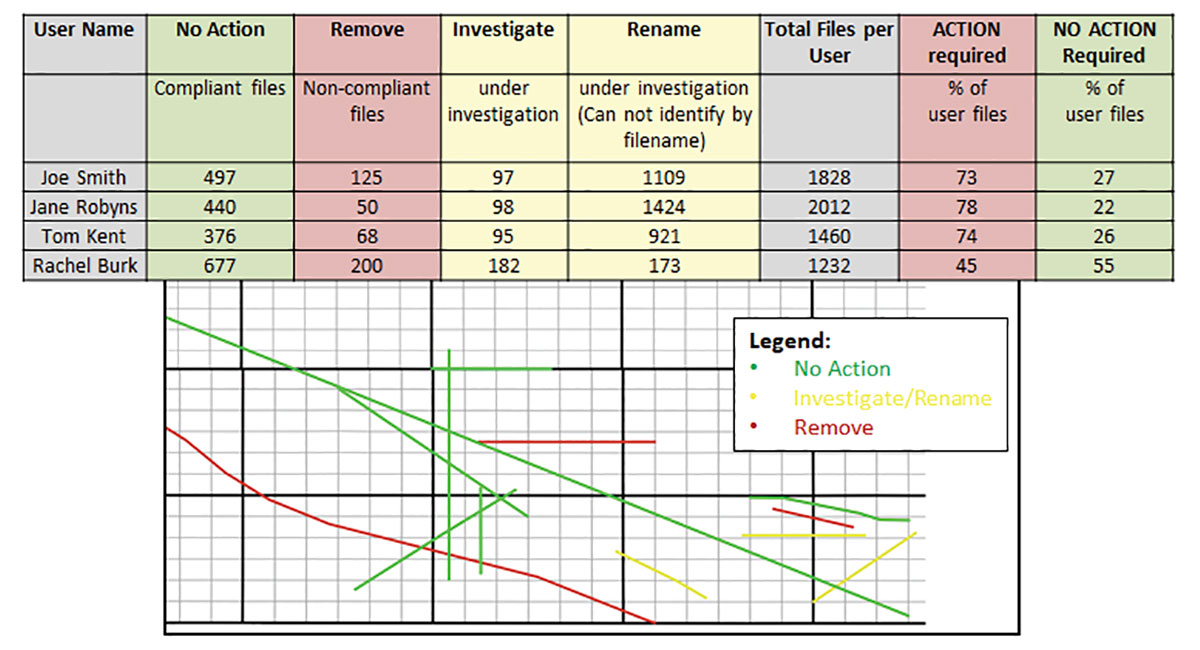

The geographical representation pictured above represents the data the corporation can use, the data it cannot use and which data is still under investigation. The color coded report identifies each user, the total number of files in question, and the magnitude of risk to the corporation should data be used without entitlement.

The entitlement rating matrix will provide assistance while validating existing contracts, when information on the ownership / entitlement documentation is scarce or ambiguous. Another challenge to the data quality is that users can alter file names, breaking the relationship between the data and the associated contracts in their personal projects. If there is no mechanism to link the two back together, this creates an environment of risk for the corporation.

Asset Stage 3 – the Data

The last stage is the Data, consisting of two segments: physical data stored externally and digital data stored internally, the latter being where the geophysicist interprets the data. Each segment is handled in the same manner. Internal data often presents more challenges than external data, given that a level of participation is required from the user community and that data filenames can be, and historically have been, manipulated. This stage is where the entire corporation’s effort comes together with the purpose of comprehending the big picture. Using a GIS / visualization program to display the information gathered is an effective way for people to understand the complexity, severity and liability of the corporate internal data environment. It also provides a picture of, and a clear path towards, achieving data compliance.

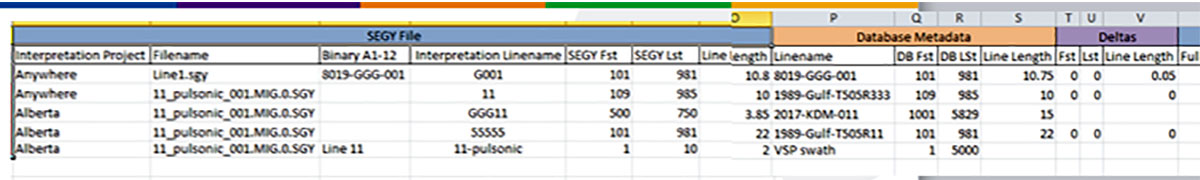

Tools can be scripted and used to interrogate the contents of an interpretation project and the SEG-Y files themselves in order to ‘scrape’ the metadata from the binary, the EBCDIC and the trace headers to identify unique identifiers. This information can then be matched to the source of truth (database) and source of entitlement (contracts) and, when compared to the navigational data, can provide a level of confidence that the located data is assigned to the correct survey and contract in the database. User intervention and participation are imperative, as reporting mechanisms can only identify the likelihood that something is a match. The information provided within the report must still be validated and either accepted or rejected. Once accepted, the data is manipulated and linked so the data, the contract and the database can operate as an integrated asset.
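The idea that a report can only give the likelihood of a match can be sketched with a simple name-similarity score. This example compares names only; the comparison against navigational data described above is not shown. The `normalize` and `rank_candidates` functions and the 0.6 threshold are hypothetical choices.

```python
import re
from difflib import SequenceMatcher

def normalize(name):
    """Upper-case a line name and drop separators so trivial renames compare equal."""
    return re.sub(r"[^A-Z0-9]", "", name.upper())

def rank_candidates(scraped_name, database_names, threshold=0.6):
    """Return (score, database_name) pairs at or above the threshold, best first.

    The score is a likelihood indicator only; a user must still accept or
    reject each suggested match.
    """
    key = normalize(scraped_name)
    scored = [
        (round(SequenceMatcher(None, key, normalize(candidate)).ratio(), 2), candidate)
        for candidate in database_names
    ]
    return sorted((pair for pair in scored if pair[0] >= threshold), reverse=True)
```

A call such as `rank_candidates("line_84-123", ["LINE 84-123", "XYZ"])` would propose only the first database name, which a user then accepts or rejects.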

Transition Stage 3 – Data to Database (Data Manipulation)

The purpose of the final transitional stage, Data Manipulation, is to permanently label data the corporation is entitled to use. This is achieved through investigation and the compliance process.

The reality is data comes into the user environment in multiple formats, on multiple devices and media. Common practice may be to rename a line when it comes into a corporation, breaking the relationship between the contract, the database and the data. This may create a situation where the seismic file names no longer have a reference to the contract or database line names.

If the relationship between the seismic line in the database and the seismic data is broken, scripts can be used to interrogate the data within the interpretation project and the SEG-Y files to rebuild the relationship, using the embedded metadata.

Tools such as Python or FME can be used to scrape metadata from the binary, the EBCDIC and the trace headers to identify first and last shot points, line name and unique identifiers. GIS systems can calculate line length or square kilometers, and proximity routines can be used to establish spatial probability.
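A minimal Python sketch of such header scraping follows, using only the standard library. It assumes a conventional SEG-Y layout: a 3200-byte EBCDIC textual header, a 400-byte big-endian binary header, no extended textual headers and fixed-length traces. Taking the shot point from trace header bytes 17 to 20 (energy source point number) is also an assumption, since real files often store it elsewhere. The `scrape_segy` name is hypothetical.

```python
import os
import struct

# Bytes per sample for common SEG-Y data sample format codes.
BYTES_PER_SAMPLE = {1: 4, 2: 4, 3: 2, 5: 4, 8: 1}

def scrape_segy(path):
    """Read basic identifying metadata from the three SEG-Y header types."""
    with open(path, "rb") as f:
        textual = f.read(3200).decode("cp037")      # EBCDIC textual header
        binary = f.read(400)                        # binary file header
        line_number = struct.unpack_from(">i", binary, 4)[0]   # bytes 3205-3208
        n_samples = struct.unpack_from(">H", binary, 20)[0]    # bytes 3221-3222
        fmt_code = struct.unpack_from(">H", binary, 24)[0]     # bytes 3225-3226

        trace_len = 240 + n_samples * BYTES_PER_SAMPLE[fmt_code]
        n_traces = (os.path.getsize(path) - 3600) // trace_len

        def source_point(index):
            # Assumed location: trace header bytes 17-20.
            f.seek(3600 + index * trace_len + 16)
            return struct.unpack(">i", f.read(4))[0]

        first_sp = source_point(0) if n_traces else None
        last_sp = source_point(n_traces - 1) if n_traces else None

    return {
        "textual_header": textual,
        "line_number": line_number,
        "n_traces": n_traces,
        "first_source_point": first_sp,
        "last_source_point": last_sp,
    }
```

The returned dictionary can be compared against the database and contract records; the textual header usually needs further parsing, because its content is free-form.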

The results can then be evaluated against the Source of Truth (database) and against the Source of Entitlement (contracts) to identify and resolve what the data is. This creative approach, using existing systems, can determine and ultimately rebuild the relationship between the data, the database and the contracts.

Once you have rebuilt the relationship between the data, the contracts and the database, the next step is to write a digital tag in the binary header consisting of the line name as recorded in the database along with the contract file number(s). Using the binary header helps secure the information, as it is difficult to alter, unlike the readable text within the EBCDIC header or the filename. The embedded digital tag (line and contract number) in the binary header allows the crawling application to easily locate the seismic line name and contract number, regardless of the interpretation file names, and to identify whether the corporation is entitled to use the data.
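One possible way to write and read back such a tag is sketched below. The byte range (file bytes 3533 to 3600 of the binary header, assumed here to be unassigned), the `line|contract,contract` layout and the function names are all hypothetical. The chosen bytes should be checked against the SEG-Y revision and the vendor software in use before anything is written to production data.

```python
import os

TAG_OFFSET = 3532   # 0-based file offset of byte 3533 (assumed unassigned)
TAG_LENGTH = 68     # bytes 3533-3600

def write_tag(path, line_name, contract_numbers):
    """Write 'line|contract,contract' into the assumed spare bytes of the binary header."""
    if os.path.getsize(path) < 3600:
        raise ValueError("file is too short to be a SEG-Y file")
    tag = f"{line_name}|{','.join(contract_numbers)}".encode("ascii")
    if len(tag) > TAG_LENGTH:
        raise ValueError("tag does not fit in the reserved bytes")
    with open(path, "r+b") as f:          # update in place, do not truncate
        f.seek(TAG_OFFSET)
        f.write(tag.ljust(TAG_LENGTH, b"\x00"))

def read_tag(path):
    """Return (line_name, [contract numbers]) or None if no tag is present."""
    with open(path, "rb") as f:
        f.seek(TAG_OFFSET)
        raw = f.read(TAG_LENGTH).rstrip(b"\x00")
    if not raw:
        return None
    line_name, _, contracts = raw.decode("ascii").partition("|")
    contract_list = contracts.split(",") if contracts else []
    return line_name, contract_list
```

Because the tag sits at a fixed offset, a crawler can call `read_tag` on any SEG-Y file it finds without relying on the filename.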

Continuous monitoring, reporting and manipulation of the data (data compliancy) in the user environment is a critical step in moving the corporation to a data governance state. Data is dynamic and is constantly acquired, moved, renamed and divested. A governed environment puts the corporation in a stronger legal position.

In Alberta, as in many other jurisdictions, it is the accountability of the user to be certain about data use entitlement.

“APEGA members who fail to consider, or who disregard, the right and obligations of data owners or licensees could place themselves in a position where their actions might constitute unprofessional conduct or could result in legal liability.” https://www.apega.ca/assets/PDFs/geophysical-data.pdf

Conclusion

To ascertain compliance, ask these guiding questions. Is there compliance in the use of data within the corporation? Is there confirmed proof of entitlement to use the data and in the manner for which it was intended? Is the data audit-ready?

To be fully compliant, the data must be measured against the database as well as the contracts. A six stage compliancy process, resulting in a data governance state, is required to constantly monitor the digital environment to minimize and mitigate risk.

Becoming a data compliant corporation is no small feat, and active participation from many departments is required for its success. Apply technology wherever possible, as it makes visible the complexity of the situation and can minimize labor-intensive processes. Upfront effort and costs may be required; however, the long-term gain and sustainability of the corporation’s data governance achievements will rely heavily on the consistent monitoring and reporting established through this process today. Changes to information acts, copyright laws and professional standards are making data compliance critical, and it is imperative that corporations take the right steps to ensure the data they use meets strict contractual obligations.
