What Geo-Science Data Architecture Can Do For You!

Preface

Information technology (IT) has, over the last couple of decades, considerably changed our lives and how we interact with the world. The IT revolution has forced each of us to learn new skills in the workplace as we try to keep abreast of technological innovation. The title of this paper, however, provokes an interesting question, one that should not be taken lightly or dismissed as mere sensationalism. The question is simple to ask, but the answer is anything but simple: are you managing your IT solutions, or is IT still managing you? The answer is rooted in the issue of control. Who or what has control could determine whether technology passes you by or whether you take hold of it and make it work for you. It could be the difference between a company that truly leverages IT and one for which IT is just a cost of doing business. To take control, a certain amount of knowledge about information technology is the price of admission. This paper is designed to give the geo-science reader just that: a broad perspective on information technology as applied to geology and geophysics within the oil and gas industry, along with some fresh insights that may improve productivity and, ultimately, value to a corporation. The paper frames this discussion in the context of geo-science data architecture.

Background Information

Over the last couple of decades, the geological and geophysical disciplines within the oil and gas industry have witnessed many technological changes that showcase the evolution of information technology. Large mainframe computers have been replaced by Unix-based workstations or by personal computers (PCs) running Linux or Windows. Twenty years ago seismic sections were timed by hand; today interpretive software times seismic profiles accurately and posts the values onto a map. Because this software can manipulate the data and extract additional information, the interpreter now uses it to measure properties of the seismic data and to build a better understanding of the subsurface. Data that was once delivered in hardcopy is now provided digitally by service companies through secure internet portals or FTP sites. Well-log data can be transmitted via satellite directly to the geologist's desktop. Access to data, reports and information via the internet has permanently changed how people handle and store data and information. Gone are the central libraries within oil and gas companies that faithfully archived this information. Even communication between people or business units is now handled readily by e-mail: documents are routinely attached to messages rather than photocopied and couriered to the other party. Clearly, much has changed, and numerous other examples could be cited. Information technology has altered how business is conducted and how people do their work.

During this period, spending on information technology ballooned as companies that viewed technology as a strategic advantage invested capital and human resources to ensure their competitiveness. Most corporations created entire IT departments focused on acquiring and deploying this technology. As the potency of IT increased, so did its ubiquity. An article by Nicholas Carr entitled “IT Doesn’t Matter,” published in the Harvard Business Review in May 2003, encapsulates this evolution. In what has been described as a seminal piece, Carr contends that it is not the ubiquity of a resource that provides strategic value but its scarcity: what defines a competitive advantage is the ability to do something that a competitor cannot. As information technology becomes more common and accessible, its strategic value decreases. Proprietary technology and internal strategic advantage give way to infrastructural technology with shared, open access as an integral part of doing business. The absence of standards gives an entity possessing a proprietary technology a competitive advantage, whereas industry standards for an infrastructural technology level the playing field for most companies.

Carr contends that, as an infrastructural technology, IT simply transports, processes and stores data and information. IT has progressed from a proprietary technology twenty years ago to a commodity that is readily accessible. As information technology spreads, the cost of acquiring it drops, standards evolve and the strategic advantage to a corporation diminishes. In the July 2003 edition of the Harvard Business Review, just two months after Carr’s article, a rebuttal by John Seely Brown asserts that IT by itself seldom provides strategic differentiation. Instead, IT provides the opportunity, the possibilities and the option to separate oneself from the competition, and companies that act upon these possibilities will differentiate themselves. Although the technology may be ubiquitous, the insight to harness its potential is not evenly distributed. “Companies that mechanically insert IT into their businesses without changing their practices… will only destroy IT’s economic value.” For this reason, Brown contends, IT spending has seldom led to superior corporate financial results. As Carr asserts, IT has become a cost of doing business: having it confers no advantage, but being without it means being uncompetitive. Brown contends that competitive advantage in today’s IT environment lies in knowing how to harness its capabilities and in changing the way business is done by establishing new business practices. The insight needed to make IT create economic value, by organizing technological components into value-adding architectures, is in short supply. Brown contends that competitive advantage belongs to those who do just that: they harness today’s technological capabilities and thereby truly leverage technology.

Within the oil and gas industry, IT departments and software vendors promoted IT applications as a utopian solution. Applications and system hardware were sold as panaceas for maintaining corporate competitiveness, which was portrayed as a matter of obtaining enhanced functionality on ever faster hardware running operating systems that improved computational speed and data transfer. This utopian dream quickly evaporated as corporations realized that data formats, data transfer, file import and export, hardware compatibility, operating system compatibility and even employee education were all factors in deploying information technology effectively. The competitive advantage of IT was no longer an access issue for those who could afford it; it was fast becoming an implementation problem.

Geophysics is a mathematically based science requiring computational speed and memory capacity to process large arrays of numbers. For this reason, many innovations in geophysics have paralleled developments in computer technology. In the early years, large mainframe computers were used to perform calculations on large numerical arrays. With the roll-out of Unix, the geophysical community migrated to the more cost-effective platform of desktop workstations, which provided computational speed with the convenience of distributed local access. Recent developments in personal computers have given geophysicists the speed and capacity to perform these calculations on portable laptop computers, and software vendors have responded by releasing Windows-based versions of their Unix programs.

The geological community did not need the expensive computational speed of mainframe computers or the Unix platform. Geologists working with well-log data required access to digital log databases to build cross-sections and to analyze the properties of various rock formations, so their preferred hardware platform was the personal computer, the least expensive option. These hardware disparities between geologists and geophysicists hindered the sharing of information between the two disciplines. For years, the industry solution was to contour the resulting maps in a similar fashion and rely on people to correlate and integrate the two data sets. Some software vendors responded by adding geological functionality in new modules for Unix-based geophysical workstations, while other vendors developed geophysical interpretation software for the PC platform. As oil and gas companies requested better file import and export capabilities, they began to integrate data and share information between geologists and geophysicists, linking and networking software packages into a multi-disciplinary digital workflow that combined knowledge from various disciplines. A push for standards ensued, and industry standards evolved that supported generic file import and export structures across different operating systems, further assisting data transfer and data sharing.

This has led to the information technology environment we find ourselves in today. Oil and gas companies are migrating to common hardware platforms running common operating systems. The personal computer running Windows NT or another version of Windows, most often in the form of a laptop, is the preferred platform. Companies with considerable capital invested in Unix-based technology are delaying their migration to the PC until that platform fully matches the computational speed and capacity of the Unix-based environment.

What is Geo-Science Data Architecture?

Geo-science data architecture is the design of geological, geophysical and petrophysical information workflows, and of the interaction between databases and application software packages, that facilitate data interpretation and, ultimately, the retention of the knowledge derived from analyzing that information. It is the ability to store data accurately, access it readily, derive information from it and then retain the knowledge learned.

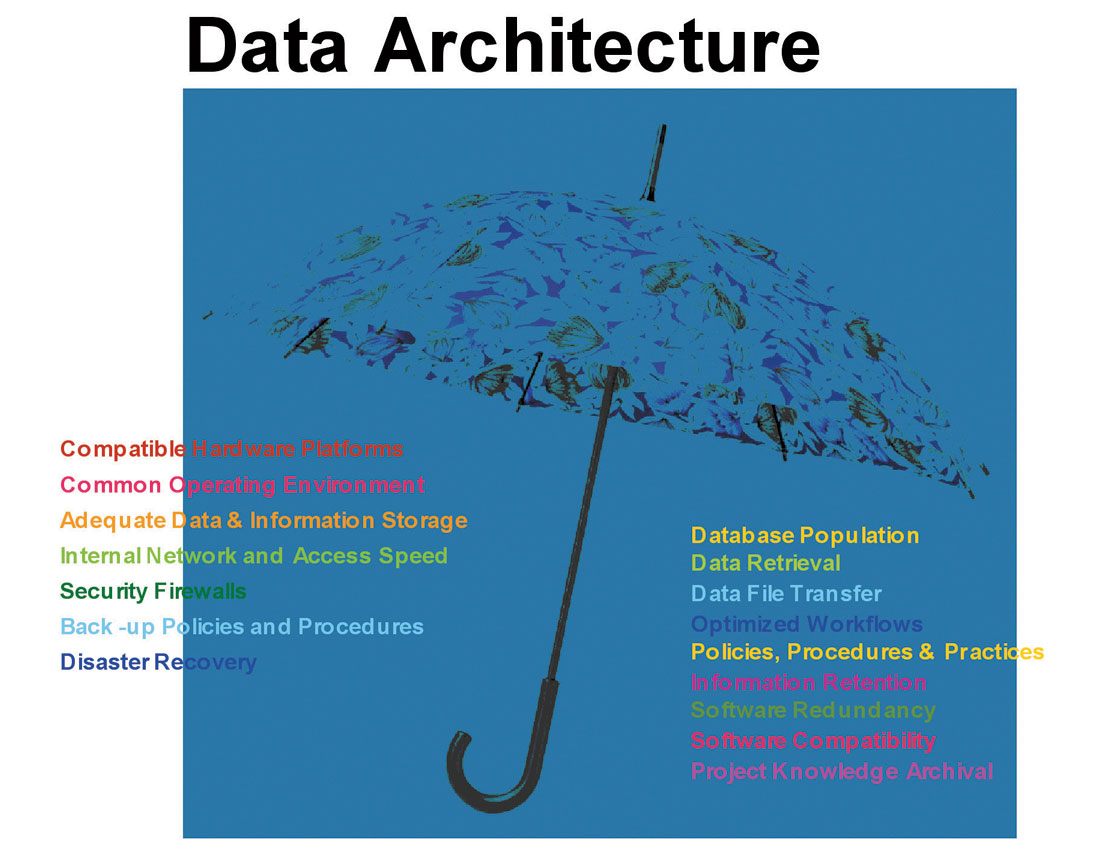

Database management practices establish responsibility and accountability for the initial capture of data, emphasizing accurate and complete population of the database. Data architecture, however, is much more than data management. It is a management system of practices, policies and procedures that facilitate the progression, or workflow, from data to knowledge. As illustrated in Figure 1, data architecture is an umbrella that spans numerous IT issues: data management, hardware and software, operating systems, system security and back-up, information analysis, knowledge retention, result presentation, system integration, data file transfer and the like. It is a context that re-frames IT issues and captures their full breadth as they relate to facilitating data workflows for the end-user.

Managing Your Digital Data Workflows

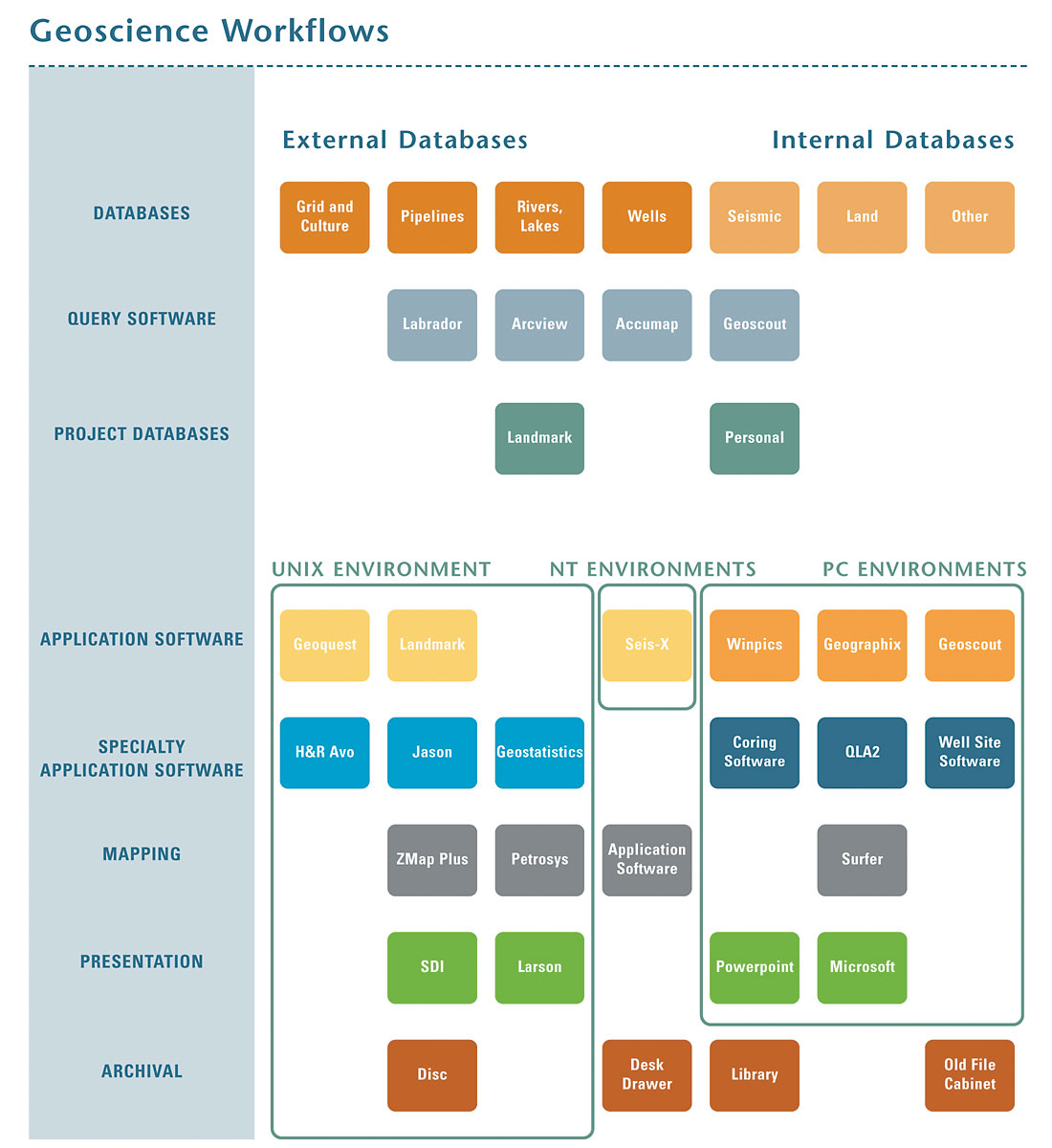

To fully appreciate the concepts of data architecture, it is imperative to follow the data along its path and through its evolution into information and, ultimately, knowledge. A flow chart such as the example shown in Figure 2 can be used to map this progression. The process begins with the database, the foundation upon which everything else is built. Accurate and complete database population is essential, as any errors, inaccuracies or poor data-entry practices quickly nullify the integrity and value of the data source. Databases can be maintained and supported internally within an organization, or accessed externally through a subscription service. The obligation to populate the database resides with the database owner. Over the decades, oil and gas companies have invested considerable capital to acquire proprietary data in order to mitigate the drilling risk associated with finding and producing hydrocarbons. One would expect this data to be dutifully catalogued and archived in a database with almost religious vigor, but this is seldom the case. Oil and gas companies are not in the database management business; they are in the business of finding and producing hydrocarbons. Numerous service companies have attempted to fill this data management void by providing access to public data such as well logs and map grid files. Some data, however, such as land entitlement and seismic data coverage, is unique and proprietary to each company, so the oil and gas company must assume responsibility for populating its own database accurately. Never is this plight more evident than when a company wishes to divest a property or asset and must first audit its records to clarify entitlement. Hence, for much of the 1990s, service companies preached the merits of good data management practices.
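As one illustration of checking for accurate and complete database population before data moves further along the workflow, the following Python sketch audits well-header records for missing or implausible values and separates records that are ready to load from those needing correction. The `WellHeader` fields, the `audit` and `partition` helpers and the specific range checks are hypothetical and chosen only for illustration; a real corporate or vendor database would apply its own data model and validation rules.

```python
from dataclasses import dataclass
from typing import List, Optional


# Hypothetical well-header record; field names are illustrative only.
@dataclass
class WellHeader:
    well_id: str
    latitude: Optional[float]
    longitude: Optional[float]
    total_depth_m: Optional[float]


# Fields that must be populated before a record is considered complete.
REQUIRED = ("latitude", "longitude", "total_depth_m")


def audit(record: WellHeader) -> List[str]:
    """Return a list of problems found; an empty list means the record passed."""
    problems = []
    for name in REQUIRED:
        if getattr(record, name) is None:
            problems.append(f"{record.well_id}: missing {name}")
    # Simple plausibility (range) checks on the values that are present.
    if record.latitude is not None and not -90.0 <= record.latitude <= 90.0:
        problems.append(f"{record.well_id}: latitude out of range")
    if record.longitude is not None and not -180.0 <= record.longitude <= 180.0:
        problems.append(f"{record.well_id}: longitude out of range")
    if record.total_depth_m is not None and record.total_depth_m <= 0:
        problems.append(f"{record.well_id}: non-positive total depth")
    return problems


def partition(records):
    """Split records into those ready to load and those needing correction."""
    clean, rejected = [], {}
    for record in records:
        issues = audit(record)
        if issues:
            rejected[record.well_id] = issues
        else:
            clean.append(record)
    return clean, rejected


# Example: one good record, one incomplete, one with implausible values.
wells = [
    WellHeader("W-001", 51.05, -114.07, 2450.0),
    WellHeader("W-002", None, -114.10, 1800.0),
    WellHeader("W-003", 95.0, -113.90, -10.0),
]
clean, rejected = partition(wells)
print([w.well_id for w in clean])
print(rejected)
```

Rejected records carry a list of reasons, so whoever owns the database can correct them at the source rather than having each downstream user discover the same errors independently.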

Whether the database is located internally or offsite, oil and gas companies most typically access geo-science data through a Geographic Information System (GIS) browser, which can communicate with several data hubs at once to retrieve the data requested. Numerous industry browsers exist. Sometimes the data is then placed into a project database before being loaded into an application software package. Data begins to become information at the application software stage, where geo-science professionals use various packages to make interpretive judgments about their data. When a technical problem demands greater rigor, a specialty application package can be used. The resulting maps depict the geo-scientists' knowledge and understanding of subsurface features. This knowledge is often presented in a meeting, and sometimes captured in a memo, a report or a poster-style montage. The natural progression depicted in Figure 2 is for data to evolve into information through technical analysis, and for knowledge to result.

Many things inhibit this idealized workflow. Different hardware platforms can make it difficult for software packages to transfer data or information. Operating-system-specific file structures, vendor-specific formats and file re-formatting can turn a simple exercise into one that takes days. Vertically integrated software that accesses the data and supports the analysis that turns it into information, and ultimately into increased knowledge, is often perceived as a huge advantage to the end-user. However, added functionality in a competing package often entices users toward a “best of breed” approach, which in turn spawns the multi-vendor problem. Industry standards groups such as POSC and PPDM attempt to provide a common data model for software development, nomenclature and file formats, and software vendors have tried to make life simpler by supporting numerous file import and export formats. Now that PC-based hardware possesses such computational speed, migration to this platform is under way.
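To illustrate why a common data model eases the multi-vendor problem, the following Python sketch gives each export format its own reader that converts into a single in-house `Pick` structure, so downstream tools need to understand only one format. Both vendor formats, the `Pick` fields and the `load` dispatcher are hypothetical; this is not the POSC or PPDM data model, only a minimal sketch of the idea under those assumptions.

```python
from typing import List, NamedTuple


class Pick(NamedTuple):
    """Hypothetical common in-house structure for one horizon pick."""
    line: int
    trace: int
    time_ms: float


def read_vendor_a(text: str) -> List[Pick]:
    # Hypothetical format A: header row, then comma-separated "line,trace,time_ms".
    picks = []
    for row in text.strip().splitlines()[1:]:
        line, trace, t = row.split(",")
        picks.append(Pick(int(line), int(trace), float(t)))
    return picks


def read_vendor_b(text: str) -> List[Pick]:
    # Hypothetical format B: no header, whitespace-separated "trace line time_s";
    # columns are reordered and seconds are converted to milliseconds.
    picks = []
    for row in text.strip().splitlines():
        trace, line, t = row.split()
        picks.append(Pick(int(line), int(trace), float(t) * 1000.0))
    return picks


# One reader per format; downstream tools only ever see Pick records.
READERS = {"vendor_a": read_vendor_a, "vendor_b": read_vendor_b}


def load(fmt: str, text: str) -> List[Pick]:
    """Dispatch to the reader registered for a format name."""
    reader = READERS.get(fmt)
    if reader is None:
        raise ValueError(f"no reader registered for format {fmt!r}")
    return reader(text)


# The same pick exported by two different (hypothetical) vendors.
a = load("vendor_a", "line,trace,time_ms\n101,2000,1250.0\n")
b = load("vendor_b", "2000 101 1.25\n")
print(a == b)
```

With this arrangement, supporting another vendor means writing one more reader, rather than teaching every downstream application, and every technologist, a new file format.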

Carr contends that once a technology becomes common, its competitive advantage diminishes. Workstations were once the domain of large oil and gas companies; today, junior companies can access the necessary technology on a PC platform for a fraction of the cost of just a few years ago. Carr would conclude that any competitive advantage has either disappeared or diminished appreciably. Brown, however, asserts that new practices, policies and procedures must be put in place to truly leverage information technology, and that when this is accomplished, competitive advantage is restored. This is essentially the stage we find ourselves in today. Streamlining workflows within an organization leads to less confusion, faster cycle times, higher productivity and less frustrated personnel. Removing software redundancy, and with it the need to support multiple solutions, simplifies things appreciably. Consider the technologist who supports three or four geologists or geophysicists who all want to do the same thing, but on different software. The technologist often has to learn numerous vendor-specific file formats to support the team, which is hardly efficient. Those who learn to manage these problems and drive end-user-based solutions will succeed. However, there is currently a shortage of people able to do this, because it requires a seasoned multi-disciplinary background combined with a basic understanding of the IT world. In other words, an architect is required to design the house that everyone is to build! The data architect understands the IT world and what is possible, and also knows what the end-user, or consumer, wants. Whether the architect is designing houses or digital data and information highways, the role is essentially the same.

Within the oil and gas industry, this involves designing workable solutions compatible with today’s business unit structures. Those who accomplish this first can gain a competitive advantage, as their organizations will be more productive, more responsive and more cost-effective than the competition.

A shift in mindset, however, is required to achieve this success. Reflecting back on the title of this paper, one must ask who is in control and who is driving the solutions. The solutions need not be based on “bleeding edge” technology. If the end-user drives the solutions with the aid of expert architects, a practical, meaningful and value-added solution will result. Harnessing your IT capabilities, rather than being a victim of ever-evolving technology, is the key to competitive advantage.

Conclusions

Information technology (IT) is fast becoming commonplace. While some may believe that this ubiquity erodes the competitive advantage associated with IT, competitive advantage is maintained by those who harness the technology currently available. By adopting new business solutions, practices, policies and procedures, companies can streamline digital data workflows to better support geo-science professionals and technologists. IT issues such as hardware, back-ups and common operating environments need to be reviewed holistically, together with software programs, data management and knowledge archival, in order to fully appreciate the concept of data architecture. Companies that apply this data architecture perspective not only maintain competitive advantage but turn it into a strategic one.

Join the Conversation

Interested in starting, or contributing to a conversation about an article or issue of the RECORDER? Join our CSEG LinkedIn Group.